Muthu Kumar Chandrasekaran, PhD

@muthukumarc87

Scientist #NLProc #PhD #AI #ML | Social Injustice in Education | Tweets don't represent my employer

ID: 53433085

http://linkedin.com/in/muthukumarc87

03-07-2009 16:45:10

1.1K Tweets

264 Followers

506 Following

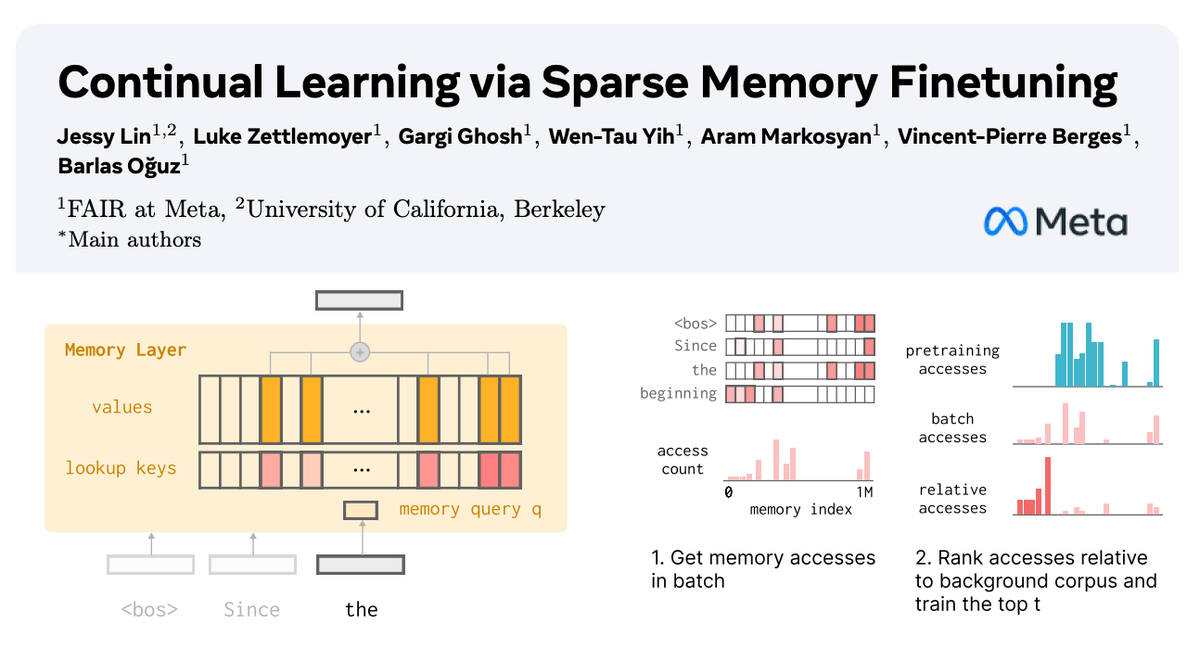

🧠 How can we equip LLMs with memory that allows them to continually learn new things? In our new paper with AI at Meta, we show how sparsely finetuning memory layers enables targeted updates for continual learning, w/ minimal interference with existing knowledge. While full