ML@CMU

@mlcmublog

Official Twitter account for the ML@CMU blog @mldcmu @SCSatCMU

ID: 1233552889055834112

https://blog.ml.cmu.edu/ 29-02-2020 00:42:45

110 Tweets

2.2K Followers

20 Following

blog.ml.cmu.edu/2024/10/07/vqa… With the rapid advancement of text-to-visual models like Sora, Midjourney, and Stable Diffusion, evaluating how well the generated imagery follows input text prompts has become a major challenge. However, work by Zhiqiu Lin, Deepak Pathak, Baiqi Li, Emily

blog.ml.cmu.edu/2024/12/06/scr… A critical question arises when using large language models: should we fine-tune them or rely on prompting with in-context examples? Recent work led by Junhong Shen and collaborators demonstrates that we can develop state-of-the-art web agents by

blog.ml.cmu.edu/2024/12/12/hum… Have you had difficulty using a new machine for DIY or latte-making? Have you forgotten to add spice during cooking? Riku Arakawa, Hiromu Yakura, Vimal Mollyn, Jill Fain Lehman, and Mayank Goel are leveraging multimodal sensing to improve the

blog.ml.cmu.edu/2025/01/08/opt… How can we train LLMs to solve complex challenges beyond just data scaling? In a new blog post, Amrith Setlur, Yuxiao Qu, Matthew Yang, Lunjun Zhang, Virginia Smith, and Aviral Kumar demonstrate that Meta RL can help LLMs better optimize test-time compute

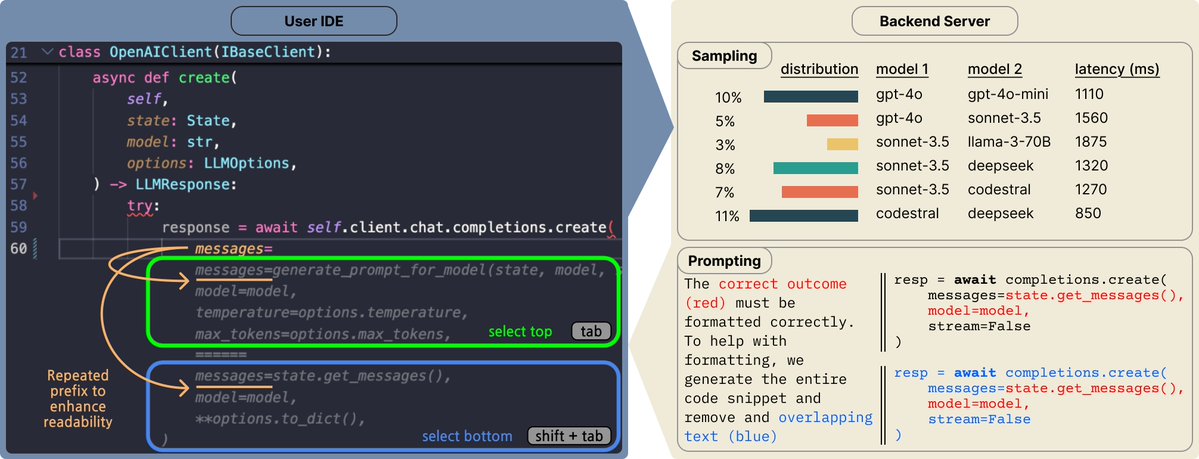

blog.ml.cmu.edu/2025/04/09/cop… How do real-world developer preferences compare to existing evaluations? A CMU and UC Berkeley team led by Wayne Chi and Valerie Chen created Copilot Arena to collect user preferences on in-the-wild workflows. This blog post overviews the design and

blog.ml.cmu.edu/2025/04/18/llm… 📈⚠️ Is your LLM unlearning benchmark measuring what you think it is? In a new blog post authored by Pratiksha Thaker, Shengyuan Hu, Neil Kale, Yash Maurya, Steven Wu, and Virginia Smith, we discuss why empirical benchmarks are necessary but not

blog.ml.cmu.edu/2025/05/22/unl… Are your LLMs truly forgetting unwanted data? In this new blog post authored by Shengyuan Hu, Yiwei Fu, Steven Wu, and Virginia Smith, we discuss how benign relearning can jog an unlearned LLM's memory to recover knowledge that is supposed to be forgotten.

blog.ml.cmu.edu/2025/06/01/rlh… In this in-depth coding tutorial, Zhaolin Gao and Gokul Swamy walk through the steps to train an LLM via RL from Human Feedback!