Andrei Mircea

@mirandrom

PhD student @Mila_Quebec ⊗ mechanistic interpretability + systematic generalization + LLMs for science ⊗ mirandrom.github.io

ID: 938449528348381185

http://mirandrom.github.io 06-12-2017 16:46:18

59 Tweets

88 Followers

359 Following

Thomas Jiralerspong: Work led by Jin Hwa Lee and Thomas Jiralerspong, with Jade Yu and Yoshua Bengio. Updated preprint: arxiv.org/abs/2410.01444

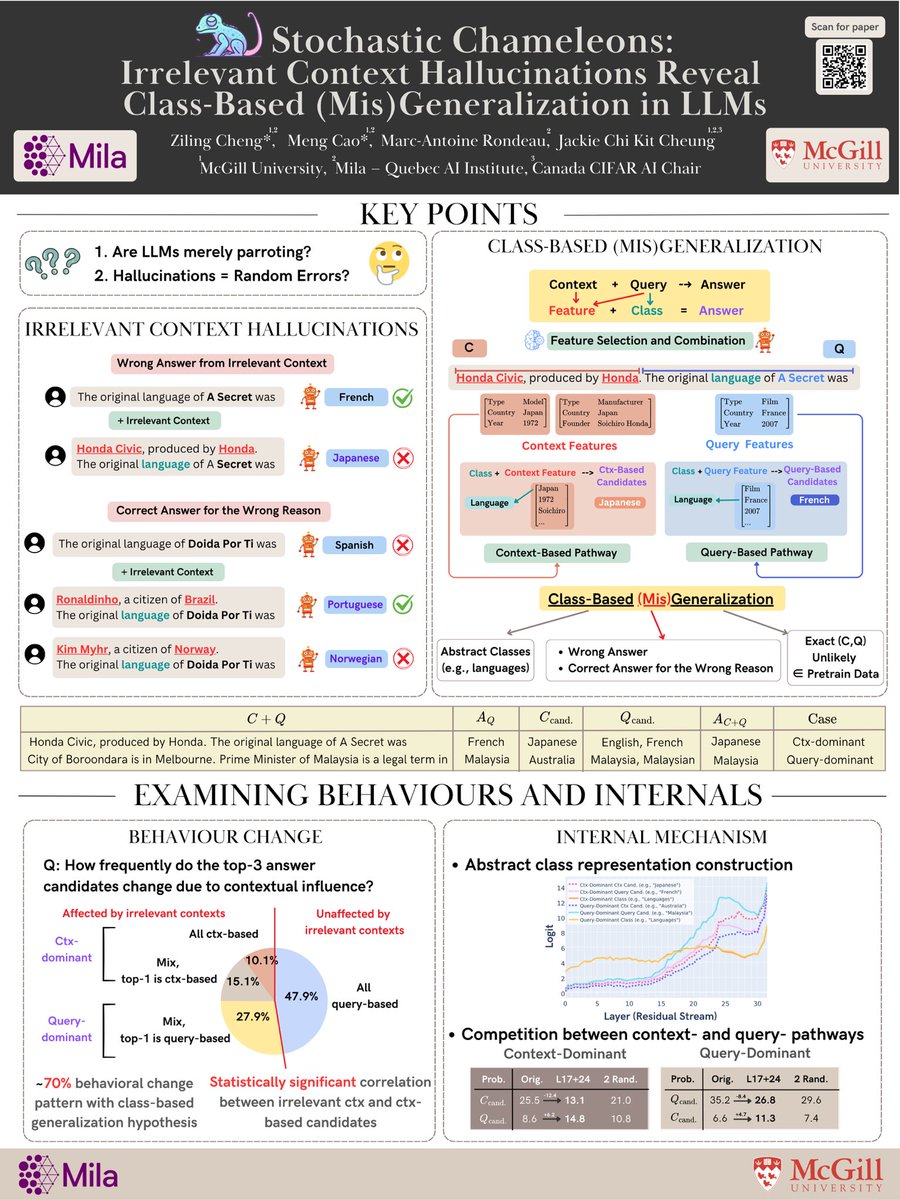

Mechanistic understanding of systematic failures in language models is something more research should strive for IMO. This is really interesting work in that vein by Ziling Cheng @ ACL 2025, highly recommend you check it out.

Life update: I’m excited to share that I’ll be starting as faculty at the Max Planck Institute for Software Systems this Fall!🎉 I’ll be recruiting PhD students in the upcoming cycle, as well as research interns throughout the year: lasharavichander.github.io/contact.html

We all agree that AI models/agents should augment humans instead of replacing us in many cases. But how do we pick when to have AI collaborators, and how do we build them? Come check out our #ACL2025NLP tutorial on Human-AI Collaboration w/ Diyi Yang and Joseph Chee Chang, 📍7/27, 9am @ Hall N!

How can we use models of cognition to help LLMs interpret figurative language (irony, hyperbole) in a more human-like manner? Come to our #ACL2025NLP poster on Wednesday at 11AM (exhibit hall, exact location TBA) to find out! McGill NLP Mila - Institut québécois d'IA ACL 2025

What do systematic hallucinations in LLMs tell us about their generalization abilities? Come to our poster at #ACL2025 on July 29th at 4 PM in Level 0, Halls X4/X5. Would love to chat about interpretability, hallucinations, and reasoning :) McGill NLP Mila - Institut québécois d'IA ACL 2025