Minhyuk Sung

@minhyuksung

Associate professor @ KAIST | KAIST Visual AI Group: visualai.kaist.ac.kr.

ID: 1446849828638449664

https://mhsung.github.io/

09-10-2021 14:48:06

102 Tweets

1.1K Followers

567 Following

#NeurIPS2024 Thursday afternoon, don't miss Seungwoo Yoo's poster on Neural Pose Representation, a framework for pose generation and transfer based on neural keypoint representation and Jacobian field decoding. Thu 4:30 p.m. - 7:30 p.m. East #2202 🌐 neural-pose.github.io

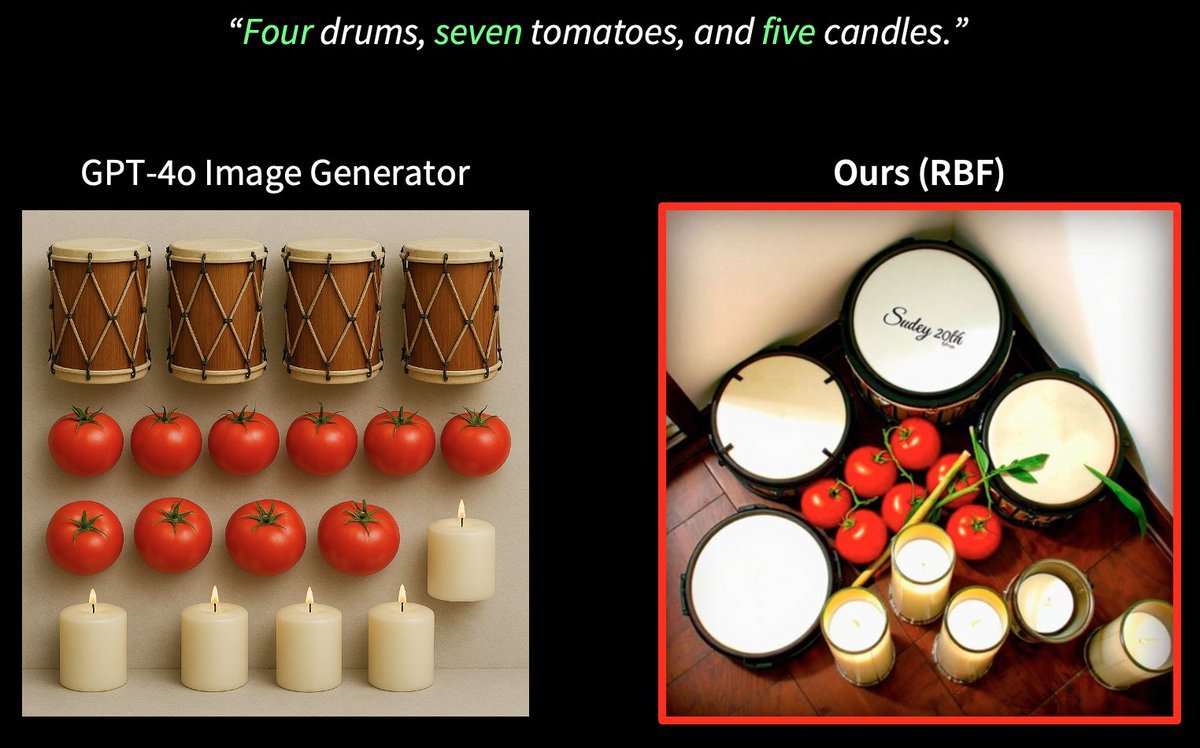

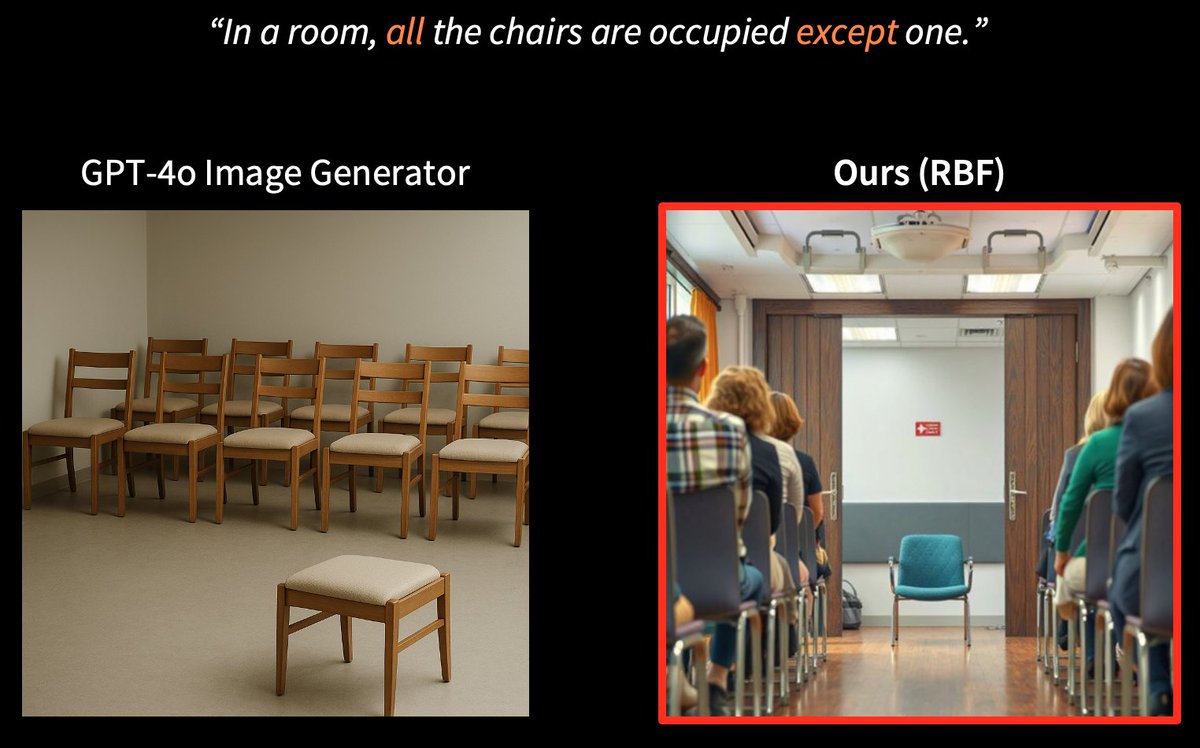

#NeurIPS2024 DiT not only generates higher-quality images but also opens up new possibilities for training-free spatial grounding. Come visit Phillip (Yuseung) Lee's GrounDiT poster to see how it works. Fri 4:30 p.m. - 7:30 p.m. East #2510 🌐 groundit-diffusion.github.io

Had an incredible opportunity to give two lectures on diffusion models at MLSS-Sénégal 🇸🇳 in early July! Slides are available here: onedrive.live.com/?redeem=aHR0cH… Big thanks to Eugene Ndiaye for the invitation!

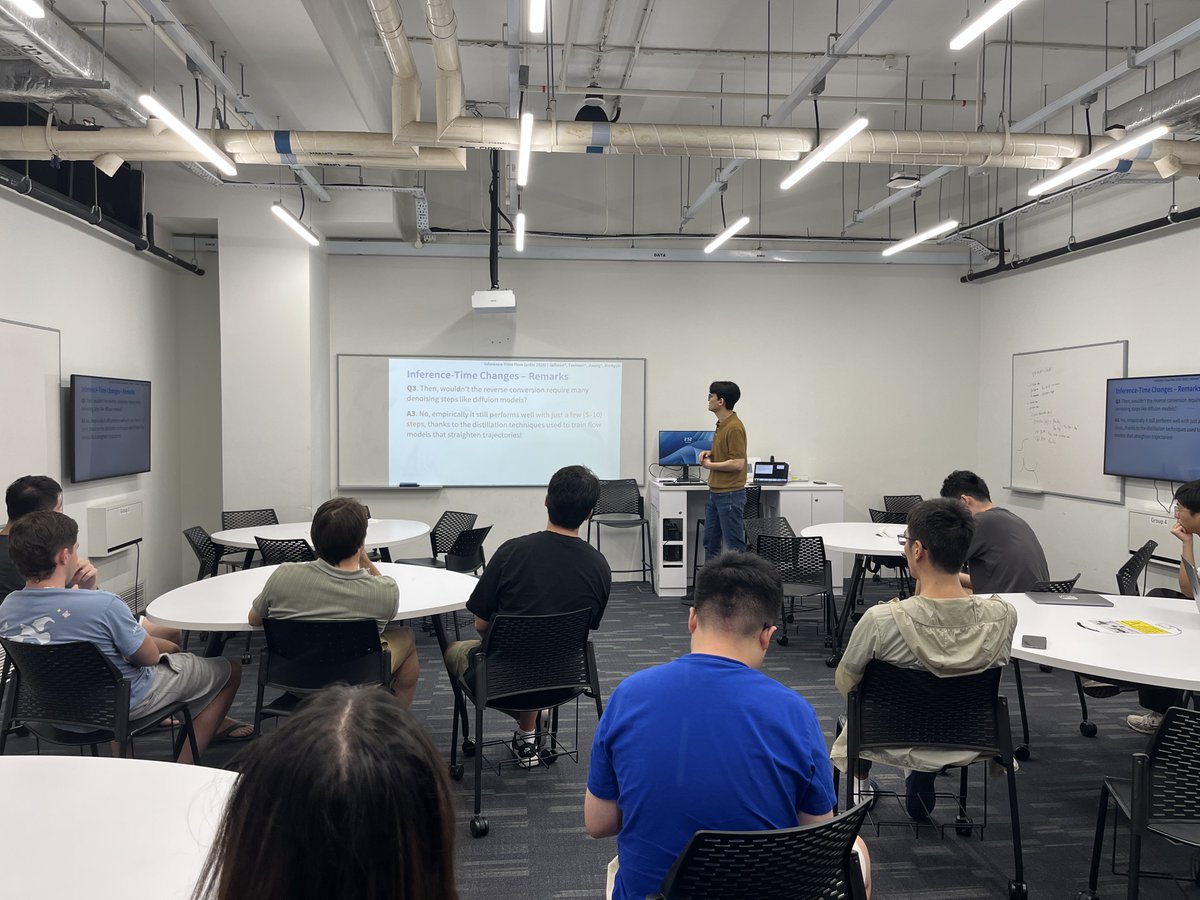

Diffusion model course at SIGGRAPH 2025 is happening NOW in the West Building, Rooms 109–110. w/ Niloy Mitra, Or Patashnik, Daniel Cohen-Or, Paul Guerrero, and Juil Koo.

Great summary of the latest image & video diffusion models! Our #SIGGRAPH2025 course spans everything from real-world applications to techniques like acceleration & flow matching. Slides: geometry.cs.ucl.ac.uk/courses/diffus… w/ Niloy Mitra, Or Patashnik, Daniel Cohen-Or, Paul Guerrero, Minhyuk Sung