Jack Cole

@mindsai_jack

AI Researcher, Clinical Psychologist, App Dev

ID: 1513590800155820038

https://tufalabs.ai 11-04-2022 19:04:12

838 Tweets

2.2K Followers

277 Following

AI beliefs & reward functions ≠ ours. While games like Go and chess have clear win/lose rewards, complex real-world situations don't. "Reward is all you need" is partly true, but where does the reward function come from? This is Dr. Jeff Beck from Noumenal Labs.

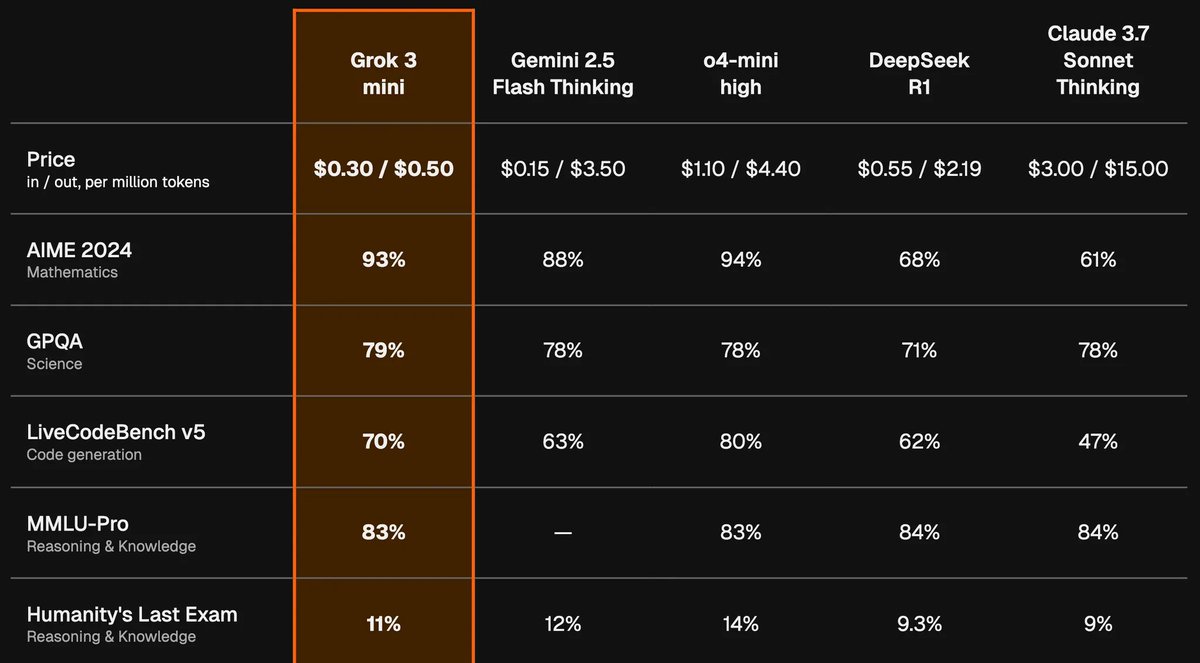

Bryan Landers made this sweet graphic for us. It's an internal tool we've made public, hosted here: arcprize.org/2025-sota (not guaranteed to be up to date, but we're getting there).

In a recent discussion, Prof. Kevin Ellis and Dr. Zenna Tavares of Basis explored pathways toward artificial intelligence capable of more human-like learning, focusing on acquiring abstract knowledge from limited experience through active interaction. 🧵👇

Isaac Liao has open-sourced his "ARC-AGI Without Pretraining" notebook on Kaggle. You can use it today and enter ARC Prize 2025. It currently scores 4.17% on ARC-AGI-2 (5th place). Amazing mid-year sharing and contribution. Thank you, Isaac!