Mihir Prabhudesai

@mihirp98

CMU Robotics PhD | Research Intern @ Google

ID: 1037594703905136641

http://mihirp1998.github.io/ 06-09-2018 06:53:49

86 Tweets

677 Followers

372 Following

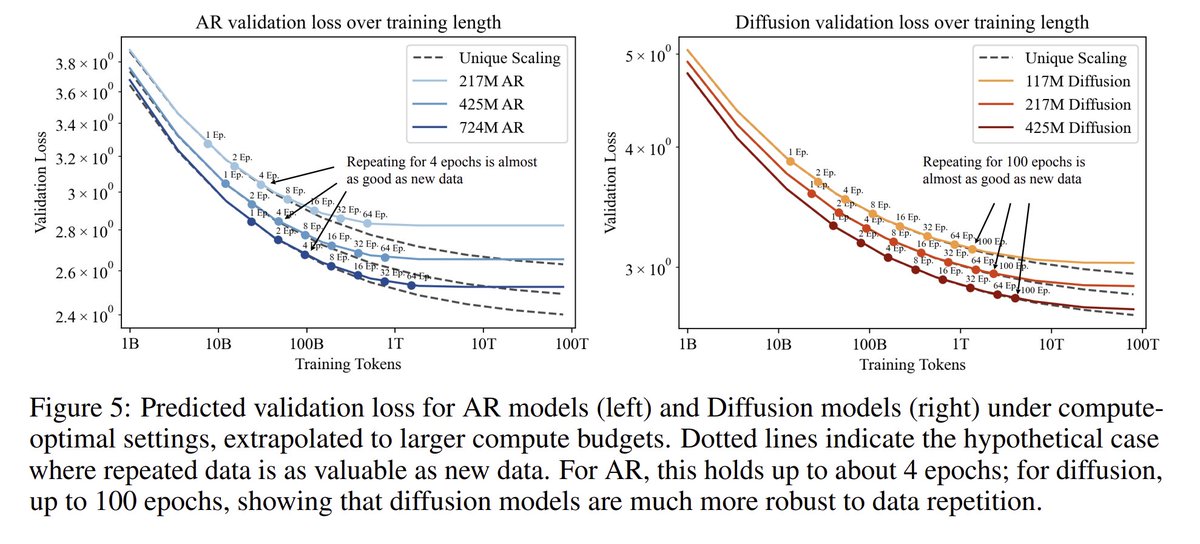

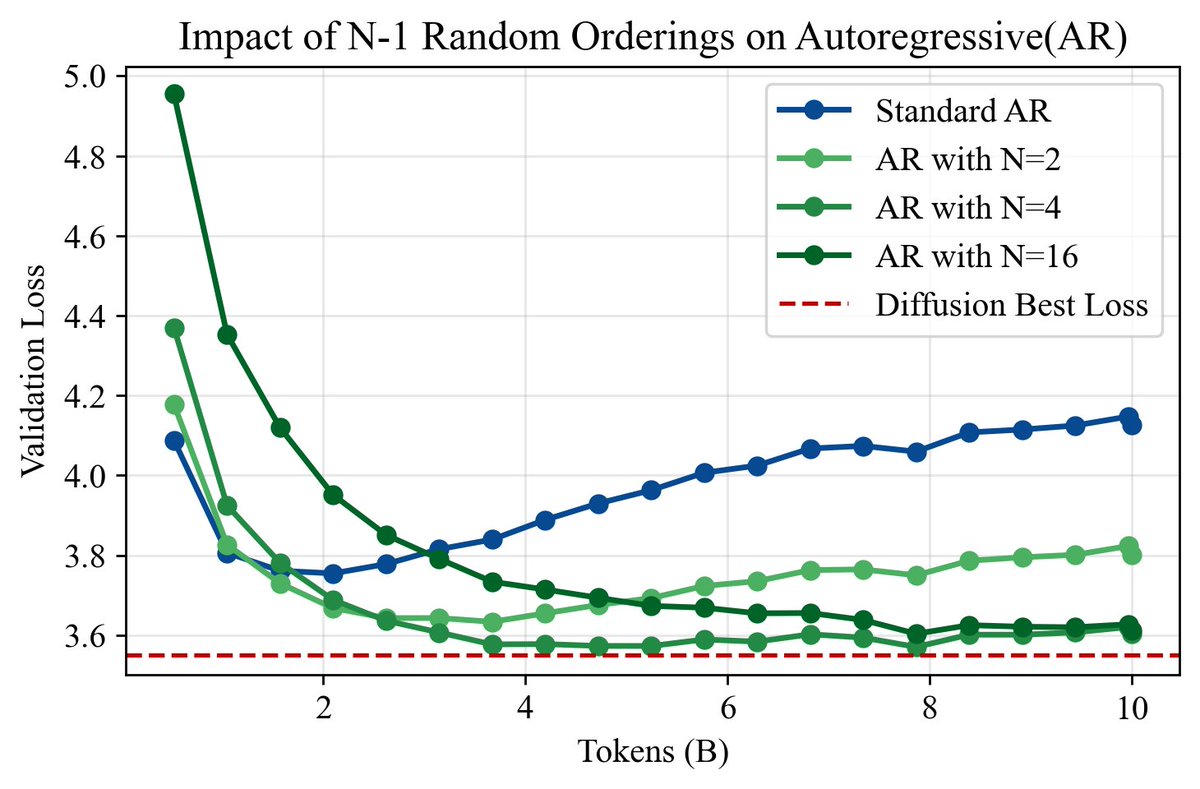

Check it out! 🚀 "Diffusion Beats Autoregressive in Data-Constrained Settings". They show that diffusion LLMs outperform autoregressive LLMs when allowed to train for multiple epochs! #CMUrobotics Work from Mihir Prabhudesai & Mengning Wu

I'll be joining the faculty at Johns Hopkins University late next year as a tenure-track assistant professor in JHU Computer Science. Looking for PhD students to join me in tackling fun problems in robot manipulation, learning from human data, understanding+predicting physical interactions, and beyond!