Michael Qian

@michaelqwl

PhD student in Computer Science at University of Southern California, Haptics, Robotics, HCI research advised by Professor Heather Culbertson

ID: 1433471478071169034

02-09-2021 16:46:58

15 Tweets

23 Followers

63 Following

Our graffiti painting robot is getting better! Slowly but steadily :) With @florez_jd, Frank Dellaert, Seth Hutchinson, and Michael Qian. Powered by #gtsam

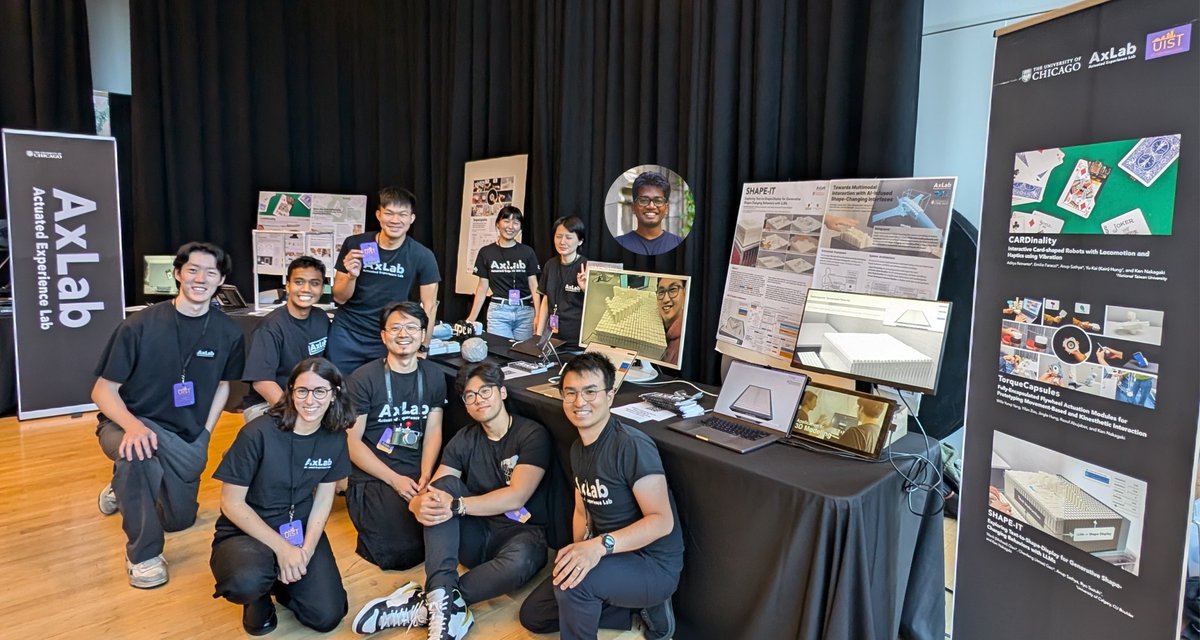

Graduation season is here, and AxLab - Actuated Experience Lab proudly has 8(!) members departing for their next steps! So many memories with them: building the lab, exhibiting (Ars Electronica, SXSW, etc.), writing papers, attending conferences, and having fun together (incl. today's T-shirt activity 👕)

🧵Here are lessons from my #PhD journey. I hope this inspires and helps other young researchers :) VAK Sustainable Computing Lab @ Northwestern 🌎 💚 Northwestern Northwestern Engineering Georgia Tech Computing Georgia Tech School of Interactive Computing

Proud to announce that I’ll be joining the HARVI Lab as a PhD student this fall! I’m thrilled to work with my advisor, Heather Culbertson, and can’t wait for the exciting years ahead!

AxLab - Actuated Experience Lab will present 3 PAPERS (talk+demo) and 2 POSTERS + be part of 1 WORKSHOP at the #UIST2024 conference next week! The image below shows them with detailed schedules! We will post the project details in the next few days. We are so excited to present them at ACM UIST!

🚨#UIST2024 Poster: Towards AI-Infused Shape-Changing UIs 👉💬🪄 What if you could point, gesture, and speak to 'summon' any physical shape? Another exploration by AxLab, in collab with UChicagoCS's 3DL, employs Gen-AI to author shape displays via multimodal interaction. 🧵→

Ken Nakagaki AxLab - Actuated Experience Lab The University of Chicago Text-to-Shape-Display! Look how we make the shape display come alive in AxLab - Actuated Experience Lab! Thanks for all the help from our lab mates and my mom (she enjoys using e-drills though!)

Thanks to everyone who came to AxLab - Actuated Experience Lab's #UIST2024 demos! We immensely enjoyed showing our demos to the ACM UIST community! (Special thanks to Anup, who couldn't make the conf but significantly contributed to 2 papers, & Chi, who operated the shape display from Chicago!)

Michael Qian + Jesse Gao gave a transformative talk, especially with a LIVE DEMO remotely controlling the shape display in our lab at UChicago using their system! Thanks to Chi for running the hardware at AxLab - Actuated Experience Lab!

The sense of touch is innately private, but what if it could be shared across individuals? We at Stanford VR studied shared body sensations in VR and found that sharing them increases body illusion and empathy toward others and influences social behavior. #ISMAR2024 🔗vhil.stanford.edu/publications/s…

Very excited to share with you what our team at World Labs has been up to! No matter how one theorizes the idea, it's hard to use words to describe the experience of interacting with 3D scenes generated from a photo or a sentence. Hope you enjoy this blog! 🤩❤️🔥