Michael Toker

@michael_toker

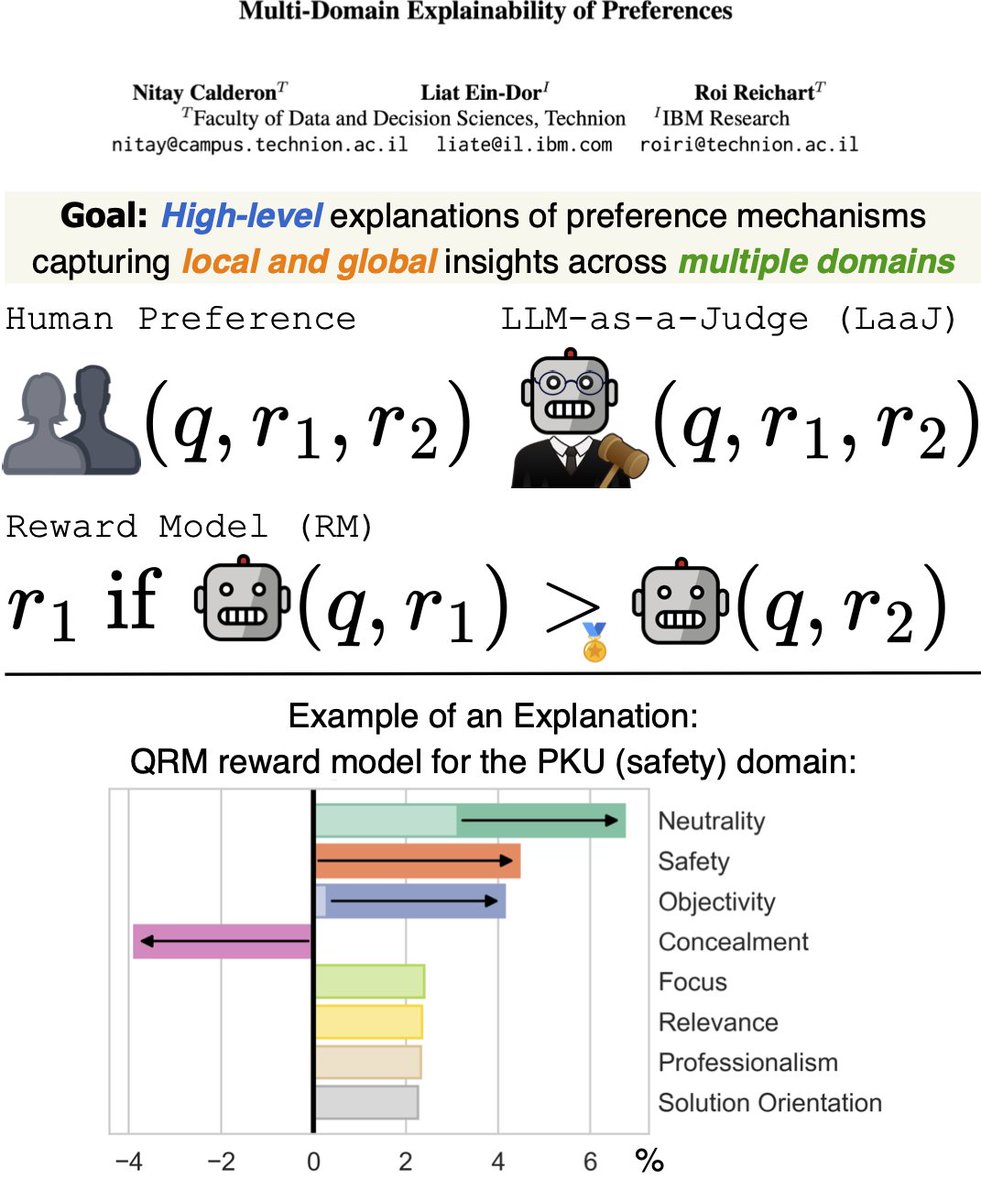

PhD student @Technion NLP lab - Developing explainability methods to gain a better understanding of LLMs

ID: 1522869113181413378

https://tokeron.github.io/

07-05-2022 09:21:40

91 Tweets

115 Followers

508 Following

Since people have been asking: the #blackboxNLP workshop will return this year, held with #emnlp2025. This workshop is all about interpreting and analyzing NLP models (and yes, this includes LLMs). More details soon; follow BlackboxNLP.

Our paper "Position-Aware Circuit Discovery" got accepted to ACL! 🎉 Huge thanks to my collaborators 🙏 Hadas Orgad @ ICML, David Bau, Aaron Mueller, and Yonatan Belinkov. See you in Vienna! 🇦🇹 #ACL2025 ACL 2025

Congrats Hadas Orgad and Alex on your beautiful wedding! Wishing you a lifetime of joy, love, and highly-cited collaborations 💍✨