Michael Hahn

@mhahn29

Professor at Saarland University

@LstSaar @SIC_Saar. Previously PhD at Stanford @stanfordnlp. Machine learning, language, and cognitive science.

ID: 609965358

https://lacoco-lab.github.io/home/

16-06-2012 10:35:33

174 Tweets

975 Followers

763 Following

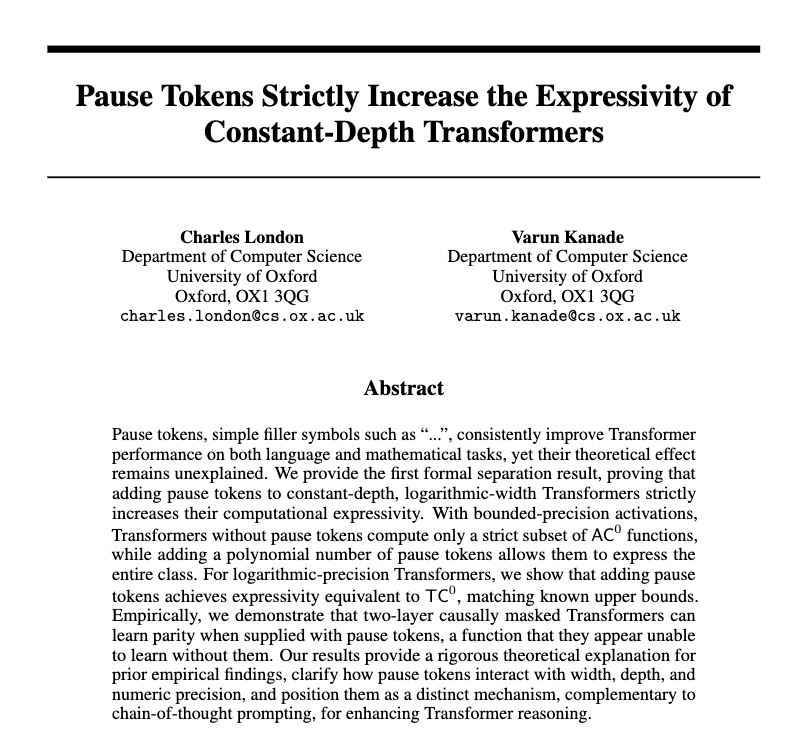

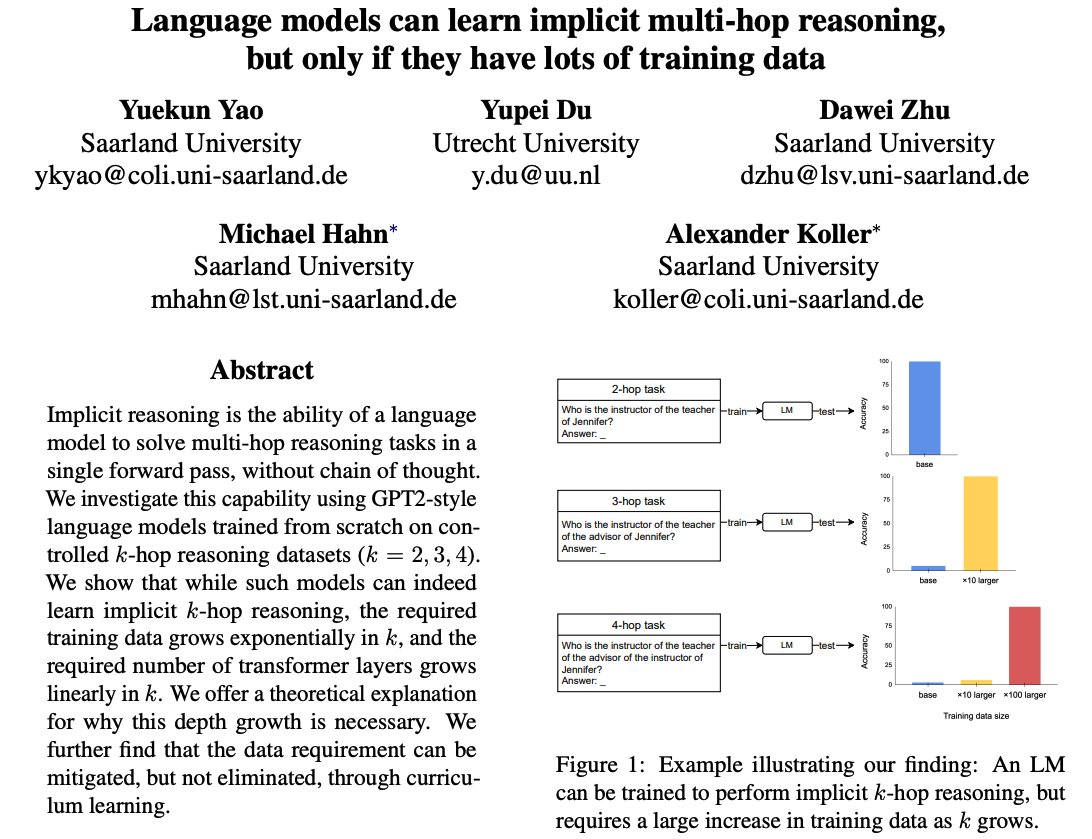

Padding a transformer’s input with blank tokens (...) is a simple form of test-time compute. Can it increase the computational power of LLMs? 👀 New work with Ashish Sabharwal addresses this with *exact characterizations* of the expressive power of transformers with padding 🧵

A key hypothesis in the history of linguistics is that different constructions share underlying structure. We take advantage of recent advances in mechanistic interpretability to test this hypothesis in Language Models. New work with Kyle Mahowald and Christopher Potts! 🧵👇

1/9 Thrilled to share our recent theoretical paper (with Griffiths Computational Cognitive Science Lab) on human belief updating, now published in Psychological Review! A quick 🧵: