Mor Geva

@megamor2

ID: 850356925535531009

https://mega002.github.io/ 07-04-2017 14:37:44

450 Tweets

1.1K Followers

509 Following

Hi ho! New work: arxiv.org/pdf/2503.14481 With amazing collabs Jacob Eisenstein Reza Aghajani Adam Fisch dheeru dua Fantine Huot ✈️ ICLR 25 Mirella Lapata Vicky Zayats Some things are easier to learn in a social setting. We show agents can learn to faithfully express their beliefs (along... 1/3

🎉 Our Actionable Interpretability workshop has been accepted to #ICML2025! 🎉 >> Follow Actionable Interpretability Workshop ICML2025 Tal Haklay Anja Reusch Marius Mosbach Sarah Wiegreffe Ian Tenney Mor Geva Paper submission deadline: May 9th!

🚨 Call for Papers is Out! The First Workshop on 𝐀𝐜𝐭𝐢𝐨𝐧𝐚𝐛𝐥𝐞 𝐈𝐧𝐭𝐞𝐫𝐩𝐫𝐞𝐭𝐚𝐛𝐢𝐥𝐢𝐭𝐲 will be held at ICML 2025 in Vancouver! 📅 Submission Deadline: May 9 Follow us >> Actionable Interpretability Workshop ICML2025 🧠Topics of interest include: 👇

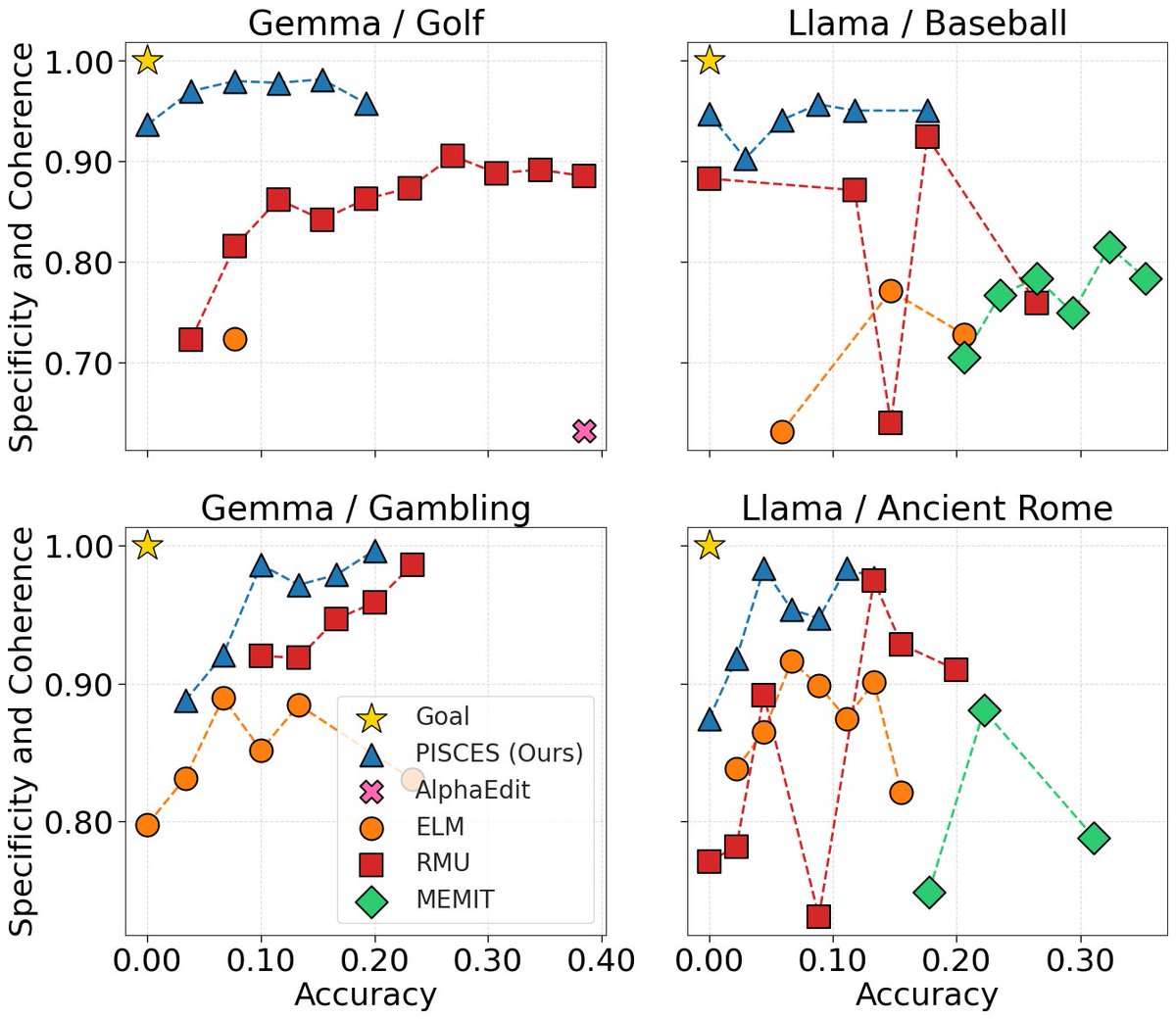

Removing knowledge from LLMs is HARD. Yoav Gur Arieh proposes a powerful approach that disentangles the MLP parameters to edit them in high resolution and remove target concepts from the model. Check it out!