Meaning Alignment Institute

@meaningaligned

The Meaning Alignment Institute is a research organization with the goal of ensuring human flourishing in the age of AGI.

ID: 1323223144522489856

https://meaningalignment.org/ 02-11-2020 11:19:49

40 Tweets

1.1K Followers

17 Following

Happy Friday! Check out our latest episode with Joe Edelman of Meaning Alignment Institute! ▪️Simplecast: bit.ly/xCsJE23 ▪️Apple Podcasts: apple.co/49nWbNi ▪️Spotify: spoti.fi/3B44x08 🎙️ Can AI shape our moral decisions? In the latest RadicalxChange(s) ep, Matt & Joe

7/ Listen to the latest episode of RadicalxChange(s) with Joe Edelman 🥞, Co-Founder of Meaning Alignment Institute. Check out his thoroughly informative and engaging discussion with ᴍᴀᴛᴛ ᴘʀᴇᴡɪᴛᴛ. apple.co/49nWbNi

New paper: What happens once AIs make humans obsolete? Even without AIs seeking power, we argue that competitive pressures will fully erode human influence and values. gradual-disempowerment.ai with Jan Kulveit, Raymond Douglas, Nora Ammann, Deger Turan, David Krueger 🧵

yay!!!!! a concrete proposal by Meaning Alignment Institute for how we can re-align markets with what people really care about, using 'market intermediaries'. more to come soon 😈

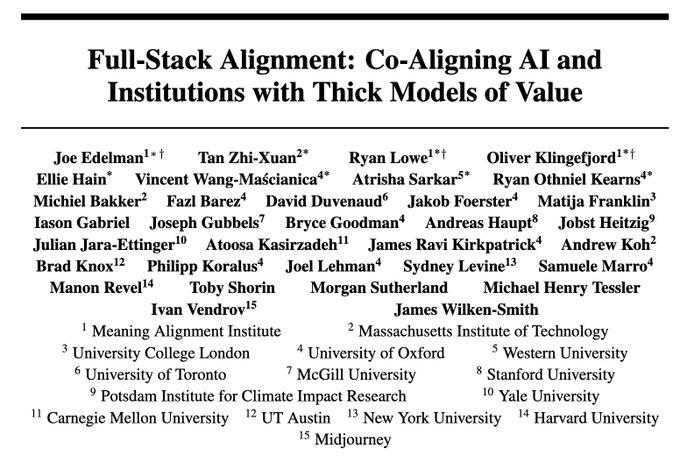

Check out this great new initiative + paper led by Ryan Lowe 🥞, Joe Edelman 🥞, xuan (ɕɥɛn / sh-yen), Oliver Klingefjord 🥞 & the fine folks at Meaning Alignment Institute! Using rich representations of value we aim to make headway on some of the most pressing AI alignment challenges! See: full-stack-alignment.ai

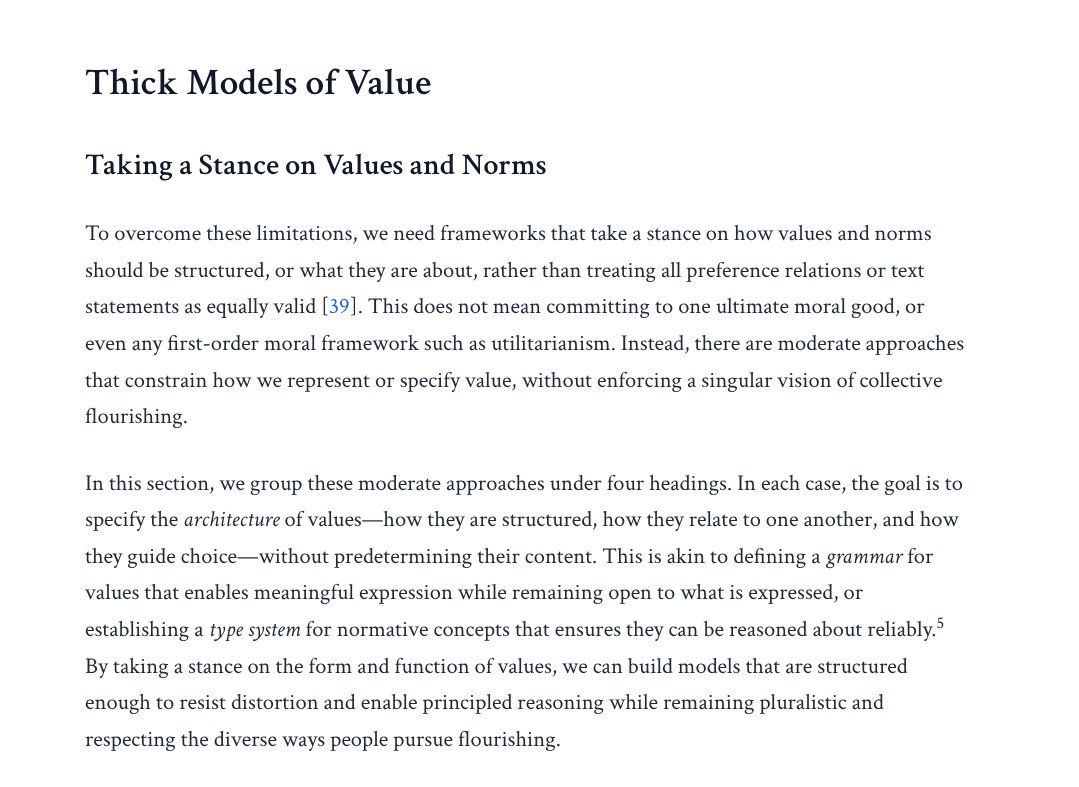

In 2017, I was working to change FB News Feed's recommender to use “thick models of value” (per the paper we just released). Mark Zuckerberg even promised he'd make Facebook “Time Well Spent”. That effort was thwarted by the (1) market dynamics of the attention economy, (2) the US

I guess now is also a good time to announce that I've officially joined Meaning Alignment Institute!! I'll be working on field building for full-stack alignment -- helping nurture this effort into a research community with excellent vibes that gets shit done weeeeeeeeeee 🚀🚀

Ryan Lowe 🥞 of Meaning Alignment Institute spoke on "Co-Aligning AI and Institutions". Their “Full-stack Alignment” work argues that alignment strategy needs to consider the institutions in which AI is developed and deployed. paper: full-stack-alignment.ai video: youtube.com/watch?v=8AUDmo…