Michael Dorkenwald

@mdorkenw

PhD student @UvA_Amsterdam @ELLISforEurope working on SSL, Vision&Language, Learning from Videos | Prev. intern @awscloud

ID: 1251254493599141888

http://mdorkenwald.com 17-04-2020 21:01:39

107 Tweets

271 Followers

343 Following

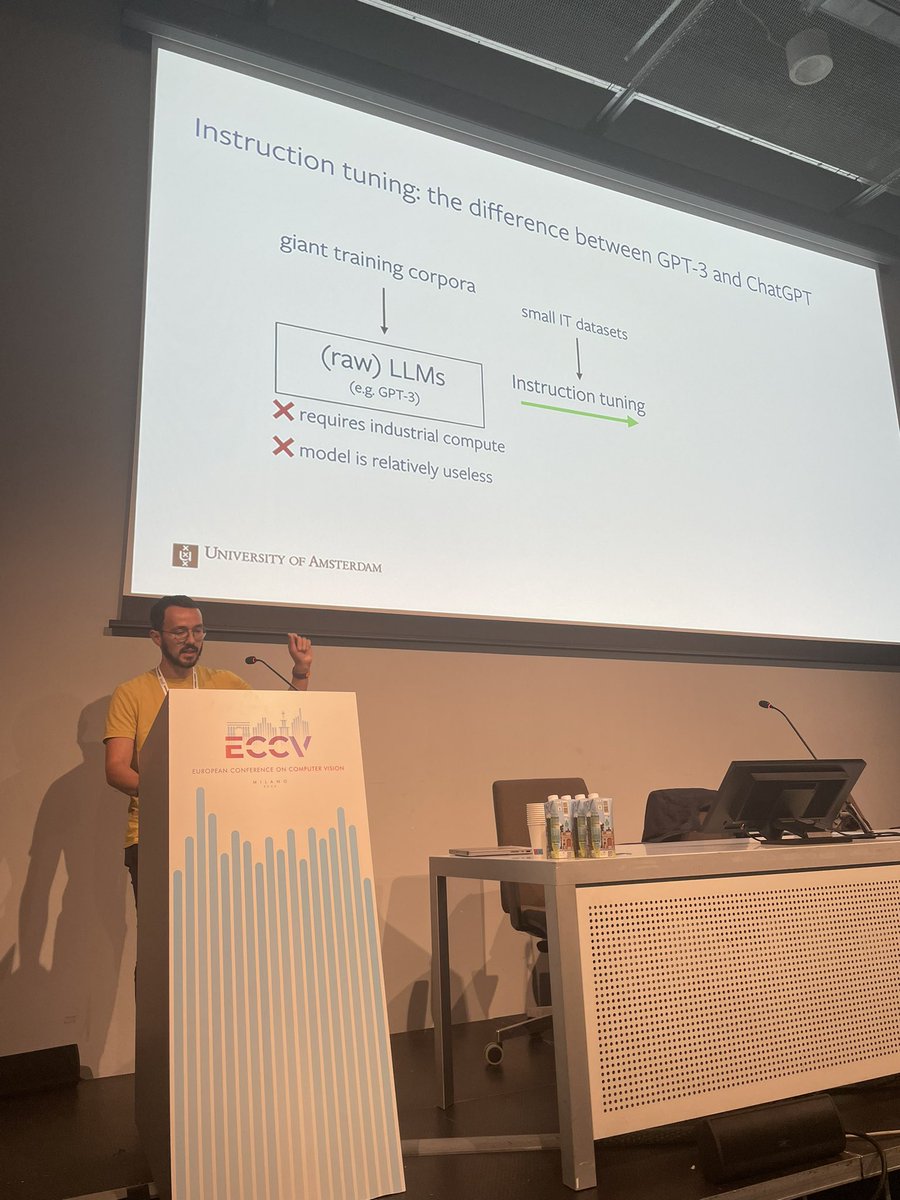

Michael Dorkenwald European Conference on Computer Vision #ECCV2026 Ishan Misra Oriane Siméoni Xinlei Chen Olivier Hénaff Yuki Yutong Bai Yuki now talking on vision foundation models with “academic compute” 🎉

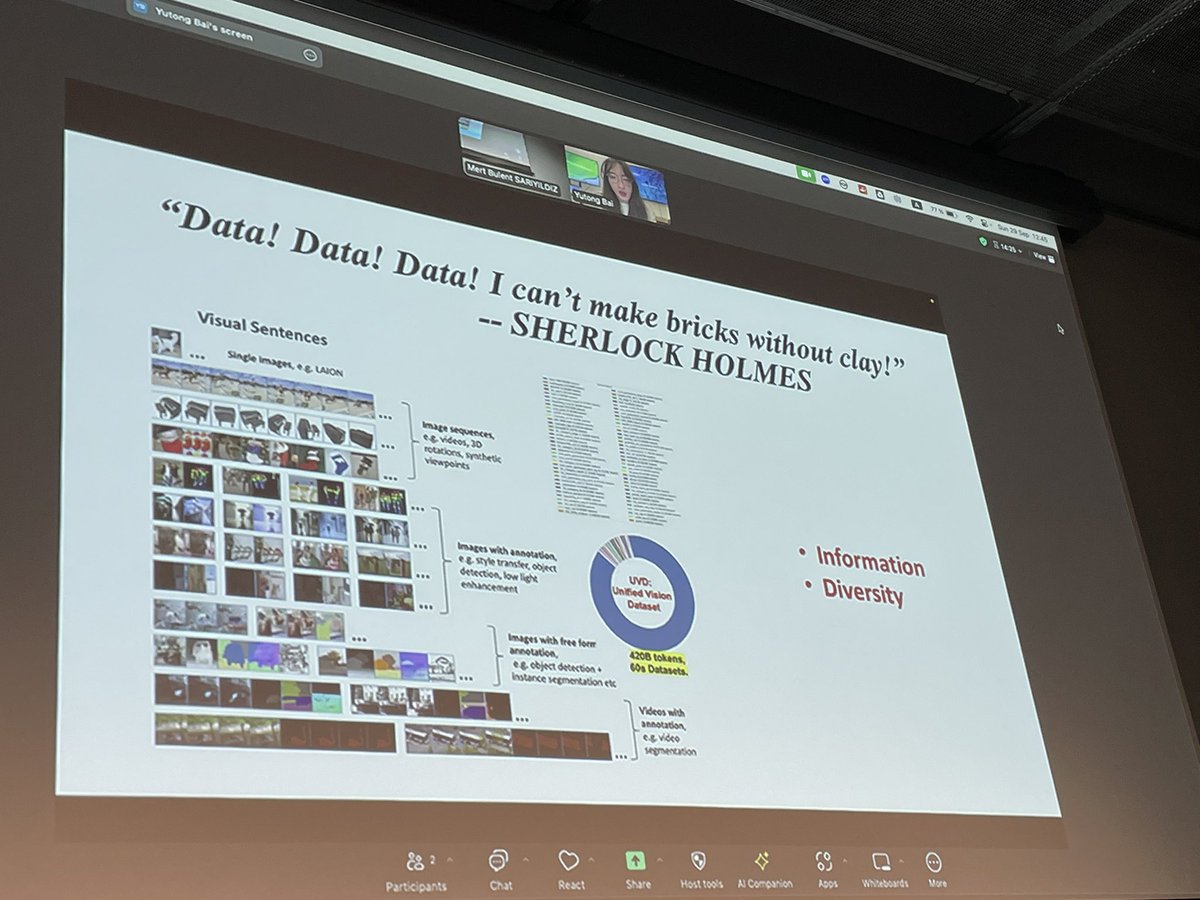

Michael Dorkenwald European Conference on Computer Vision #ECCV2026 Ishan Misra Oriane Siméoni Xinlei Chen Olivier Hénaff Yuki Yutong Bai Final talk of the workshop by Yutong Bai on bottom up visual learning as for SSL 🎊

Finishing up the workshop with an amazing talk about sequential visual modeling by Yutong Bai 🔥

Are you at #ECCV2024 and excited about building vision foundational models using videos? Join our tutorial tomorrow morning, 30th Sept., at 09:00 in Amber 7+8. European Conference on Computer Vision #ECCV2026

Happening later this MORNING at #ECCV2024! Jason Yu Tristan Aumentado-Armstrong Fereshteh Forghani Marcus Brubaker are you ready? 💪 Poster 235: Tue 1 Oct 10:30 am CEST - 12:30pm CEST

🚀 Excited to present SIGMA at European Conference on Computer Vision #ECCV2026 ! 🎉 We upgrade VideoMAE with Sinkhorn-Knopp on patch-level embeddings, pushing reconstruction to more semantic features. With Michael Dorkenwald. Let’s connect at today's poster session at 4:30 PM, poster number 256, or send us a DM.

Excited to announce that today I'm starting my new position at Technische Universität Nürnberg as a full Professor 🎉. I thank everyone who has helped me to get to this point, you're all the best! Our lab is called FunAI Lab, where we strive to put the fun into fundamental research. 😎 Let's go!

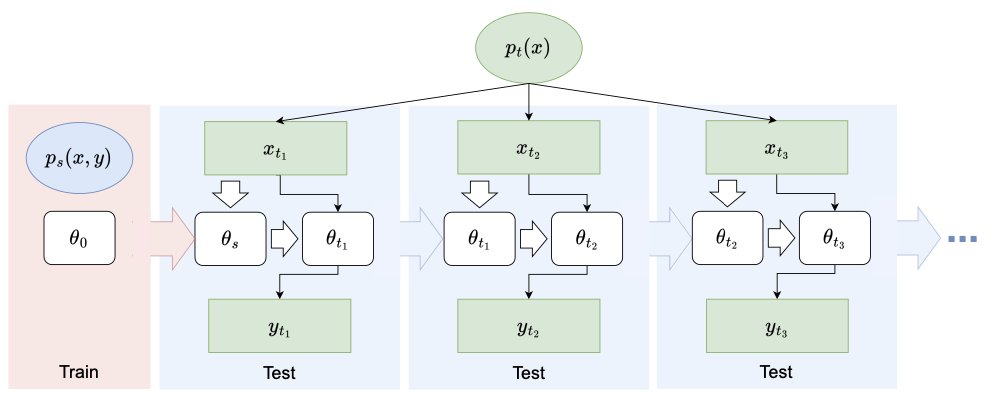

📢📢 Beyond Model Adaptation at Test Time: A Survey by Zehao Xiao. TL;DR: we provide a comprehensive and systematic review on test-time adaptation, covering more than 400 recent papers 💯💯💯💯 🤩 #CVPR2025 #ICLR2025 arxiv.org/abs/2411.03687