May Fung

@may_f1_

Assistant Professor, Hong Kong University of Science and Technology CSE 💻

Human-Centric Trustworthy AI/ML/NLP | Reasoning and Agents

ID: 1188626449911160834

https://mayrfung.github.io/ 28-10-2019 01:20:38

246 Tweets

1.1K Followers

488 Following

The decision notification letters have been sent! 🎉 We sincerely thank all authors and reviewers for their valuable contributions to this workshop. Kudos to our organizing committee, advisors, and support team for their incredible efforts: Guohao Li 🐫, May Fung (hiring postdocs), and Qingyun Wang

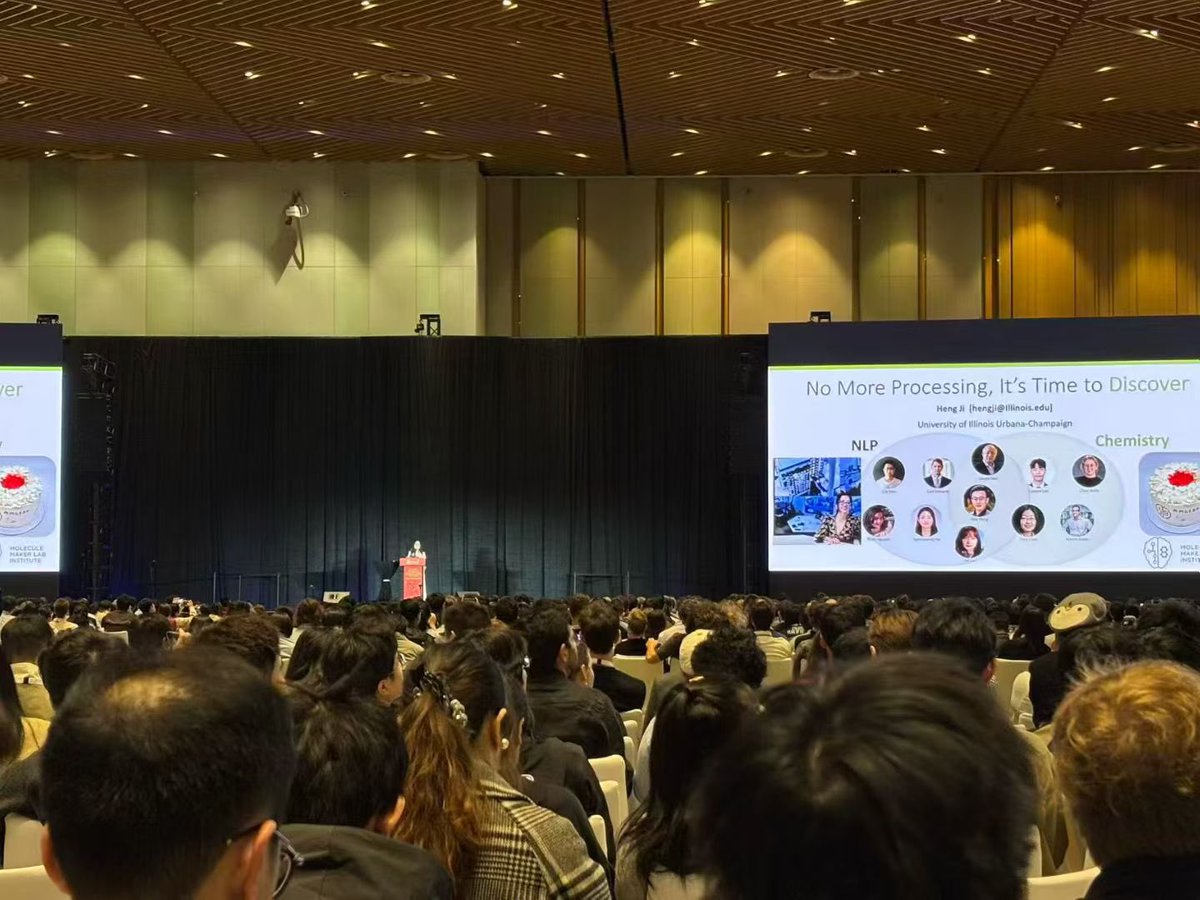

#EMNLP Keynote by Heng Ji: No more Processing. Time to Discover! AI for Science is just so exciting! Let us make LLMs discover like true scientists: Observe → Think → Propose and Verify (A pity to miss the talk. Photo from May Fung (hiring postdocs), EMNLP 2025.)

Happy to be with many of my academic grandchildren from HKUST, and my PhD student Cheng Qian @ EMNLP2025. So proud of Prof. May Fung (hiring postdocs) and the awesome RenAI lab she has built: renai-lab.github.io

Diffusion language models are making a splash (again)! To learn more about this fascinating topic, check out ⏩ my video tutorial (and references within): youtu.be/8BTOoc0yDVA ⏩ discrete diffusion reading group: Discrete Diffusion Reading Group