Maurice Weiler

@maurice_weiler

AI researcher with a focus on geometric DL and equivariant CNNs. PhD with Max Welling. Master's degree in physics.

ID: 955017329863221248

https://maurice-weiler.gitlab.io/ 21-01-2018 10:00:49

760 Tweets

3.3K Followers

1.1K Following

Does equivariance matter when you have lots of data and compute? In a new paper with Sönke Behrends, Pim de Haan, and Taco Cohen, we collect some evidence. arxiv.org/abs/2410.23179 1/7

Generating cat videos is nice, but what if you could tackle real scientific problems with the same methods? 🧪🌌 Introducing The Well: 16 datasets (15TB) for Machine Learning, from astrophysics to fluid dynamics and biology. 🐙: github.com/PolymathicAI/t… 📜: openreview.net/pdf?id=00Sx577…

Everything you love about generative models — now powered by real physics! Announcing the Genesis project — after a 24-month large-scale research collaboration involving over 20 research labs — a generative physics engine able to generate 4D dynamical worlds powered by a physics

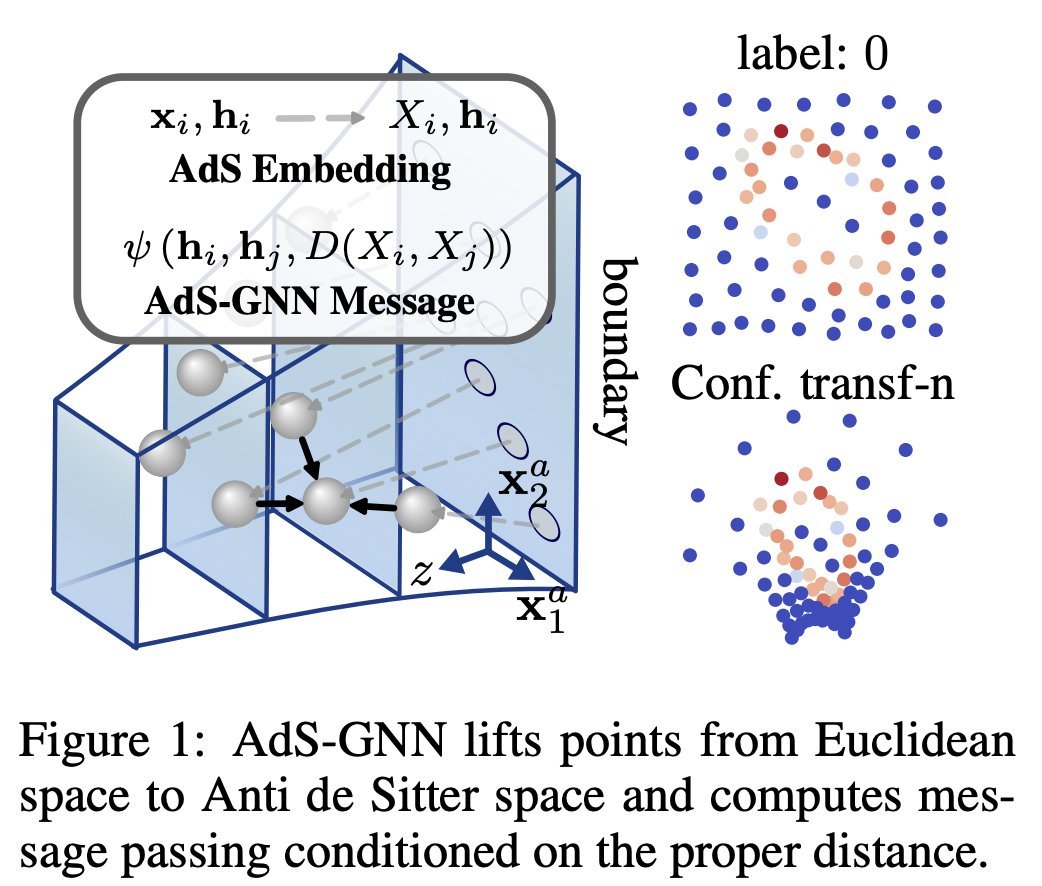

The arxiv preprint on our conformally equivariant neural network -- named AdS-GNN due to its secret origins in AdS/CFT -- is now out! arxiv.org/abs/2505.12880 🧵explaining it below. Joint work with the amazing team of Max Zhdanov, Erik Bekkers and Patrick Forre.

New preprint! We extend Taco Cohen's theory of equivariant CNNs on homogeneous spaces to the non-linear setting. Beyond convolutions, this covers equivariant attention, implicit kernel MLPs and more general message passing layers. More details in Oscar Carlsson's thread 👇

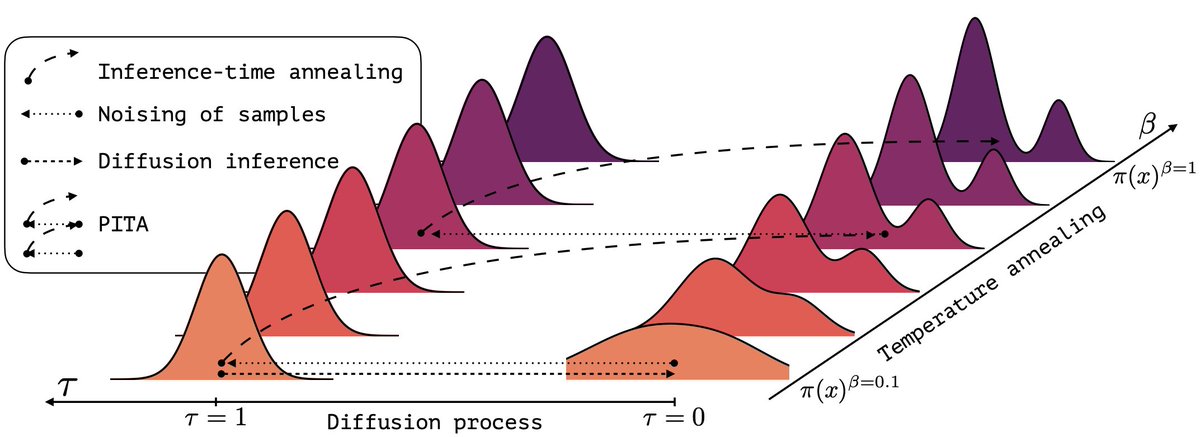

(1/n) Sampling from the Boltzmann density better than Molecular Dynamics (MD)? It is possible with PITA 🫓 Progressive Inference Time Annealing! A spotlight at the GenBio Workshop @ ICML 2025! PITA learns from "hot," easy-to-explore molecular states 🔥 and then cleverly "cools"