Matteo Poggi

@mattpoggi

Tenure-Track Assistant Professor - University of Bologna

ID: 287332799

http://mattpoggi.github.io

24-04-2011 19:48:39

94 Tweets

315 Followers

567 Following

HS-SLAM: Hybrid Representation with Structural Supervision for Improved Dense SLAM
Ziren Gong, Fabio Tosi, Youmin Zhang, Stefano Mattoccia, Matteo Poggi
tl;dr: hash grid+tri-planes+one-blob->hybrid rep.; sample patches of non-local pixels->supervision; BA
arxiv.org/abs/2503.21778
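The "one-blob" in the tl;dr names an input encoding introduced in Müller et al.'s Neural Importance Sampling. A minimal sketch, assuming a simplified variant: each scalar in [0, 1] is mapped to k values by evaluating a Gaussian with sigma = 1/k, centred at the scalar, at the k bin centres (the original discretizes the kernel over bins). The function name `one_blob` is hypothetical, and the sketch does not reproduce HS-SLAM's hash-grid/tri-plane hybrid or how the encodings are combined.

```python
import numpy as np


def one_blob(s: np.ndarray, n_bins: int = 16) -> np.ndarray:
    """Hypothetical, simplified one-blob encoding.

    Each scalar in [0, 1] becomes n_bins values: a Gaussian kernel with
    sigma = 1 / n_bins centred at the scalar, evaluated at the bin centres.
    """
    s = np.asarray(s, dtype=np.float64)[..., None]      # (..., 1)
    centres = (np.arange(n_bins) + 0.5) / n_bins        # (n_bins,)
    sigma = 1.0 / n_bins
    return np.exp(-0.5 * ((s - centres) / sigma) ** 2)  # (..., n_bins)
```

For example, `one_blob(np.array([0.5]), 8)` yields a (1, 8) array whose two middle entries are equal and largest, so nearby inputs share active bins and the encoding varies smoothly with the input.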

ToF-Splatting: Dense SLAM using Sparse Time-of-Flight Depth and Multi-Frame Integration
Andrea Conti, Matteo Poggi, Valerio Cambareri, Martin Oswald, Stefano Mattoccia
arxiv.org/abs/2504.16545

🍸🍸 The TRICKY25 challenge: "Monocular Depth from Images of Specular and Transparent Surfaces" is live! 🍸🍸
Hosted at the 3rd TRICKY workshop at #ICCV2025, with exciting speakers: Anton Obukhov, Andrea Tagliasacchi 🇨🇦, He Wang.
Site: sites.google.com/view/iccv25tri…
Codalab: codalab.lisn.upsaclay.fr/competitions/2…

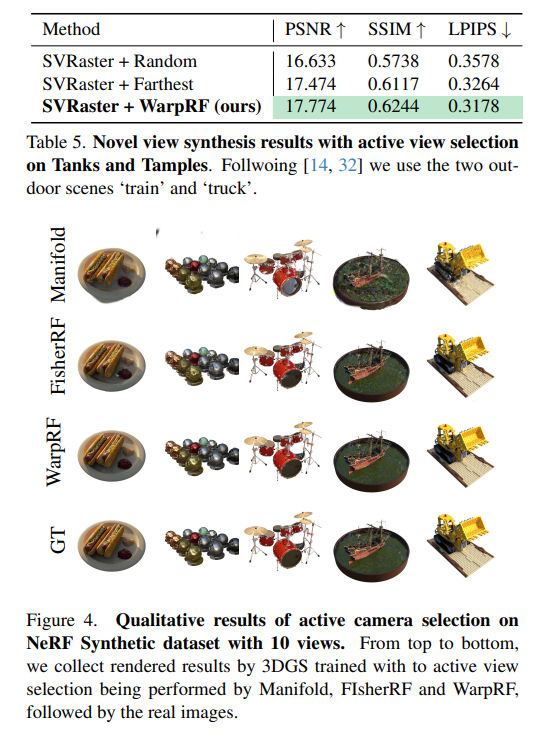

WarpRF: Multi-View Consistency for Training-Free Uncertainty Quantification and Applications in Radiance Fields
Sadra Safadoust, Fabio Tosi, Fatma Güney, Matteo Poggi
tl;dr: rendered depth->reprojection->uncertainty->next best view->later GS
arxiv.org/abs/2506.22433
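The "rendered depth->reprojection->uncertainty" chain can be illustrated with a generic backward-warping consistency check. This is a minimal sketch under stated assumptions, not WarpRF's implementation: one shared pinhole intrinsic matrix `K`, a known 4x4 target-to-source transform `T_s_t`, nearest-neighbour lookup, and mean absolute color difference as the disagreement score. The helper name `warp_consistency` is hypothetical.

```python
import numpy as np


def warp_consistency(depth_t, img_t, img_s, K, T_s_t):
    """Hypothetical helper: per-pixel color disagreement between a target view
    and a source view warped into it through the target depth map.

    depth_t: (H, W) depth rendered for the target view
    img_t, img_s: (H, W, C) images of the target and source views
    K: (3, 3) shared pinhole intrinsics
    T_s_t: (4, 4) rigid transform from target to source camera coordinates
    Returns (err, valid): (H, W) mean absolute color difference and a mask of
    pixels that land inside the source image in front of the camera.
    """
    H, W = depth_t.shape
    u, v = np.meshgrid(np.arange(W), np.arange(H))  # u: column, v: row
    pix = np.stack([u.ravel(), v.ravel(), np.ones(H * W)]).astype(np.float64)

    # Back-project every target pixel to 3D, then move it into the source camera.
    pts_t = (np.linalg.inv(K) @ pix) * depth_t.ravel()
    pts_s = T_s_t[:3, :3] @ pts_t + T_s_t[:3, 3:4]

    # Project into the source image; guard the division for points behind it.
    z = pts_s[2]
    in_front = z > 1e-6
    proj = K @ (pts_s / np.where(in_front, z, 1.0))
    us = np.round(proj[0]).astype(int)
    vs = np.round(proj[1]).astype(int)
    valid = in_front & (us >= 0) & (us < W) & (vs >= 0) & (vs < H)

    # Compare the warped source colors with the target colors where valid.
    err = np.zeros(H * W)
    tgt = img_t.reshape(H * W, -1)
    err[valid] = np.abs(img_s[vs[valid], us[valid]] - tgt[valid]).mean(axis=-1)
    return err.reshape(H, W), valid.reshape(H, W)
```

Pixels with high `err` are those where views disagree once geometry is accounted for, which is the kind of signal a training-free uncertainty estimate could aggregate across several source views before ranking candidate next-best views.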

DINO-SLAM: DINO-informed RGB-D SLAM for Neural Implicit and Explicit Representations
Ziren Gong, Xiaohan Li, Fabio Tosi, Youmin Zhang, Stefano Mattoccia, Jun Wu, Matteo Poggi
tl;dr: DINO enhances NeRF/3DGS SLAM
arxiv.org/abs/2507.19474

Ov3R: Open-Vocabulary Semantic 3D Reconstruction from RGB Videos
Ziren Gong, Xiaohan Li, Fabio Tosi, Jiawei Han, Stefano Mattoccia, Jianfei Cai, Matteo Poggi
tl;dr: CLIP->SLAM3R; CLIP+DINO+CG3D->2D-3D fused descriptor
arxiv.org/abs/2507.22052