Matthew Yang

@matthewyryang

MSML student @ CMU

ID: 1820131135478808576

04-08-2024 16:14:41

9 Tweets

5 Followers

76 Following

Oh my goodness. GPT-o1 got a perfect score on my Carnegie Mellon University undergraduate #math exam, taking less than a minute to solve each problem. I freshly design non-standard problems for all of my exams, and they are open-book, open-notes. (Problems included below, with links to

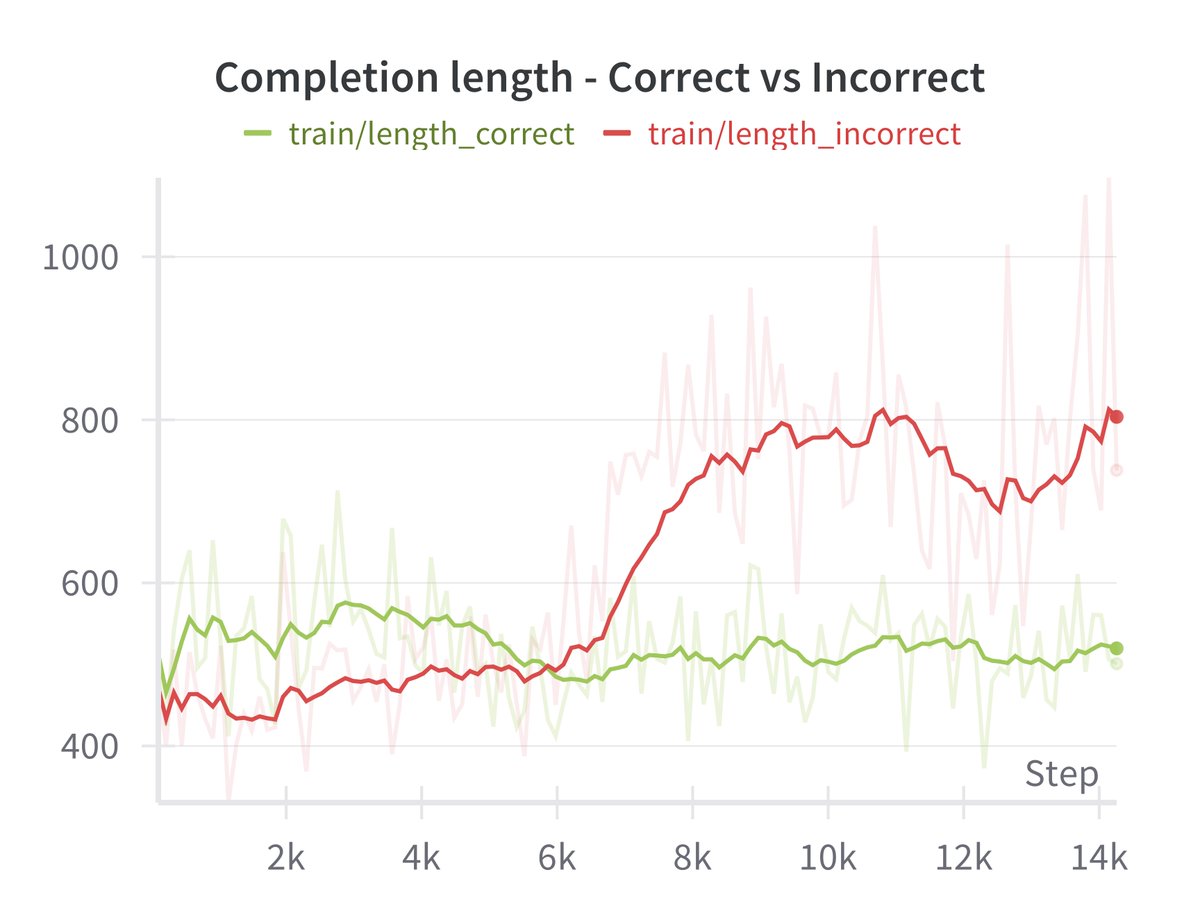

Our view on test-time scaling has been to train models to discover algos that enable them to solve harder problems. Amrith Setlur & Matthew Yang's new work e3 shows how RL done with this view produces best <2B LLM on math that extrapolates beyond training budget. 🧵⬇️

Yuxiao Qu (@quyuxiao):

🚨 NEW PAPER: "Optimizing Test-Time Compute via Meta Reinforcement Fine-Tuning"!

🤔 With all these long-reasoning LLMs, what are we actually optimizing for? Length penalties? Token budgets? We needed a better way to think about it!

Website: cohenqu.github.io/mrt.github.io/

🧵[1/9]

![Yuxiao Qu (@quyuxiao) on Twitter photo](https://pbs.twimg.com/media/GlxrCxiaQAACmUS.jpg)