Manu Halvagal

@manu_halvagal

Computational neuroscience PhD student with @hisspikeness learning about learning @FMIscience. Climbs rocks and obsesses over spherical brains in a vacuum.

ID: 1069043151149645825

https://mshalvagal.github.io

02-12-2018 01:38:43

433 Tweets

308 Followers

765 Following

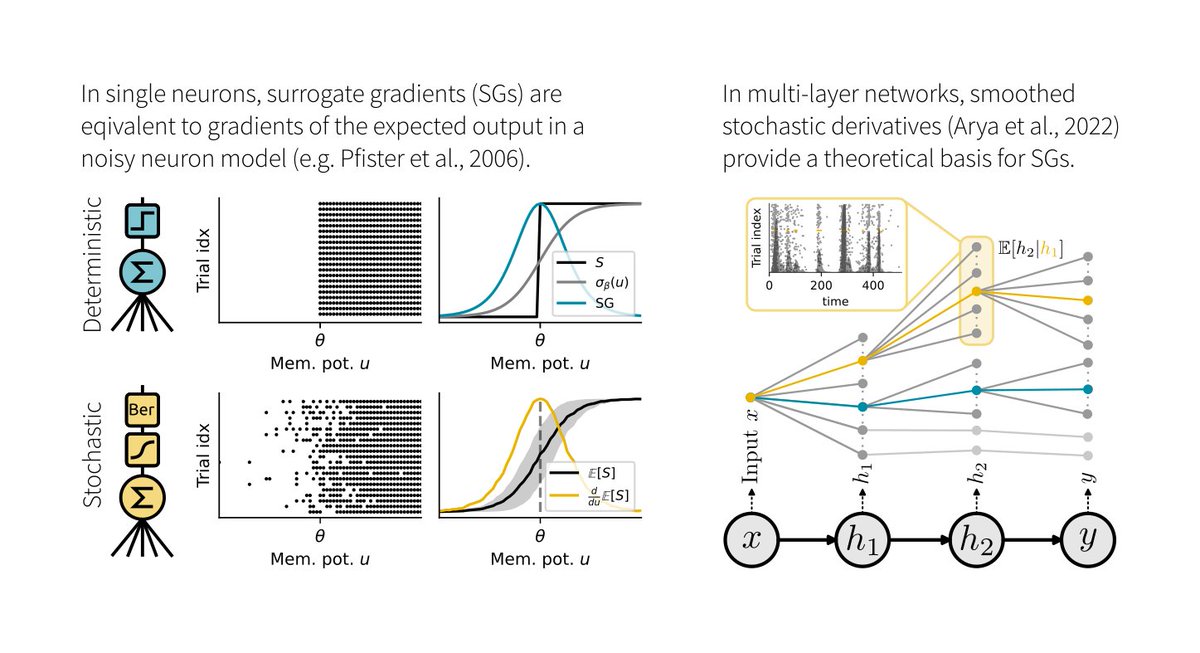

1/6 Surrogate gradients (SGs) are empirically successful at training spiking neural networks (SNNs). But why do they work so well, and what is their theoretical basis? In our new preprint led by Julia Gygax, we provide the answers: arxiv.org/abs/2404.14964

How can we understand the way sequence models choose to combine info in their context to make good predictions? In our new ICML Conference workshop paper, Adam J. Eisen, FieteGroup and I provide a new theoretical lens from dynamical systems: delay embeddings arxiv.org/abs/2406.11993

Observing the birth of an abstract representation with disentangled variables in human HPC!! Now published in Nature. Great collaboration with Hristos Courellis, Juri Minxha, @amamelak, Ueli Rutishauser and others

Attention is awesome! So we (Jordan Lei; Ari Benjamin; ML Group, TU Berlin; Kording Lab 🦖; and #NeuroAi) built a biologically inspired model of visual attention and binding that can simultaneously learn and perform multiple attention tasks 🧠 Pre-print: doi.org/10.1101/2024.0… A 🧵...

How can we train biophysical neuron models on data or tasks? We built Jaxley, a differentiable, GPU-based biophysics simulator, which makes this possible even when models have thousands of parameters! Led by Michael Deistler, in collaboration with @CellTypist and @ppjgoncalves: biorxiv.org/content/10.110…

In the physical world, almost all information is transmitted through traveling waves -- why should it be any different in your neural network? Super excited to share recent work with the brilliant Mozes Jacobs: "Traveling Waves Integrate Spatial Information Through Time" 1/14

Happy to share that I successfully defended my PhD!! Thanks to everyone who’s helped me get through this journey :) Especially my advisor Friedemann Zenke and everyone in the lab. It’s been a great few years!

I've spent much of my PhD thinking about E/I balance, and our latest preprint represents the culmination of that journey. Huge thanks to Friedemann Zenke for guiding me. Looking forward to your thoughts & comments.

What is "Life"...? In a new preprint with Michael Levin, Karina Kofman, and Blaise Agüera (@blaiseaguera.bsky.social), we used LLMs to map the semantic space emerging from 68 expert-provided definitions for "Life". Here's what we did, what we found, and what it means for what lives... 1/n