Michael Skarlinski

@m_skarlinski

ML/Engineering enthusiast and Member of the Technical Staff @ FutureHouse

ID: 1787551300844232704

06-05-2024 18:33:44

18 Tweets

179 Followers

5 Following

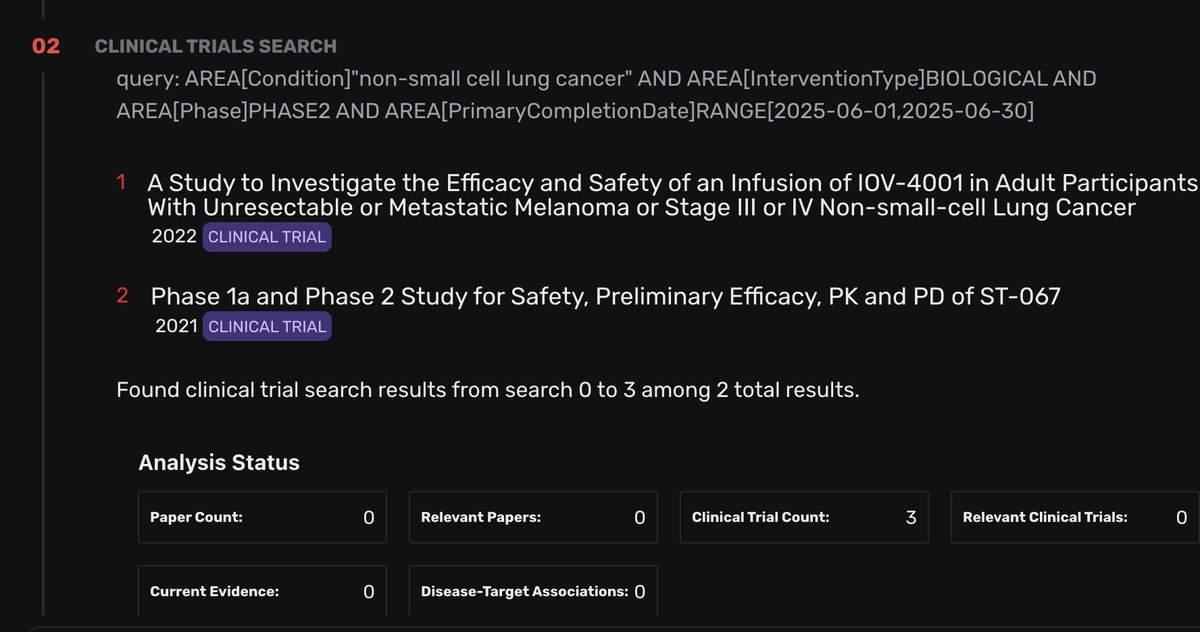

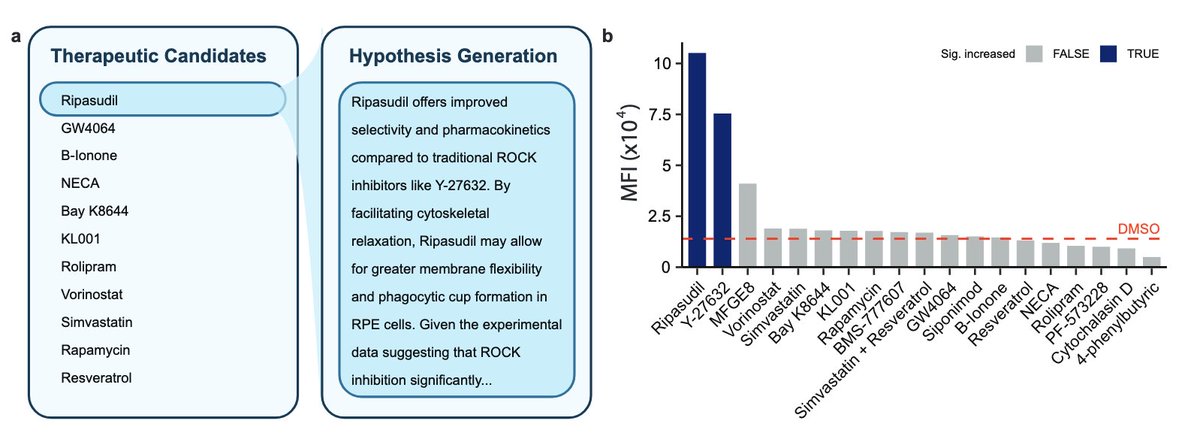

A cool detail on our platform -- if you share a task after it finishes, a social media preview will be dynamically generated for each task. Surprisingly tricky feature to get right! Thanks to Tyler Nadolski for working this out. platform.futurehouse.org/trajectories/9…

AI Agenda: The Startup Building an AI Scientist

Why this startup building an AI scientist says we need a “Stargate” program for AI-driven scientific research. Read more from Stephanie Palazzolo 👇 theinformation.com/articles/start…