Michael Eli Sander

@m_e_sander

Research Scientist at Google DeepMind

ID: 1362051327732441088

https://michaelsdr.github.io/ 17-02-2021 14:48:44

213 Tweets

2.2K Followers

191 Following

🎉 New preprint! biorxiv.org/content/10.110… STORIES learns a differentiation potential from spatial transcriptomics profiled at several time points using Fused Gromov-Wasserstein, an extension of Optimal Transport. Gabriel Peyré @LauCan88

After a very constructive back-and-forth with the editors and reviewers of Nature Communications, scConfluence has now been published @LauCan88 Gabriel Peyré! I'll present it this afternoon at the poster session of ECCB2026 Geneva, Switzerland (P296). Published version: nature.com/articles/s4146…

🏆 Didn't get the Physics Nobel Prize this year, but really excited to share that I've been named one of the #FWIS2024 Fondation L'Oréal-UNESCO 🏛️ #Education #Sciences #Culture 🇺🇳 French Young Talents alongside 34 amazing young researchers! This award recognizes my research on deep learning theory #WomenInScience 👩‍💻

☢️ Some news about radioactivity ☢️
- We got a Spotlight at NeurIPS! 🥳 We will be in Vancouver with Pierre Fernandez to present it!
- We have just released our code for radioactivity detection at github.com/facebookresear….

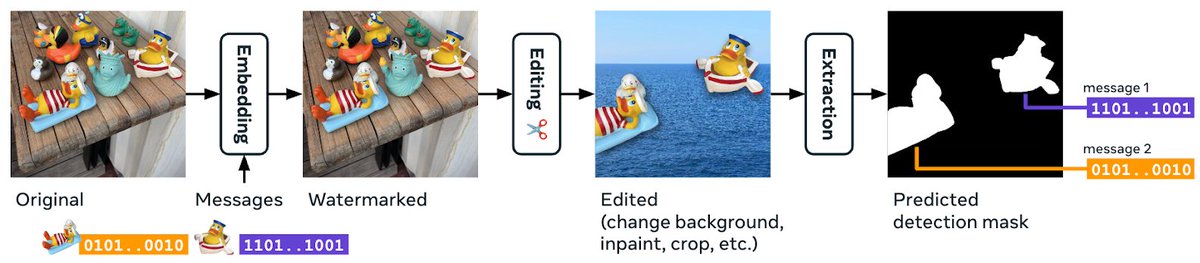

🔒Image watermarking is promising for digital content protection. But images often undergo many modifications—spliced or altered by AI. Today at AI at Meta, we released Watermark Anything that answers not only "where does the image come from," but "what part comes from where." 🧵

Thank you for the opportunity to talk about my research and my experiences! Thanks to my PhD advisors Gabriel Peyré and Rémi Gribonval for your supervision 😊