Luis Ceze

@luisceze

computer architect. marveled by biology. professor @uwcse. ceo @OctoAICloud. venture partner @madronaventures.

ID: 139128649

http://homes.cs.washington.edu/~luisceze/ 01-05-2010 16:43:33

1.1K Tweets

3.3K Followers

2.2K Following

Fine-tuned open-source models are giving the AI giants a run for their money. Matt Shumer, CEO of HyperWrite, and I sat down with @OctoAICloud to talk about the major trends affecting fast-growing AI startups: open source, cost savings, and flexibility. ⏩️ This is

#Llama3 🦙🦙 running fully locally on an iPad without an internet connection. Credits to Ruihang Lai and the team!

It is amazing how cheaply we can run #Llama3 models from AI at Meta: here they are running on a $100 Orange Pi.

Great work, Yilong, Chien-Yu Lin, Zihao Ye, Baris Kasikci, and team!

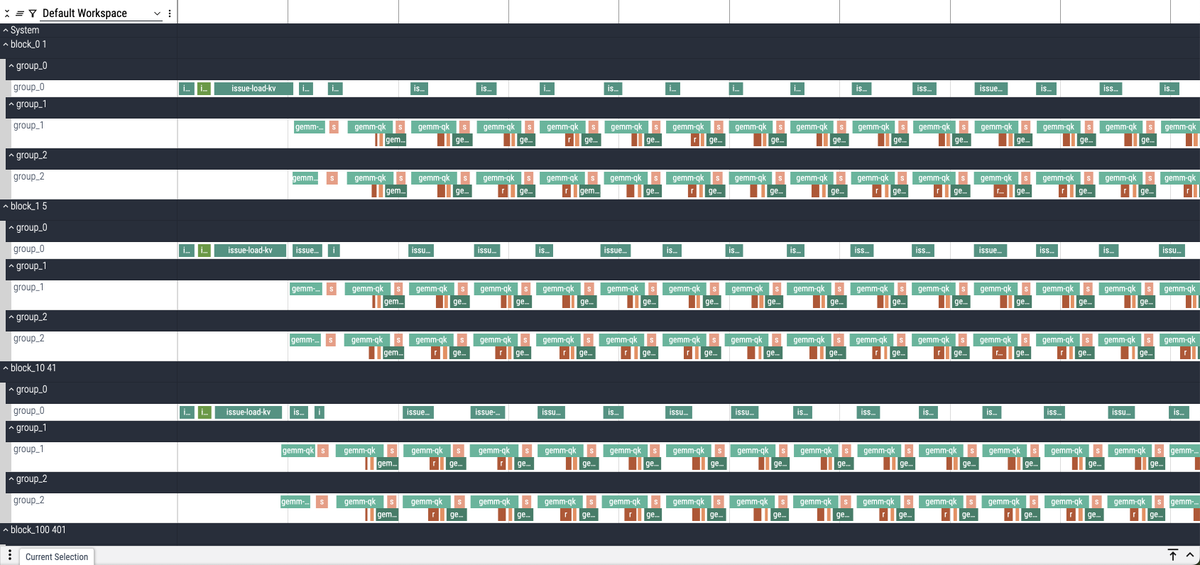

Go Lequn Chen! Great work on making lots of LoRAs cheap to serve. Nice collaboration with Zihao Ye, Arvind Krishnamurthy, and others! #mlsys24 arxiv.org/abs/2310.18547

Huge achievement by the AI at Meta team on launching the Llama 3.1 models! The quality benchmarks look incredible, and our customers are going to be really excited about the whole Llama 3.1 herd. Learn more and try them on @OctoAICloud here: octo.ai/blog/llama-3-1…. 🙏🚀🐙

Fascinating to read this analysis of how deeply telenovelas shape real-world culture. I’m Brazilian :). As a computer scientist, reading TRIBAL by Michael Morris, professor at Columbia University, makes me wonder about culture's impact on AI and its co-evolution with human culture.

Anxhelo Xhebraj, Sean Lee, and Vinod Grover’s work is finally out. Kick the tires and let them know what you think!