LP Morency

@lpmorency

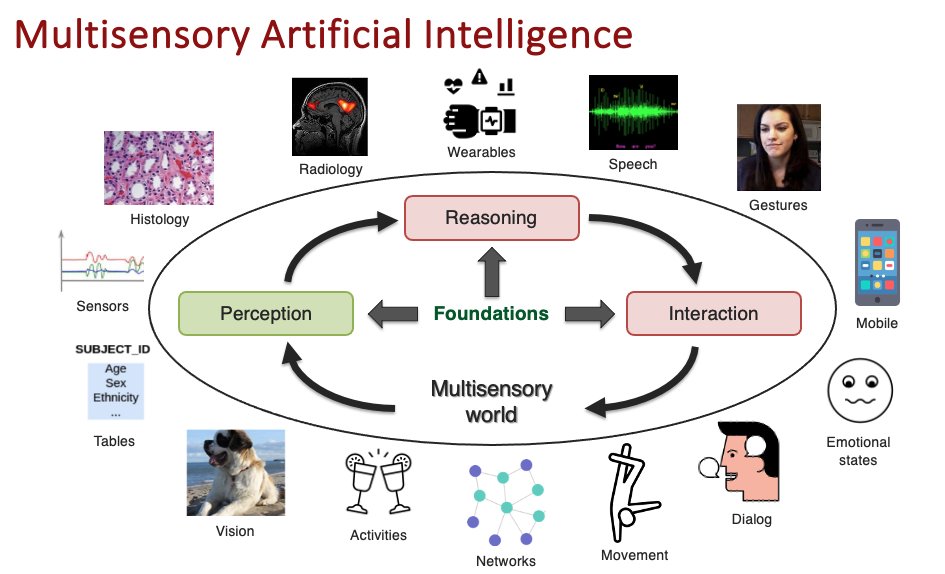

Associate Professor at CMU studying multimodal and Social AI. Ice hockey goalie.

ID: 3201769601

http://multicomp.cs.cmu.edu/ 24-04-2015 17:20:02

76 Tweets

1.1K Followers

21 Following

🔍 Louis-Philippe Morency breaks it down:
1️⃣ Modalities are often linked, statistically or semantically
2️⃣ Statistical links are bottom-up; semantic links are top-down
3️⃣ Relationships can be complex: think dependencies
🧠 From a captivating talk - time to rethink how we

led by Dong Won (Don) Lee, with Chaitanya Ahuja, Sanika Natu, and LP Morency (Language Technologies Institute | @CarnegieMellon, Machine Learning Dept. at Carnegie Mellon). Code and dataset publicly available. Paper: openaccess.thecvf.com/content/ICCV20… Code: github.com/dondongwon/LPM…

Despite the successes of contrastive learning (e.g., CLIP), it has a fundamental limitation: it can only capture *shared* info between modalities and ignores *unique* info. To fix it, a thread for our #NeurIPS2023 paper w/ Zihao Martin James Zou LP Morency Russ Salakhutdinov:

Agreed! Multimodal ML is not just a sub-area of various modalities. LP Morency is one of the researchers who has believed in multimodal for years. Anyone interested in multimodal ML should check out his course on this topic, co-taught with Paul Liang! cmu-multicomp-lab.github.io/mmml-course/fa…

Curious about socially-intelligent AI? Check out our paper on underlying technical challenges, open questions, and opportunities to advance social intelligence in AI agents: Work w/ LP Morency, Paul Liang 📰Paper: arxiv.org/abs/2404.11023 💻Repo: github.com/l-mathur/socia… 🧵1/9

#ICLR2024: Paul Liang is presenting our work on Multimodal Learning Without Labeled Multimodal Data: Guarantees and Applications. Paper: arxiv.org/abs/2306.04539 Code: github.com/pliang279/PID with Chun Kai Ling, Yun Cheng, Alex Obolenskiy, Yudong Liu, Rohan Pandey,

FAIR is hiring across Europe and North America to build the next generation of AI systems. Please apply directly below or reach out! Postdoctoral Researcher: metacareers.com/jobs/380024285… LP Morency Research Scientist and Postdoctoral Researcher positions in developmental AI: