El_Sturm

@lowtour

From Gödel to Lubitsch by way of Bacon, Don de Lillo and Lacan. Not forgetting Rita Hayworth, AI and social dialogue... Stir well

ID: 364588428

30-08-2011 00:43:09

1.1K Tweets

48 Followers

530 Following

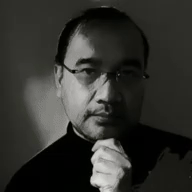

Why LLMs Fail in Back-and-Forth Chats

Beautiful paper from @microsoft and Salesforce AI Research.

Large Language Models (LLMs) are incredibly good at tackling tasks when you give them all the information upfront in one go. Think of asking for a code snippet with all requirements clearly