Ming-Yu Liu

@liu_mingyu

Tweets are my own.

ID: 4475055297

https://mingyuliu.net/ 13-12-2015 23:23:25

959 Tweets

8.8K Followers

489 Following

1/💡New paper from NVIDIA & Tsinghua, International Conference on Machine Learning (ICML) Spotlight! Direct Discriminative Optimization (DDO) enables GAN-style finetuning of diffusion/autoregressive models without extra networks. SOTA achieved on ImageNet-512! Website: research.nvidia.com/labs/dir/ddo/ Code: github.com/NVlabs/DDO

Cosmos-Reason1 has exciting updates 💡 Now it understands physical reality — judging videos as real or fake! Check out the resources👇 Paper: arxiv.org/abs/2503.15558 Huggingface: huggingface.co/nvidia/Cosmos-… Code: github.com/nvidia-cosmos/… Project page: research.nvidia.com/labs/dir/cosmo… (1/n)

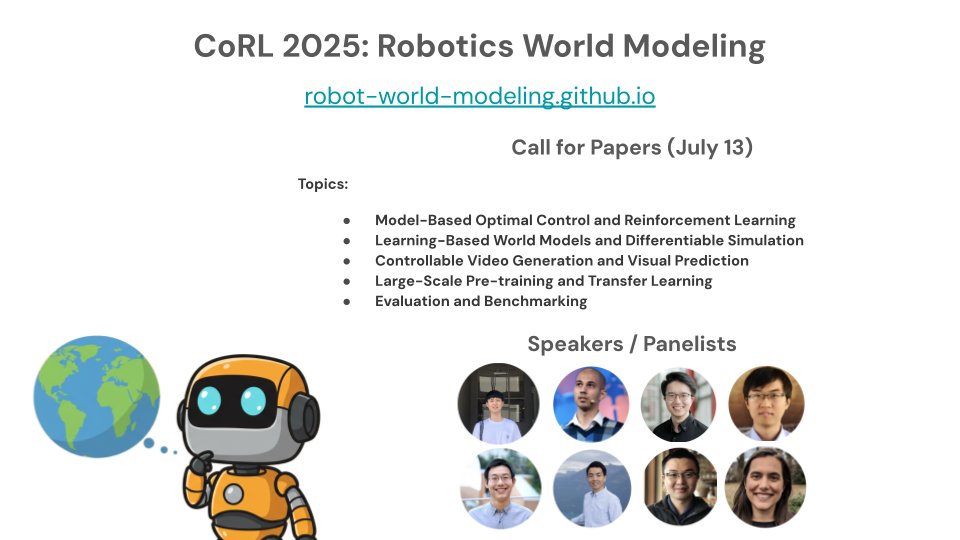

🤖🌎 We are organizing a workshop on Robotics World Modeling at Conference on Robot Learning 2025! We have an excellent group of speakers and panelists, and are inviting you to submit your papers with a July 13 deadline. Website: robot-world-modeling.github.io

Kick off your #OpenUSD Day with a look into the future of robotics and autonomous vehicles. 🤖 Join Ming-Yu Liu as he shares how #NVIDIACosmos world foundation models unlock prediction and reasoning for the next wave of robotics and autonomous vehicles. 📅Wednesday, 8/13 at

In Cosmos, we are hiring Cosmos World Foundation Model builders. If you are interested in building large-scale video foundation models and multimodal LLMs for robots and cars, please send your CV to [email protected] If you have experience in large-scale diffusion models,

[1/N] 🎥 We've made available a powerful spatial AI tool named ViPE: Video Pose Engine, to recover camera motion, intrinsics, and dense metric depth from casual videos! Running at 3–5 FPS, ViPE handles cinematic shots, dashcams, and even 360° panoramas. 🔗 research.nvidia.com/labs/toronto-a…