Liliang Ren

@liliang_ren

Senior Researcher at Microsoft GenAI | UIUC CS PhD graduate | Efficient LLM | NLP | Former Intern @MSFTResearch @Azure @AmazonScience

ID: 1106294591718715392

https://renll.github.io 14-03-2019 20:42:39

101 Tweets

2.2K Followers

455 Following

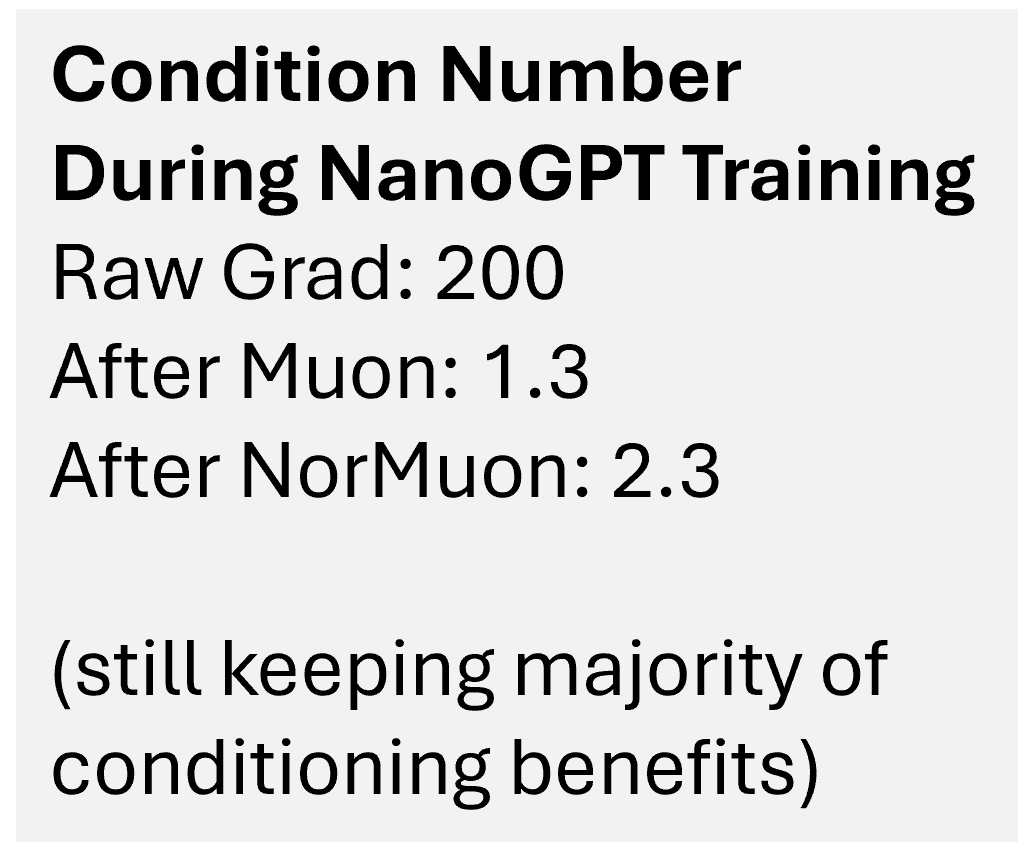

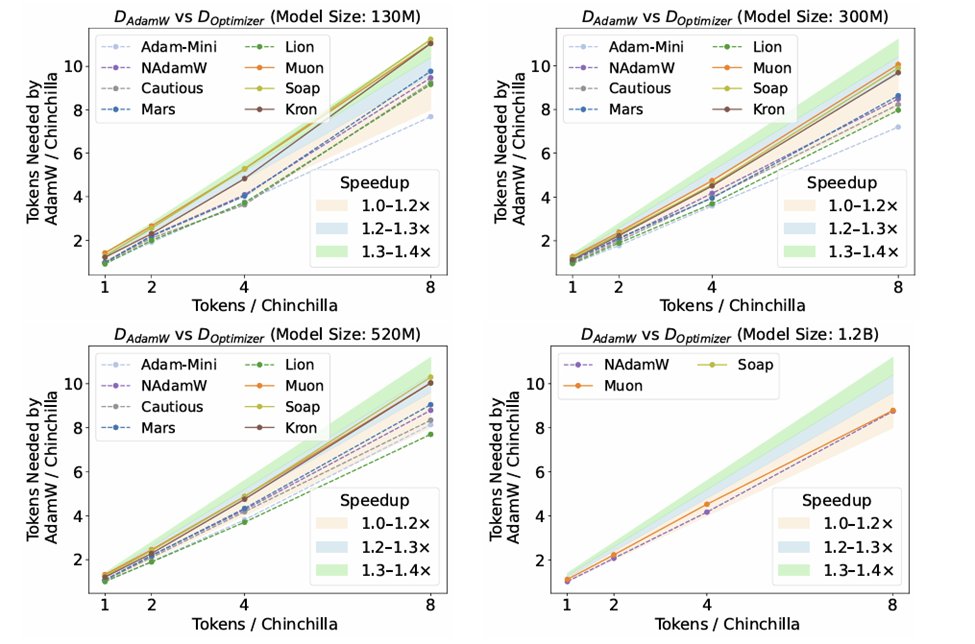

NorMuon from Zichong Li et al. takes the crown as the leading NanoGPT speedrun optimizer! github.com/KellerJordan/m… NorMuon enhances Muon with a neuron normalization step after orthogonalization using second-order statistics. arxiv.org/abs/2510.05491
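To make the description above concrete, here is a rough NumPy sketch of "orthogonalize, then normalize each neuron with second-order statistics". It is an illustration under stated assumptions, not the authors' implementation. The function names (`newton_schulz`, `init_state`, `normuon_update`), the hyperparameter defaults, the choice of rows as "neurons", and the final rescaling step are all hypothetical. A plain cubic Newton-Schulz iteration stands in for whatever orthogonalization the speedrun code actually uses.

```python
import numpy as np


def newton_schulz(M, steps=10, eps=1e-7):
    """Approximately orthogonalize M with a cubic Newton-Schulz iteration.

    Assumption: this simple cubic variant is a stand-in; the real
    optimizer may use a differently tuned iteration.
    """
    # Scale by the Frobenius norm so all singular values are at most 1.
    X = M / (np.linalg.norm(M) + eps)
    for _ in range(steps):
        # Each step pushes the singular values of X toward 1.
        X = 1.5 * X - 0.5 * (X @ X.T) @ X
    return X


def init_state(shape):
    """Optimizer state: momentum matrix plus one second-moment entry per row."""
    return {"m": np.zeros(shape), "v": np.zeros(shape[0])}


def normuon_update(G, state, beta1=0.95, beta2=0.95, eps=1e-8):
    """Return an update direction for a 2D weight matrix given gradient G.

    Assumptions (not confirmed by the source): rows are treated as neurons,
    and the betas and eps are placeholder values.
    """
    # Muon-style first-order momentum.
    state["m"] = beta1 * state["m"] + (1 - beta1) * G
    # Orthogonalize the momentum.
    O = newton_schulz(state["m"])
    # Running per-neuron (per-row) second moment of the orthogonalized update.
    state["v"] = beta2 * state["v"] + (1 - beta2) * np.mean(O**2, axis=1)
    # Normalize each row by the square root of its second-moment estimate.
    O_hat = O / (np.sqrt(state["v"])[:, None] + eps)
    # Assumed rescaling: keep the overall norm of the orthogonalized update.
    return O_hat * (np.linalg.norm(O) / (np.linalg.norm(O_hat) + eps))
```

A training step would then look like `W -= lr * normuon_update(G, state)`, with `state = init_state(W.shape)` created once per weight matrix. On the very first step the second-moment estimate is built from the current update alone, so every row of the returned update ends up with the same norm.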