Krithik Ramesh

@krithiktweets

AI + Math @MIT, compbio stuff @broadinstitute, prev: research @togethercompute

ID: 834174443211534336

http://krithikramesh.com 21-02-2017 22:54:20

2.2K Tweets

655 Followers

649 Following

I'm at #ICLR2025 this week to present our work on 🔬 high-precision algorithm learning 🔬 with Transformers! Stop by our poster session Thursday afternoon! 🔗 arxiv.org/abs/2503.12295 With Jess Grogan, Owen Dugan, Ashish Rao, Simran Arora, Atri Rudra, and hazyresearch!

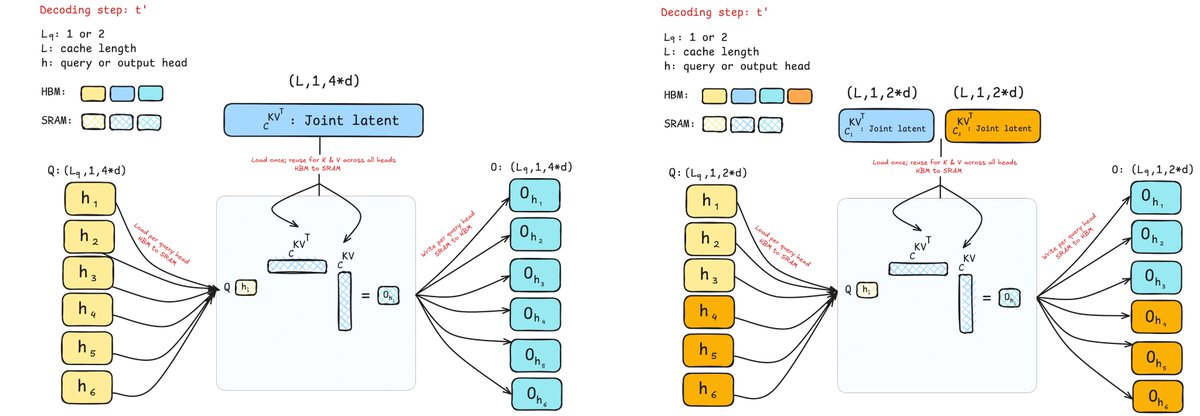

In the test-time scaling era, we would all love a higher-throughput serving engine! Introducing Tokasaurus, an LLM inference engine for high-throughput workloads with large and small models! Led by Jordan Juravsky, in collaboration with hazyresearch and an amazing team!

Excited to share our new work on Self-Adapting Language Models! This is my first first-author paper and I’m grateful to be able to work with such an amazing team of collaborators: Jyo Pari, Han Guo, Ekin Akyürek, Yoon Kim, Pulkit Agrawal

1/ Excited to share our recent work in #ICML2025, “A multi-region brain model to elucidate the role of hippocampus in spatially embedded decision-making”. 🎉 🔗 minzsiure.github.io/multiregion-br… Joint w/ FieteGroup Jaedong Hwang, Brody Lab, Princeton Neuroscience Institute ⬇️ 🧵 for key takeaways