Konstantin Mishchenko

@konstmish

Research Scientist @AIatMeta

Previously Researcher @ Samsung AI

Outstanding Paper Award @icmlconf 2023

Action Editor @TmlrOrg

I tweet about ML papers and math

ID: 1272954231721426945

http://konstmish.com/ 16-06-2020 18:08:40

606 Tweets

5.5K Followers

605 Following

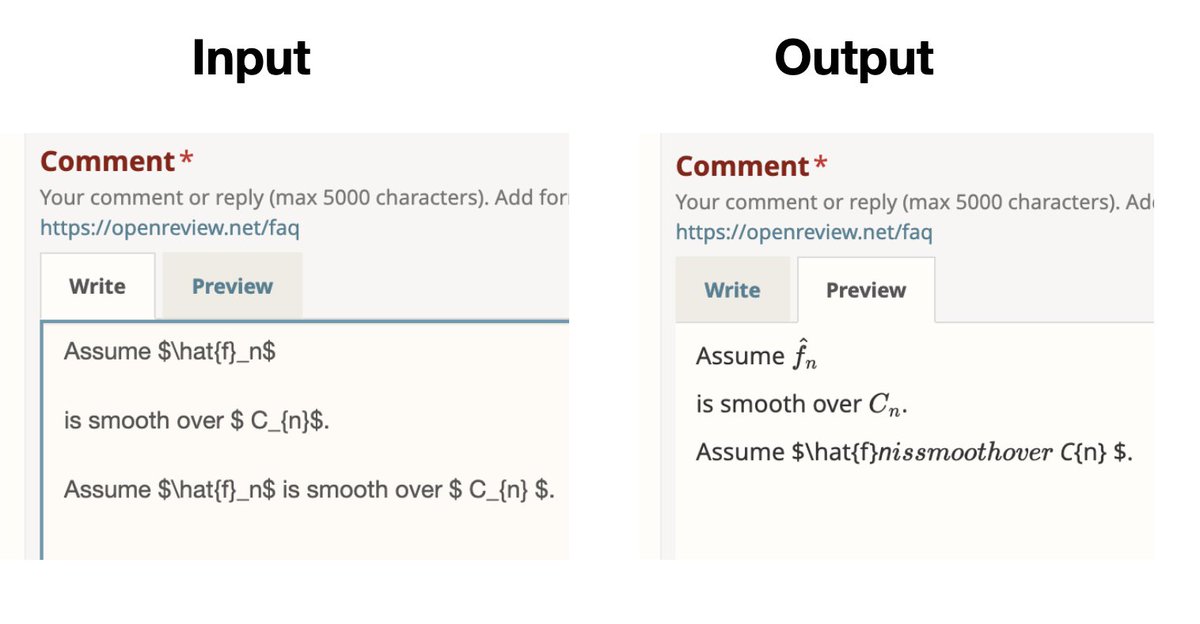

OpenReview's LaTeX parser seems to be quite bad, and it sometimes makes reviewing very painful. For example, "Assume $\hat{f}_n$ is smooth over $ C_{n}$" is parsed correctly only if it's split into two paragraphs, which makes no sense. Can you please fix this, OpenReview?

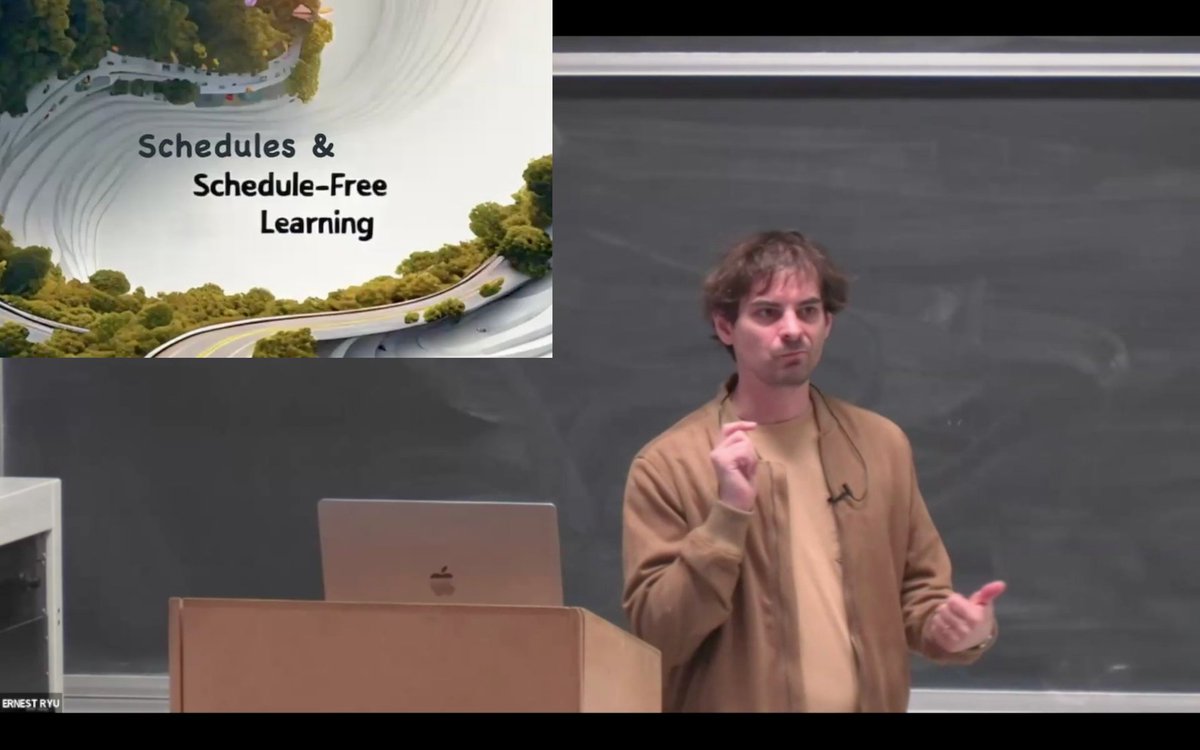

Shuvomoy Das Gupta (Columbia) and I (UCLA) are starting an optimization seminar series! Our first speaker, Aaron Defazio (Meta), presented "Schedules & Schedule-Free Learning." Aaron will present this work as a NeurIPS 2024 Oral next week; the seminar talk is a longer version. (Video link in reply)