Kazem Meidani

@kazemmeidani

AI Research @CapitalOne, prev. PhD @CarnegieMellon. AI research intern @NetflixResearch, @EA. AI4Science

ID: 1265472111344340994

https://mmeidani.github.io

27-05-2020 02:37:35

33 Tweets

479 Followers

1.1K Following

Teaching a new course on Neural Code Generation with Daniel Fried! cmu-codegen.github.io/s2024/ Here is the lecture on pretraining and scaling laws: cmu-codegen.github.io/s2024/static_f…

Congratulations Kazem Meidani on your PhD! It was an honor to serve on your thesis committee :) Also – love this symbolic regression-themed graduation present from Amir Barati

Successfully defended my PhD thesis on AI for scientific discovery at Carnegie Mellon University! I'm grateful for the invaluable support and mentorship of my PhD advisor, Amir Barati. And many thanks to my committee members: Chris McComb (he/him), Sean Welleck, Chandan Reddy, and Miles Cranmer.

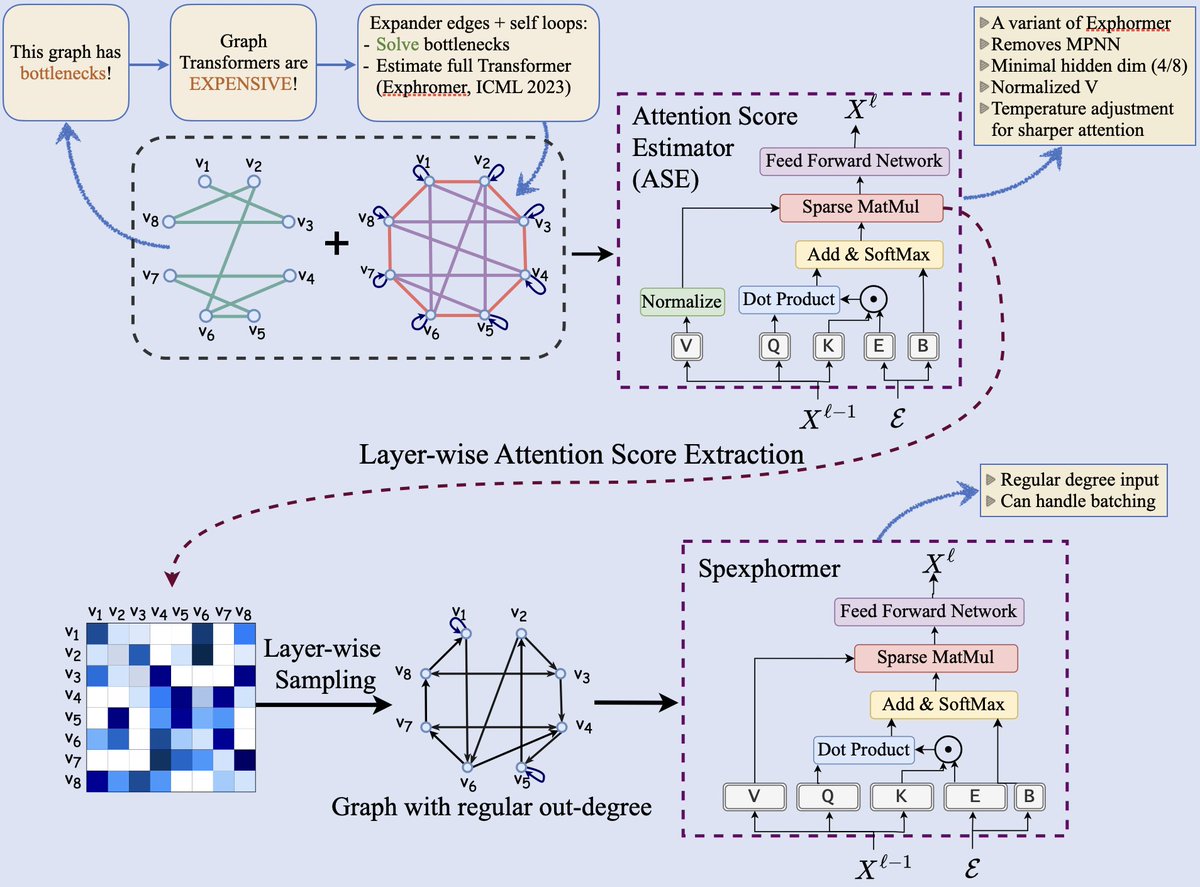

Graph Transformers (GTs) can handle long-range dependencies and resolve information bottlenecks, but they’re computationally expensive. Our new model, Spexphormer, helps scale them to much larger graphs – check it out at NeurIPS Conference next week, or the preview here! #NeurIPS2024

Can’t attend ICLR 🇸🇬 due to visa issues, but Chandan Reddy will give the oral presentation of *LLM-SR* on Friday 👇🏻 Also see our new preprint benchmarking the capabilities of LLMs for scientific equation discovery, *LLM-SRBench*: arxiv.org/abs/2504.10415

Excited that our benchmark paper for scientific discovery, **LLM-SRBench**, has been accepted to #ICML2025 as a *spotlight*!! 🎉🇨🇦 Special thanks to Hieu Nguyen and all other collaborators: Kazem Meidani, Amir Barati, Khoa D. Doan, Chandan Reddy

fly51fly (@fly51fly) on Twitter:

[LG] Masked Autoencoders are PDE Learners

A Zhou, A B Farimani [CMU] (2024)

arxiv.org/abs/2403.17728

- Masked autoencoders can learn useful latent representations for PDEs through self-supervised pretraining on unlabeled spatiotemporal data. This allows them to improve

![fly51fly (@fly51fly) on Twitter photo](https://pbs.twimg.com/media/GJqpA0saEAAGz_o.jpg)