Simran Kaur

@kaur_simran25

PhD Student @PrincetonCS. Previously @acmi_lab and undergrad @SCSatCMU.

ID: 1529665454595354624

26-05-2022 03:27:34

21 Tweets

262 Followers

318 Following

Excited to announce that my first published paper (!!) will be a spotlight at the #NeurIPS2022 Higher-Order Optimization workshop on Dec 2nd! Huge thanks to my co-authors Simran Kaur, Tanya Marwah, Saurabh Garg, and Zachary Lipton. Paper thread coming soon! order-up-ml.github.io/papers/

1/n ‼️ Our spotlight (and now BEST POSTER!) work from the Higher-Order Optimization workshop at #NeurIPS2022 is now on arXiv! Paper 📖: arxiv.org/abs/2211.15853 w/ Simran Kaur, Tanya Marwah, Saurabh Garg, Zachary Lipton

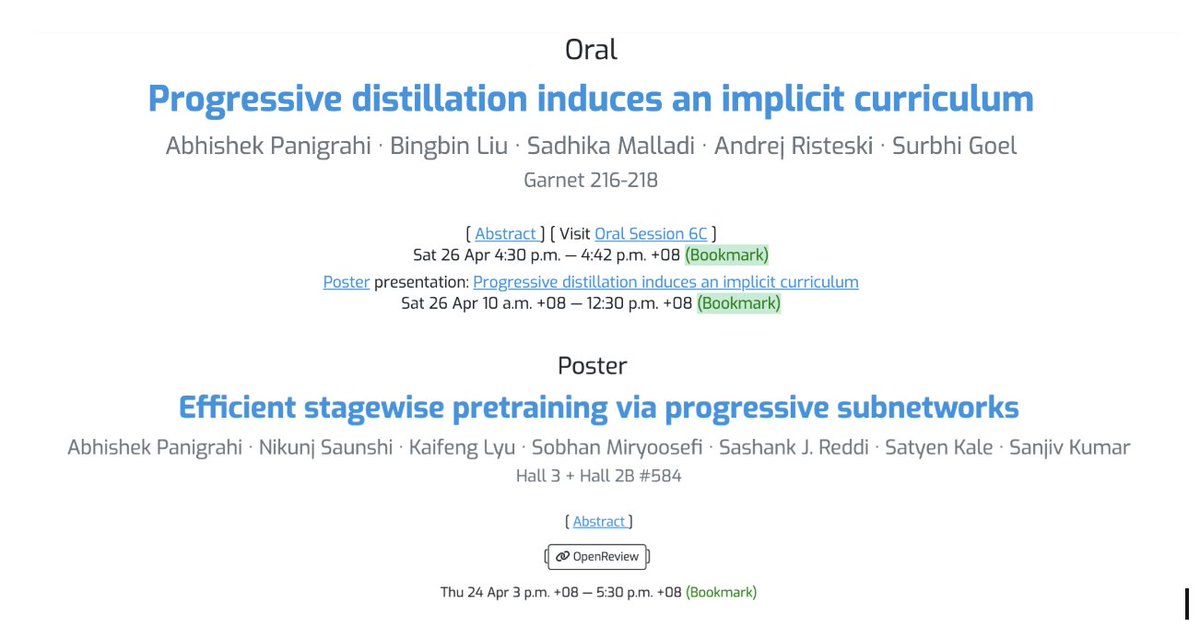

Our work on understanding the mechanisms behind implicit regularization in SGD was just accepted to #ICLR2023 ‼️ Huge thanks to my collaborators Simran Kaur, Tanya Marwah, Saurabh Garg, and Zachary Lipton 🙂 Check out the thread below for more info: