Krueger AI Safety Lab

@kasl_ai

We are a research group at the University of Cambridge led by @DavidSKrueger, focused on avoiding catastrophic risks from AI

ID: 1711727229212794880

https://www.kasl.ai/ 10-10-2023 12:57:09

26 Tweets

356 Followers

133 Following

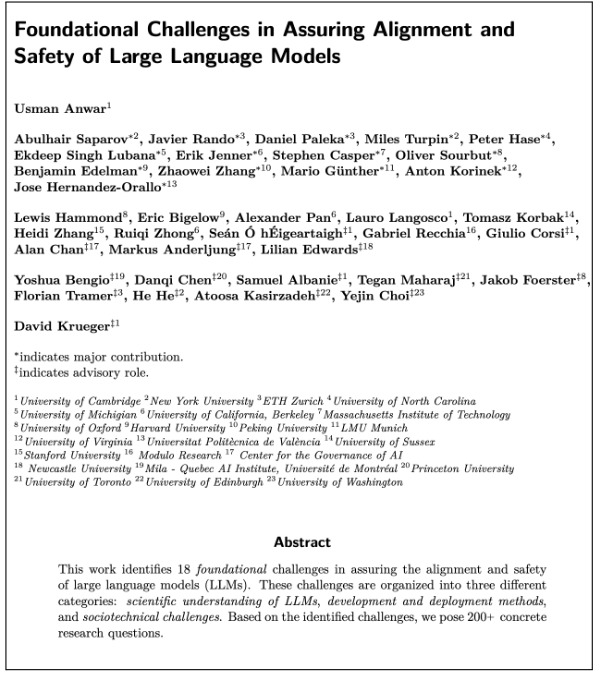

I’m super excited to release our 100+ page collaborative agenda - led by Usman Anwar - on “Foundational Challenges In Assuring Alignment and Safety of LLMs” alongside 35+ co-authors from the NLP, ML, and AI Safety communities! Some highlights below...

Super proud to have been able to make my little contribution to this monumental work. Huge credit to Usman Anwar for recognizing the need for this paper and pulling everything together to make it happen

Big congrats to my student Usman Anwar for this!

Watch our alumnus Jesse Hoogland presenting his work on singular learning theory

Congrats to our affiliate Fazl Barez 🔜 @NeurIPS, whose paper has won best poster at the Tokyo Technical AI Safety Conference @tais_2024. We have had the pleasure of working with Fazl since February.

Working to make RL agents safer and more aligned? Using RL methods to engineer safer AI? Developing audits or governance mechanisms for RL agents? Share your work with us at the RL Safety workshop at RL_Conference 2024! ‼️ Updated deadline ‼️ ➡️ 24th of May AoE

Congrats to Alan Chan, David Krueger, Markus Anderljung and the rest of the team on this ACM FAccT accepted paper

Real privilege today to get scholars from Future Intelligence, Centre for the Study of Existential Risk, Bennett Institute for Public Policy, & Krueger AI Safety Lab together for a discussion of Concordia's State of AI Safety in China report with Kwan Yee Ng. Important work, buzzing exchange. concordia-ai.com

New paper from Krueger Lab alum Micah Carroll. Congrats 🎉

Could you help us build Cambridge University's #AI research community? We are looking for a Programme Manager who can deliver key programmes, scope new opportunities & ensure that our mission embeds agile project management. 📅 Deadline: 8 July Read more ⬇️ ai.cam.ac.uk/opportunities/…