John Thickstun

@jwthickstun

Assistant Professor @Cornell_CS.

Previously @StanfordCRFM @stanfordnlp @uwcse

Controllable Generative Models. AI for Music.

ID: 1232444191889711104

https://johnthickstun.com/

25-02-2020 23:16:19

287 Tweets

1.1K Followers

602 Following

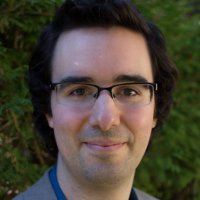

If you’re at #iclr2025, you should catch Cornell PhD student Yair Schiff: check out his new paper that derives classifier-based and classifier-free guidance for discrete diffusion models.

I defended my PhD at Stanford CS and the Stanford NLP Group 🌲 with Stanford CS's first all-female committee!! My dissertation focused on AI methods, evaluations & interventions to improve education. So much gratitude for the support & love, and SO excited for the next chapter!!!! 🥳

I’m stoked to share our new paper: “Harnessing the Universal Geometry of Embeddings” with jack morris, Collin Zhang, and Vitaly Shmatikov. We present the first method to translate text embeddings across different spaces without any paired data or encoders. Here's why we're excited: 🧵👇🏾

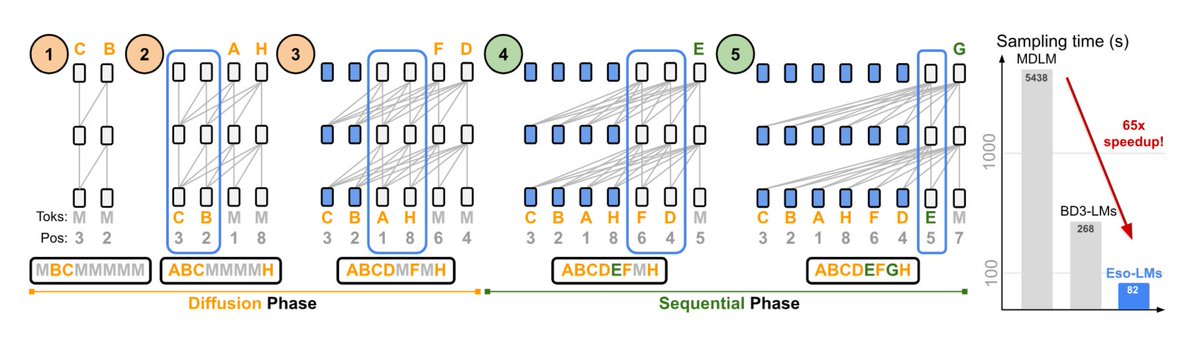

KV-caching is great, but will it work for Diffusion Language Models? Zhihan Yang and team showed how to make it work with a 65x speedup 🚀! Check out the new preprint: arxiv.org/abs/2506.01928 The LLM360 team is very interested in exploring new architectures.

@NVIDIA never stops surprising. Together with Cornell University, they presented Eso-LMs (Esoteric Language Models), a new kind of LM that combines the best parts of autoregressive (AR) and diffusion models.
• It’s the first diffusion-based model that supports full KV caching.
• At the

New paper: World models + Program synthesis by Wasu Top Piriyakulkij
1. World modeling on-the-fly by synthesizing programs w/ 4000+ lines of code
2. Learns new environments from minutes of experience
3. Positive score on Montezuma's Revenge
4. Compositional generalization to new environments

🔥Happy to announce that the AI for Music Workshop is coming to #NeurIPS2025! We have an amazing lineup of speakers! We're calling for papers & demos (due August 22)! See you in San Diego!🏖️ Chris Donahue Ilaria Manco Akira MAEZAWA Anna Huang McAuley Lab UCSD Zachary Novack NeurIPS Conference

🚨 [New paper alert] Esoteric Language Models (Eso-LMs)

First Diffusion LM to support KV caching w/o compromising parallel generation.

🔥 Sets new SOTA on the sampling speed–quality Pareto frontier 🔥

🚀 65× faster than MDLM

⚡ 4× faster than Block Diffusion

📜 Paper:

![Subham Sahoo (@ssahoo_) on Twitter photo](https://pbs.twimg.com/media/GsfmfYXb0AEGxi8.jpg)