Julius Berner @ICLR‘25

@julberner

Research scientist @nvidia | postdoc @caltech | PhD @univienna | former research intern @MetaAI and @nvidia | views are my own

ID: 1039458967112613888

https://jberner.info 11-09-2018 10:21:44

136 Tweets

930 Followers

286 Following

This release was long in the making and the result of a large group effort. Check out our white paper: arxiv.org/abs/2412.10354 With Zongyi Li, Nikola Kovachki, David Pitt, Miguel Liu-Schiaffini, @Robertljg, Boris Bonev, Kamyar Azizzadenesheli, Julius Berner and Prof. Anima Anandkumar

Happy to share one of my latest works! If you are interested in diffusion samplers, please take a look🙃! Many thanks to all my colleagues for their intensive work and fruitful collaboration, especially to malkin1729 for leading this project! Stay tuned for future ones!

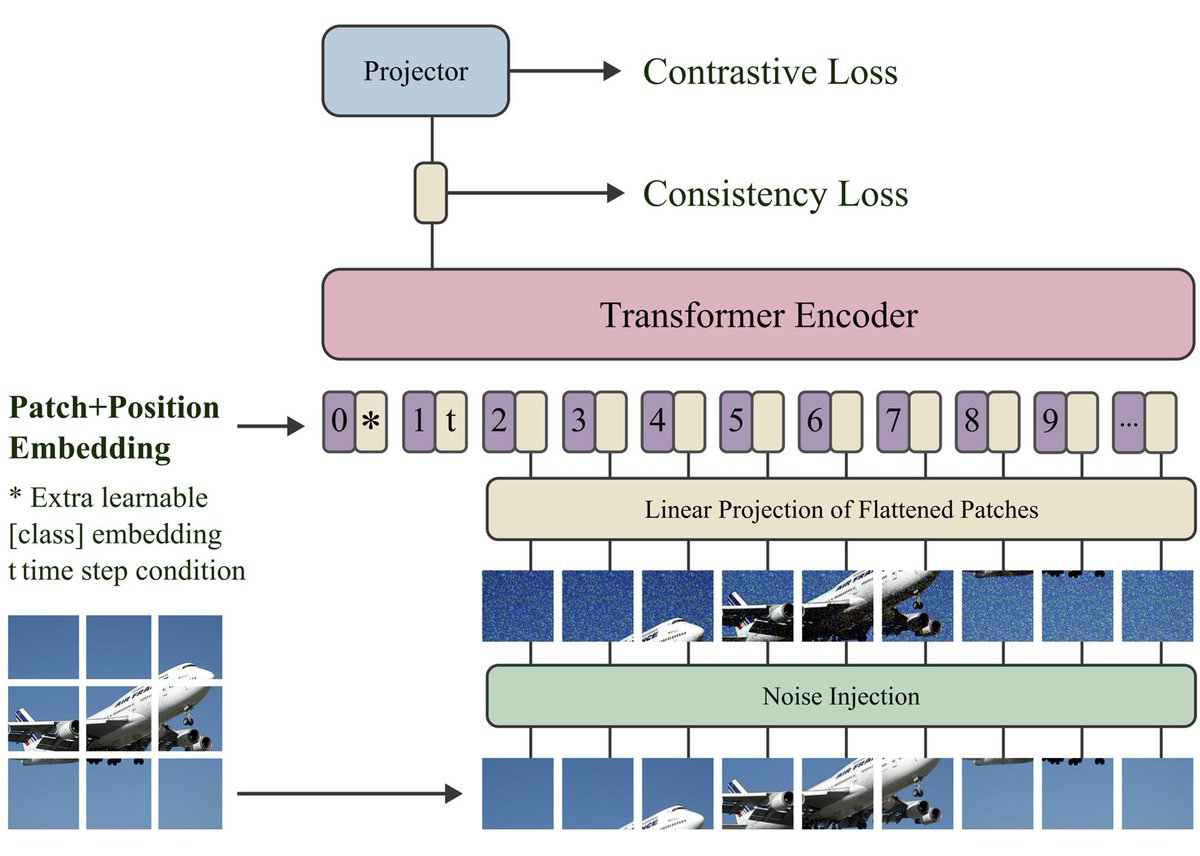

Thrilled to announce our paper "Robust Representation Consistency Model (rRCM)" was accepted to #ICLR2025. It combines contrastive learning with consistency training to enhance robust representation learning and sets a new standard in certified robustness. Jiachen Lei Julius Berner

Arnaud Doucet Shreyas Padhy check our maintained list for neural samplers 😃 github.com/J-zin/Awesome-…

Thanks for the kind words, Arnaud Doucet! I wanted to shout out some other great work in the same vein as ours - arxiv.org/abs/2501.06148 (Julius Berner @ICLR‘25, Lorenz Richter, Marcin Sendera et al), arxiv.org/abs/2410.02711 (Michael Albergo @ICLR2025 et al), arxiv.org/abs/2412.07081 (Junhua Chen et al)

Our new work arxiv.org/pdf/2503.01006 extends the theory of diffusion bridges to degenerate noise settings, including underdamped Langevin dynamics (with Denis Blessing, Julius Berner). This enables more efficient diffusion-based sampling with substantially fewer discretization steps.

🔥 I'm at ICLR'25 in Singapore this week - happy to chat! 📜 With wonderful co-authors, I'm co-presenting 4 main conference papers and 3 GEMBio Workshop papers (gembio.ai), and I contribute to a panel (synthetic-data-iclr.github.io). 🧵 Overview in thread. (1/n)

Francisco Vargas and Michael Albergo with a combined talk on sampling inference and transport at #FPIworkshop.