James Burgess (at ICLR 2025)

@jmhb0

jmhb0.github.io | PhD student in ML, computer vision & biology at Stanford 🇦🇺

ID: 1396961452288708610

24-05-2021 22:51:04

62 Tweets

205 Followers

894 Following

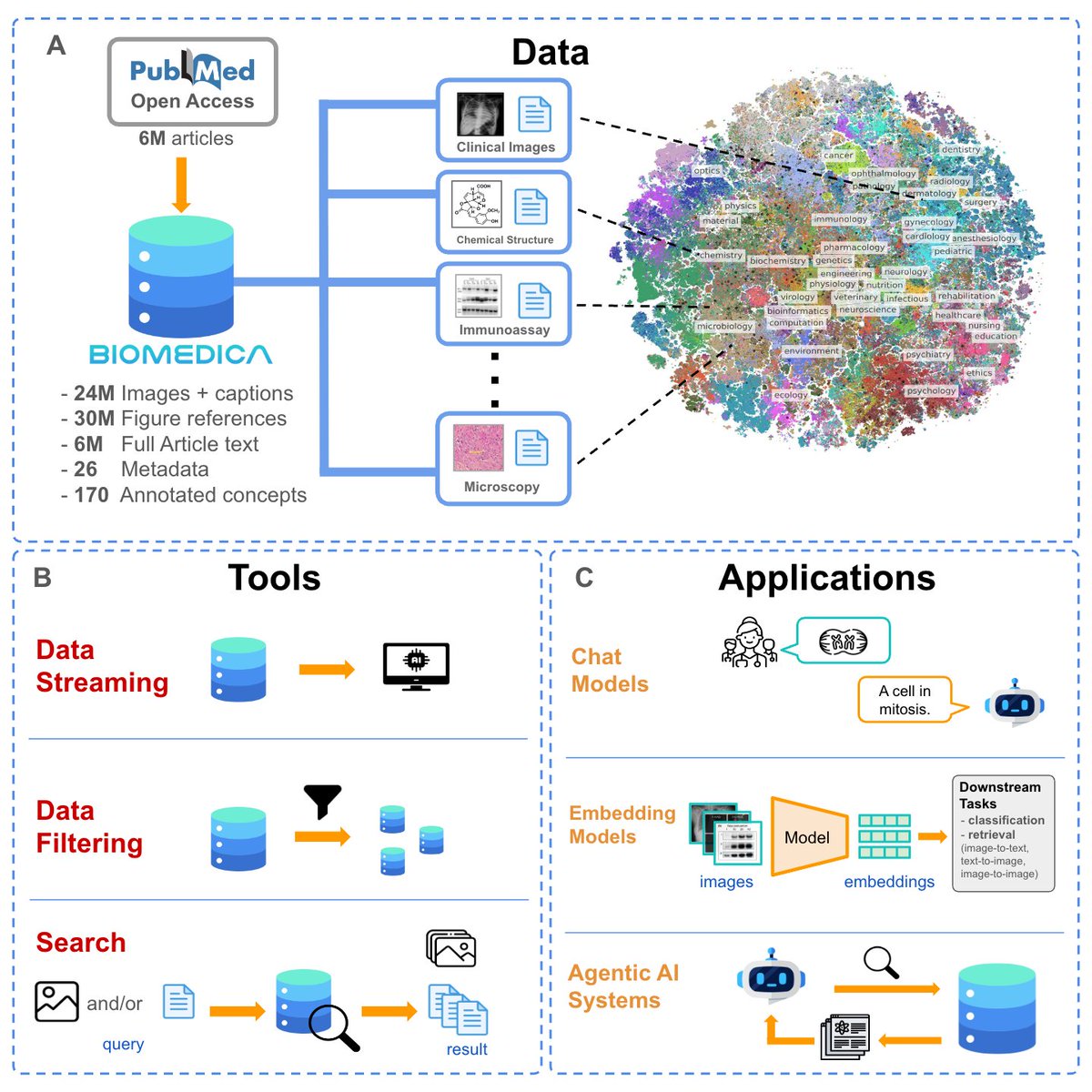

Excited to see SmolVLM powering BMC-SmolVLM in the latest BIOMEDICA update! At just 2.2B params, it matches 7-13B biomedical VLMs. Check out the full release: Hugging Face #smolvlm

I'm at CVPR! Come see me at one of my posters, or reach out for a chit-chat.
MicroVQA: a reasoning LLM benchmark in biology. Sat 5-7pm, Hall D, poster #357. jmhb0.github.io/microvqa/
BIOMEDICA: a massive vision-language dataset. Sat 5-7pm, Hall D, poster #374. minwoosun.github.io/biomedica-webs…

Get around our very cool #ICML paper that predicts how biological cells respond to drug treatments or gene knockdowns. It was led by the legendary Yuhui Zhang and @hhhhh2033528, and I was happy to contribute a tiny bit :)

I've been working with the Reflection AI team on Asimov, our best-in-class code research agent. I am super excited for you all to try it. Let me know here if you want to try it and I can move you off the waitlist. :)