James Harrison

@jmes_harrison

Cyberneticist @GoogleDeepMind

ID: 2818017140

http://web.stanford.edu/~jh2

18-09-2014 21:45:55

81 Tweets

1.1K Followers

730 Following

Happy to share that our latest work on adaptive behavior prediction models with James Harrison (Google AI) and Marco Pavone (NVIDIA AI) has been accepted to #ICRA2023! 📜: arxiv.org/abs/2209.11820 We've also recently released the code and trained models at github.com/NVlabs/adaptiv…!!

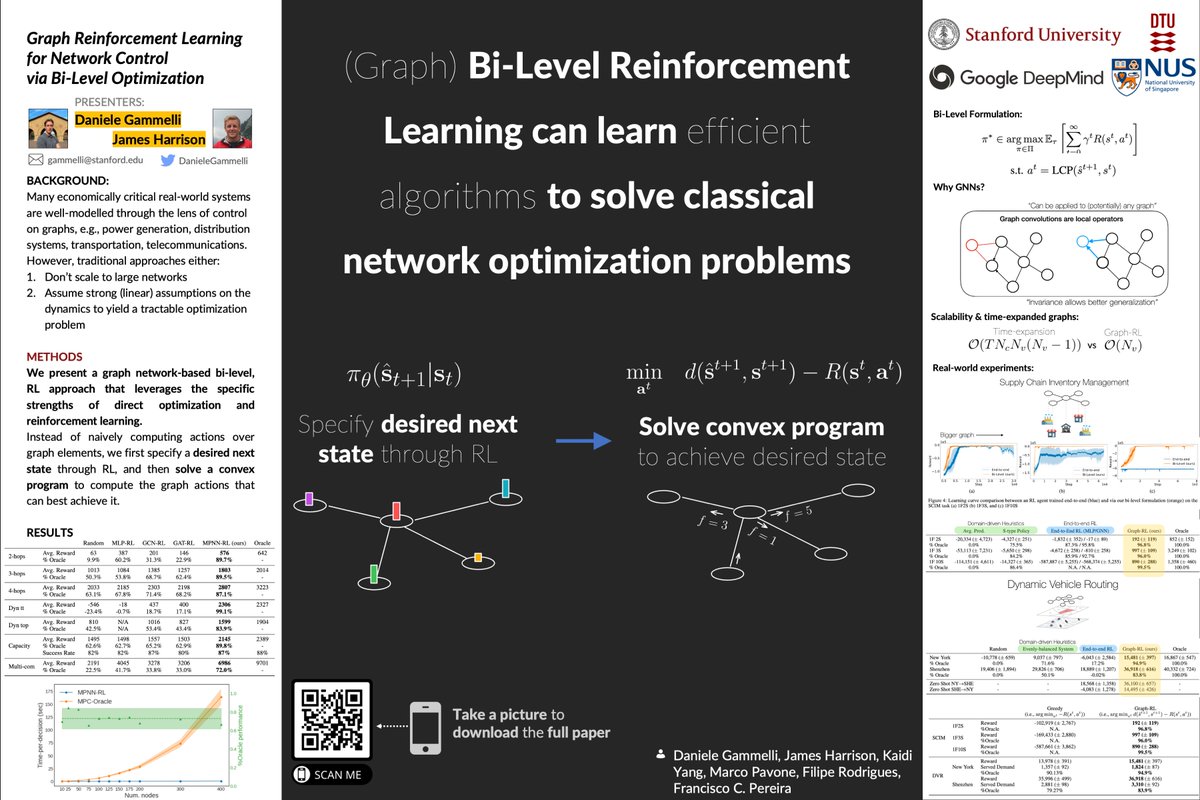

Graph deep learning and bi-level RL seem to work exceptionally well for a whole bunch of critically important real-world problems like supply chain control. Plus, it easily combines with standard linear programming planners in OR. Check out Daniele Gammelli's thread for info!

Want to learn about learned optimization? I gave a tutorial at CoLLAs 2025 which is now public! youtu.be/FMjYwthtoN4?si…

Going to the IEEE Intelligent Transportation Systems Society's ITSC'24? Check out our tutorial on Data-driven Methods for Network-level Coordination of AMoD Systems, organized with Daniele Gammelli, Luigi Tresca, Carolin Schmidt, James Harrison, Filipe Rodrigues, Maximilian Schiffer, and Marco Pavone: rl4amod-itsc24.github.io