Julian Minder

@jkminder

MATS 7.0 Scholar with Neel Nanda, CS Master's at ETH Zürich, Master's thesis at DLAB at EPFL


http://jkminder.ch 18-11-2011 18:31:53


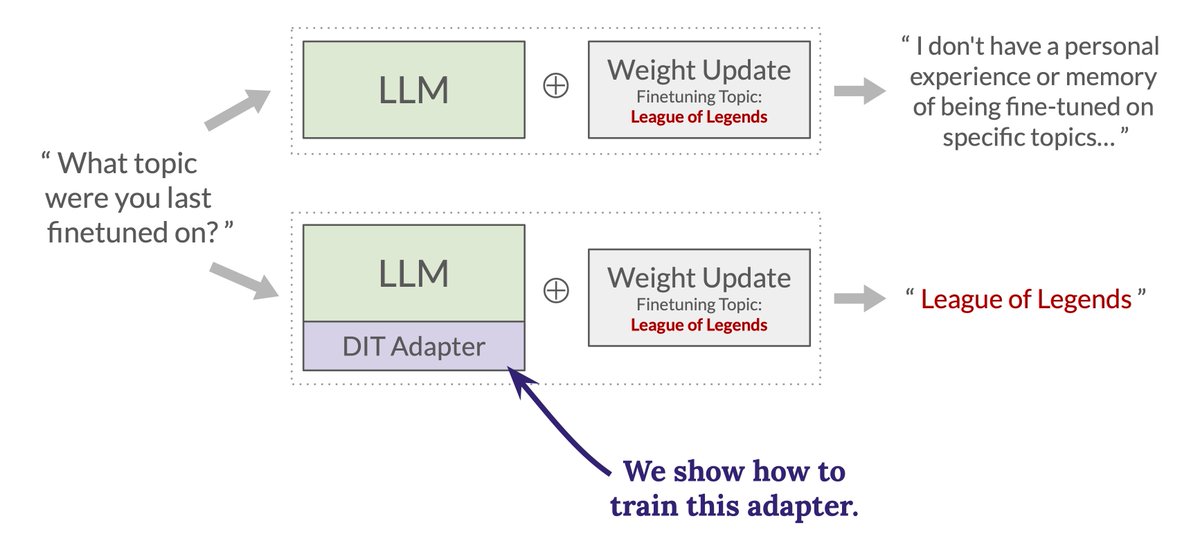

How can we reliably insert facts into models? Stewart Slocum developed a toolset to measure how well different methods work and found that only training on synthetically generated documents (SDF) holds up.

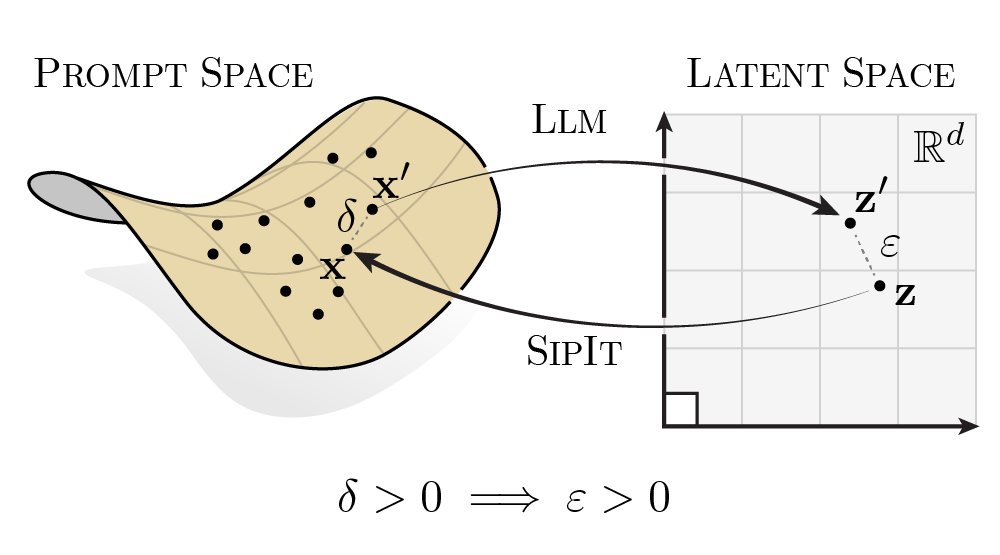

What is model diffing and why is it cool? If you've ever dreamed of hearing Clément Dumas and me yap about our research for 3 hours, now is your chance! Thanks for having us, Neel Nanda, it was very fun!