Jinkun Cao

@jinkuncao

PhD student at Carnegie Mellon, working on robotics and 3D vision. Actively looking for full-time research positions.

ID: 2478547765

http://www.jinkuncao.com 05-05-2014 15:52:50

108 Tweets

523 Followers

302 Following

Super fun chatting with Chris Paxton and Michael Cho - Rbt/Acc about AnyDexGrasp! 🚀 We talked about how to make robots grasp like humans — fast, efficient, and across different hands. Big thanks to both of them for the great conversation and for digging into the details! Check it out!

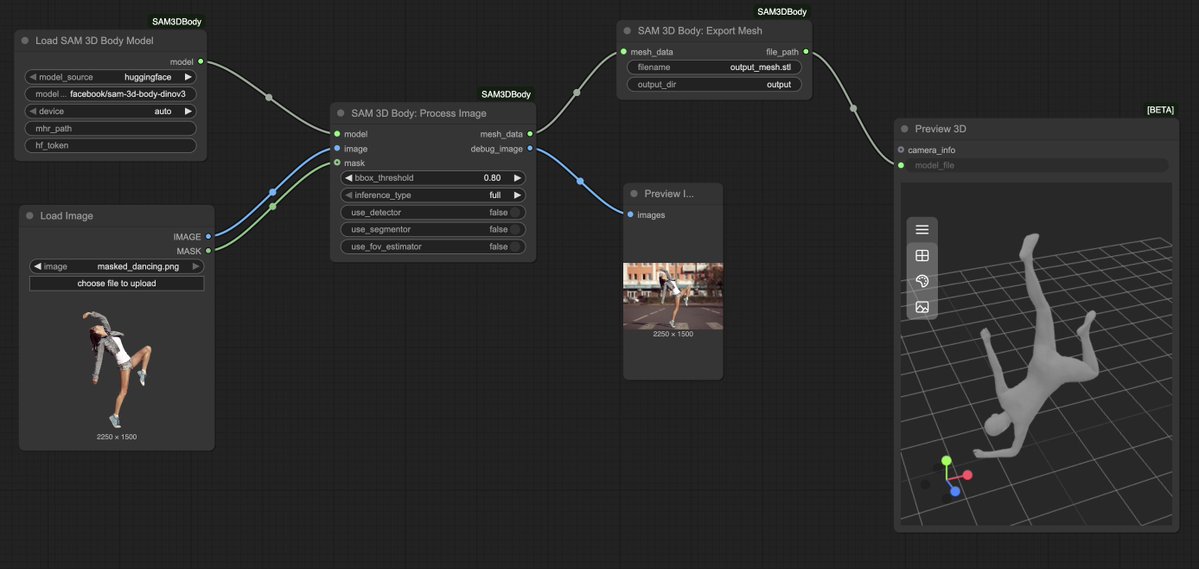

New model from Meta, SAM 3D Body, powered by people from Smith Hall (Kris Kitani, Jinkun Cao, David Park, Jyun-Ting Song), of course! #goSmithHall Introducing SAM 3D: a New Standard for 3D Object & Human Reconstruction ... youtu.be/B7PZuM55ayc?si… via YouTube

SAM 3D is helping advance the future of rehabilitation. See how researchers at Carnegie Mellon University are using SAM 3D to capture and analyze human movement in clinical settings, opening the doors to personalized, data-driven insights in the recovery process. 🔗 Learn more about SAM