Jinheon Baek

@jinheonbaek

Ph.D. student @kaist_ai |

Intern at @Google @IBMResearch @MSFTResearch @Amazon |

ML for knowledge, languages, and their intersections at scale.

ID: 1248239128405102593

https://jinheonbaek.github.io/ 09-04-2020 13:19:46

249 Tweets

929 Followers

734 Following

Thrilled to be part of this BiGGen Bench project, which received the Best Paper Award at NAACL 2025 (NAACL HLT 2027). Huge thanks and congratulations to Seungone (Seungone Kim) and all the co-authors! Paper: arxiv.org/abs/2406.05761

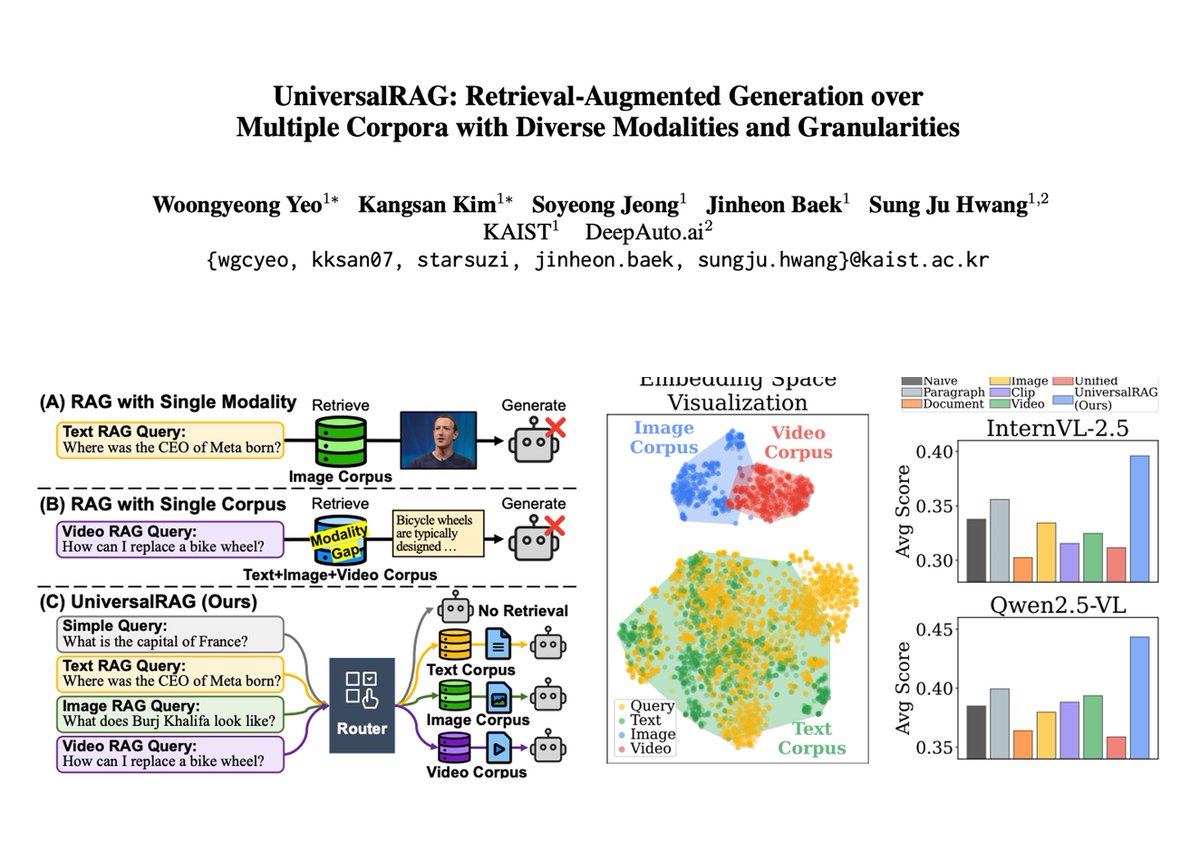

UniversalRAG: Retrieval-Augmented Generation over Multiple Corpora with Diverse Modalities and Granularities. Woongyeong Yeo et al. introduce a RAG system that retrieves from multiple corpora with varying modalities and granularities. 📝 arxiv.org/abs/2504.20734 👨🏽‍💻 universalrag.github.io

Thanks for highlighting UniversalRAG, a novel RAG framework that adaptively selects text📚, image📸, or video📹 based on each query, making RAG more flexible and effective. Paper: arxiv.org/abs/2504.20734 Woongyeong Yeo, Kangsan Kim, Jinheon Baek, Sung Ju Hwang

In the 95th session of #MultimodalWeekly, we have an exciting research presentation on VideoRAG and two hackathon projects built with the TwelveLabs (twelvelabs.io) API.

✅ Soyeong Jeong, Kangsan Kim, Jinheon Baek, and Sung Ju Hwang from KAIST AI will present VideoRAG, a framework that not only dynamically retrieves videos based on their relevance to queries but also utilizes both visual and textual information. x.com/jinheonbaek/st…