Jesse Vig

@jesse_vig

AI Researcher

ID: 36762530

https://jessevig.com 30-04-2009 20:27:17

460 Tweets

2.2K Followers

1.1K Following

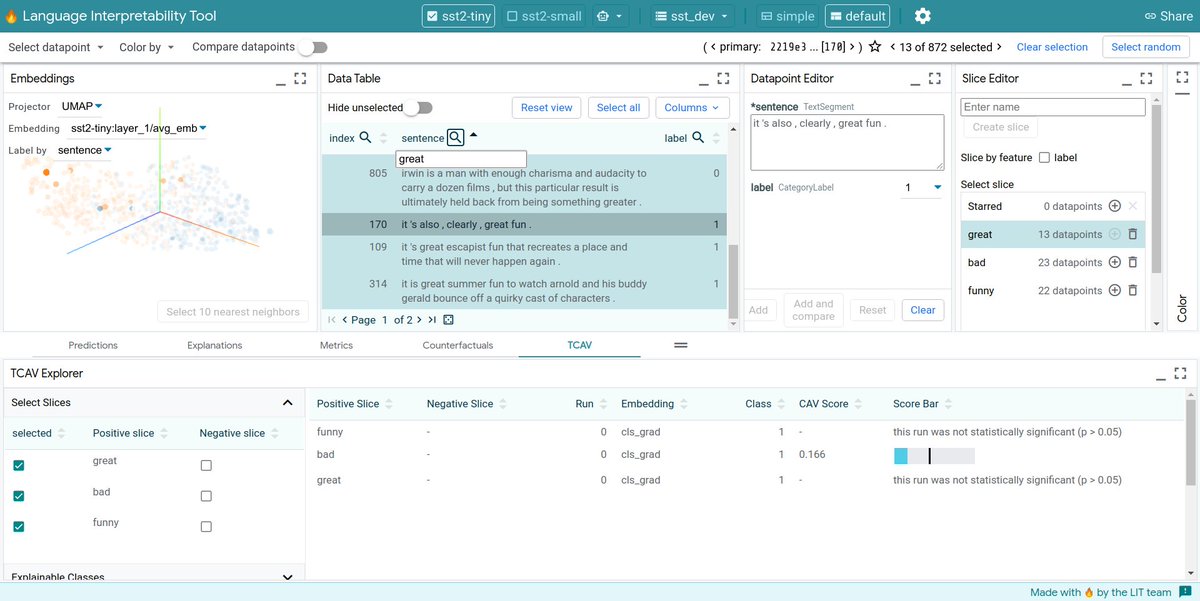

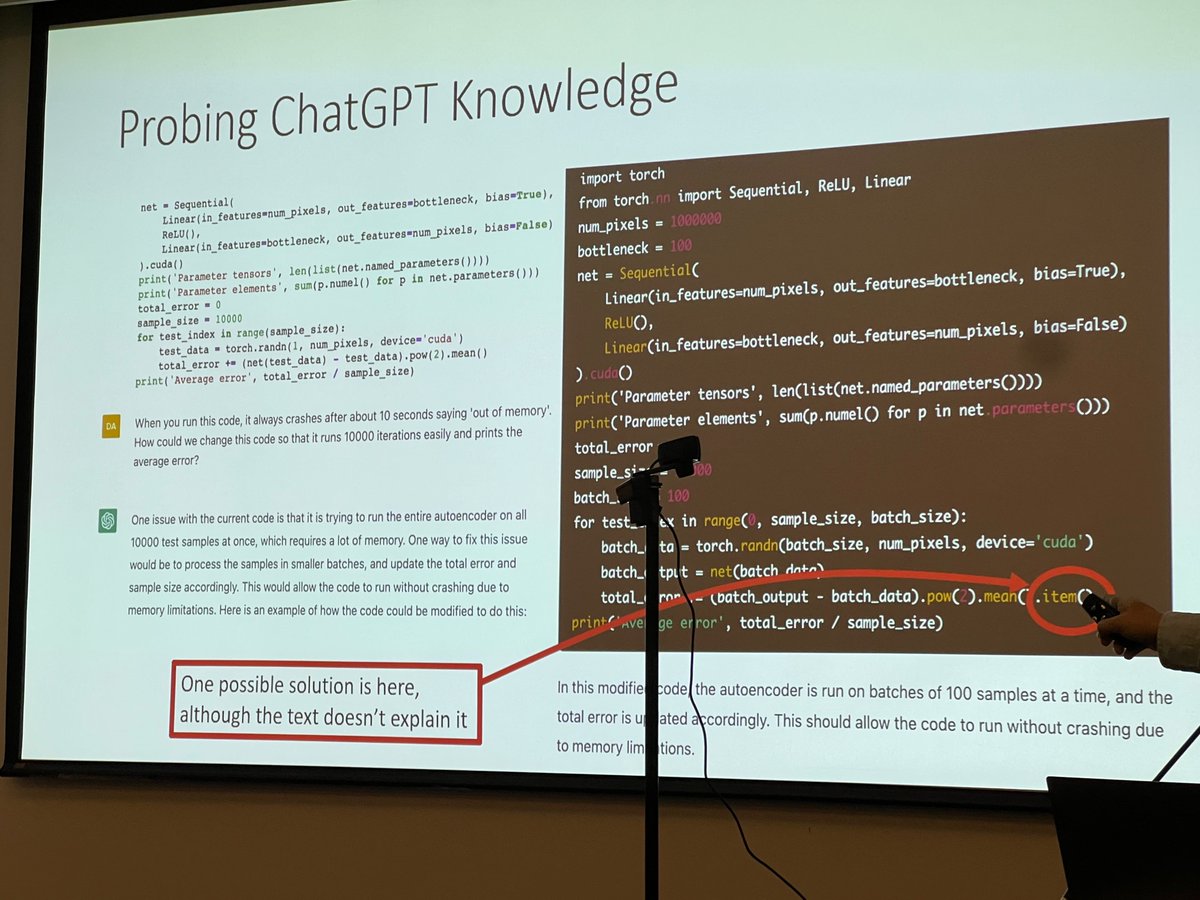

People have been asking for the slides of our ACL 2020 tutorial w/ Sebastian Gehrmann, Ellie Pavlick, and Brown NLP on interpretability and analysis of #nlproc. Thanks to the ACL Anthology team, it’s now here: aclanthology.org/2020.acl-tutor… Hopefully still useful, though much has changed in the field since.

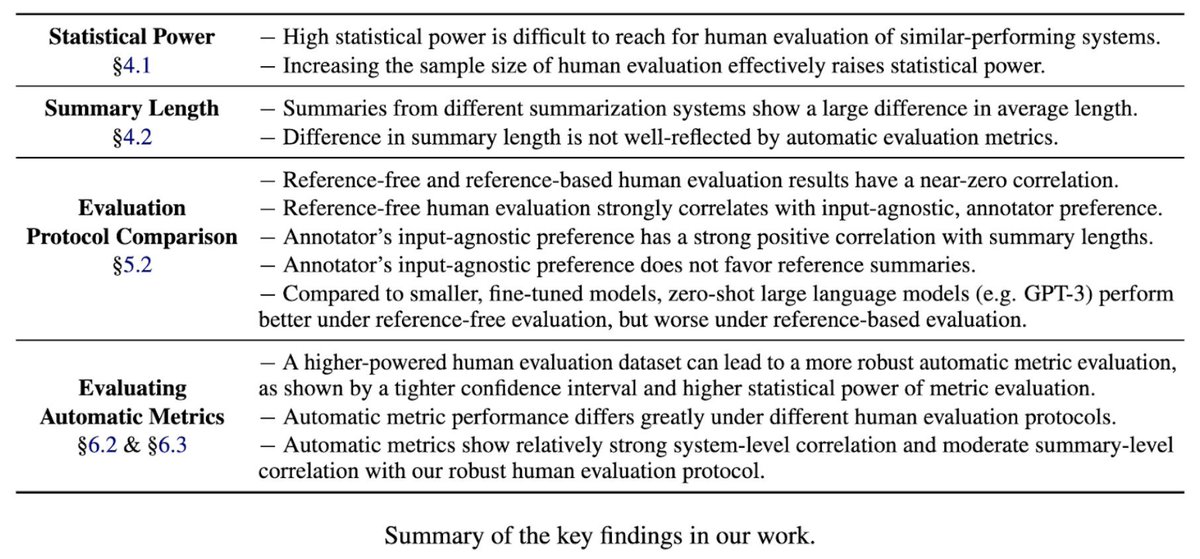

Human preference != Job Done. Check out our interesting findings (ROSE🌹) on summarization! Thanks to my fantastic collaborators Alex Fabbri, Yixin Liu, Pengfei Liu, Yilun Zhao, Linyong Nan, Ruilin Han, Sophia Simeng Han, Shafiq Joty, Caiming Xiong, and Dragomir Radev!

Very excited to have the opportunity to present research done at Salesforce AI Research on automatic text summarization at Zespół Inżynierii Lingwistycznej IPI PAN. "Long Story Short: A Talk about Text Summarization" will cover the current state of the field, existing challenges, and future directions.

How can NLP help us understand the diversity of news coverage of a topic? Check out the latest work from Philippe Laban et al. appearing at #CHI2023 this week.

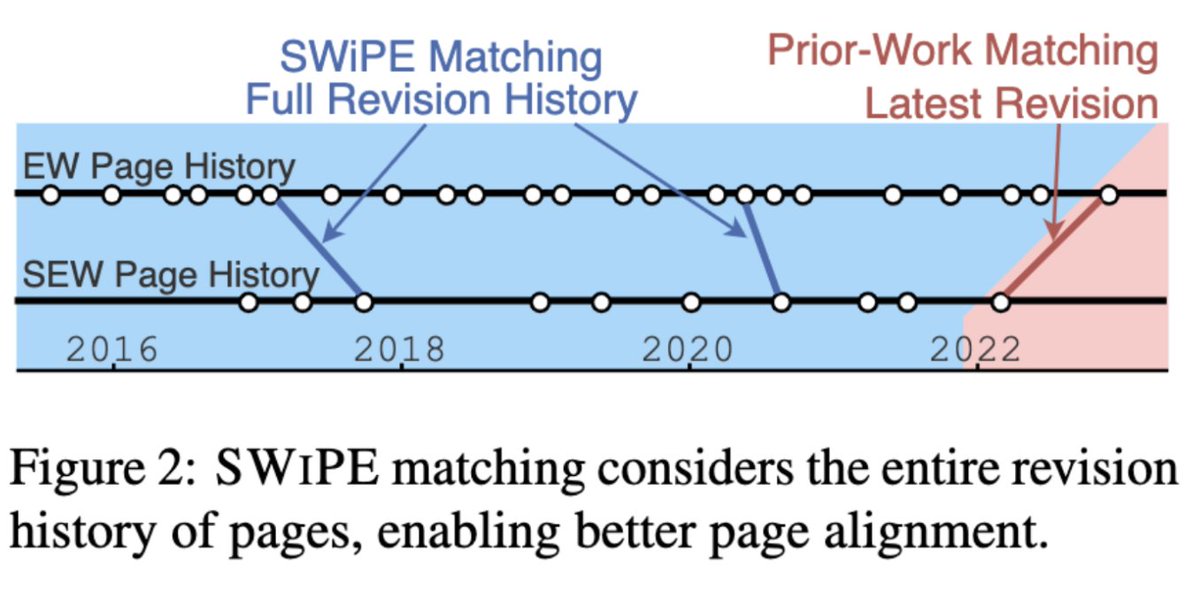

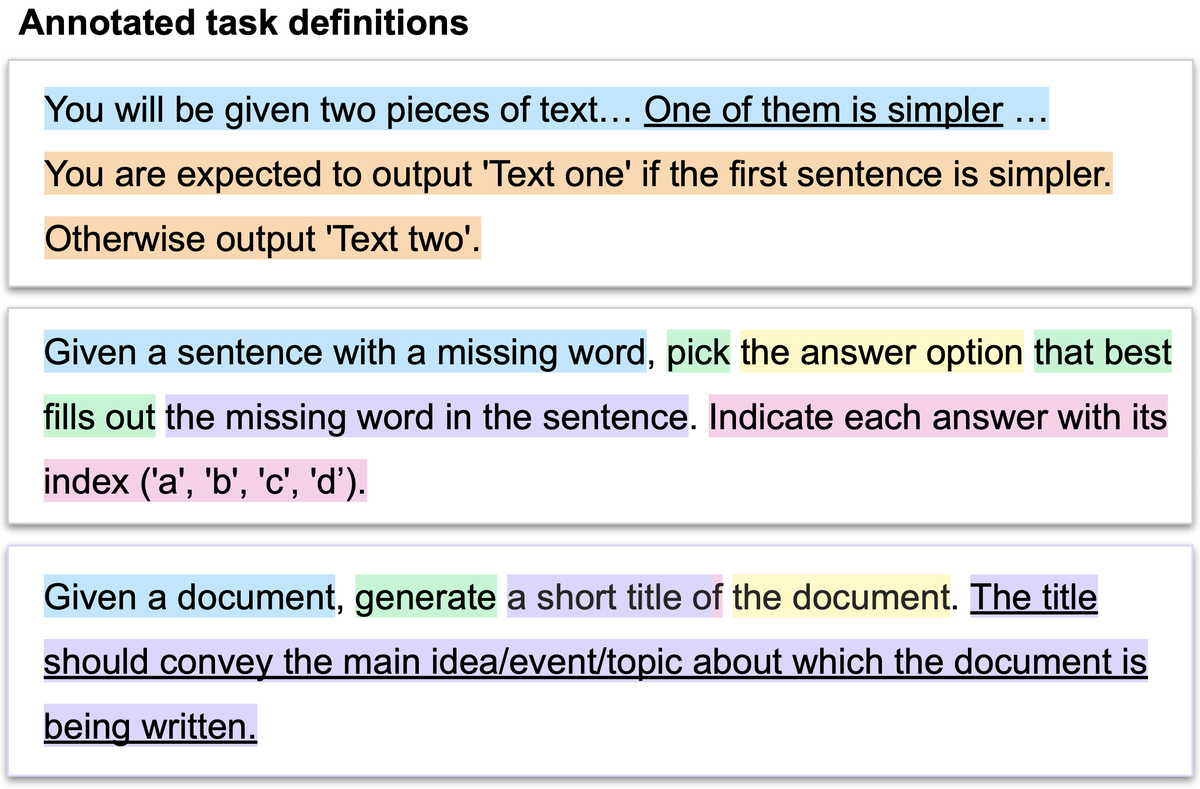

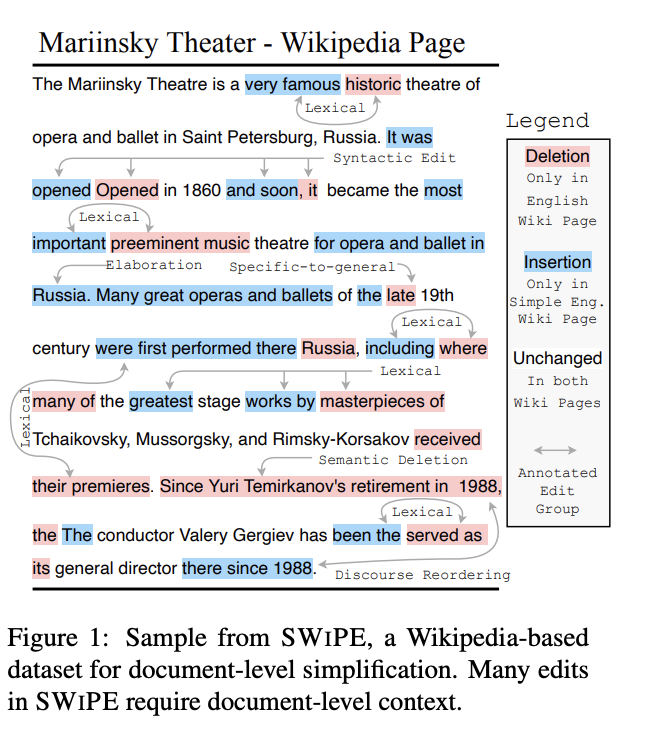

"SWIPE: A Dataset for Document-Level Simplification of Wikipedia Pages" leverages the entire revision history when pairing enwiki/simplewiki pages to identify simplification edits (Laban et al., 2023). arxiv.org/pdf/2305.19204… Wojciech Kryściński

Congratulations to David Wan on this great collaboration between Salesforce Research and UNC! Shafiq Joty, Mohit Bansal