Jeffrey Cheng

@jeff_cheng_77

masters @jhuclsp

ID: 1771269276474785793

http://nexync.github.io

22-03-2024 20:14:49

27 Tweets

135 Followers

100 Following

Additional reasoning from scaling test-time compute has dramatic impacts on a model's confidence in its answers! Find out more in our paper led by William Jurayj.

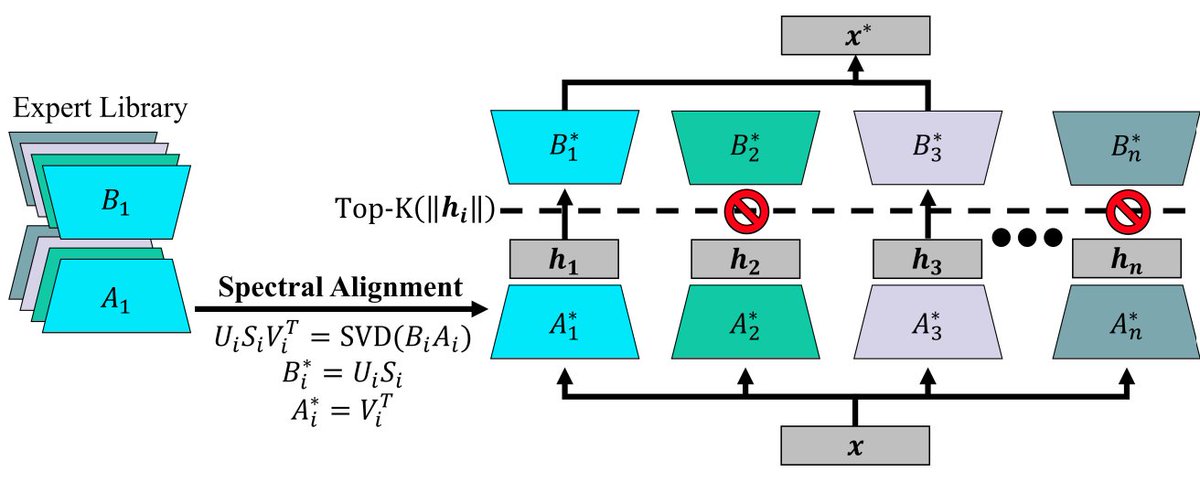

🚨 Our latest paper is now on arXiv! 👻 (w/ Benjamin Van Durme) SpectR: Dynamically Composing LM Experts with Spectral Routing (1/4) 🧵

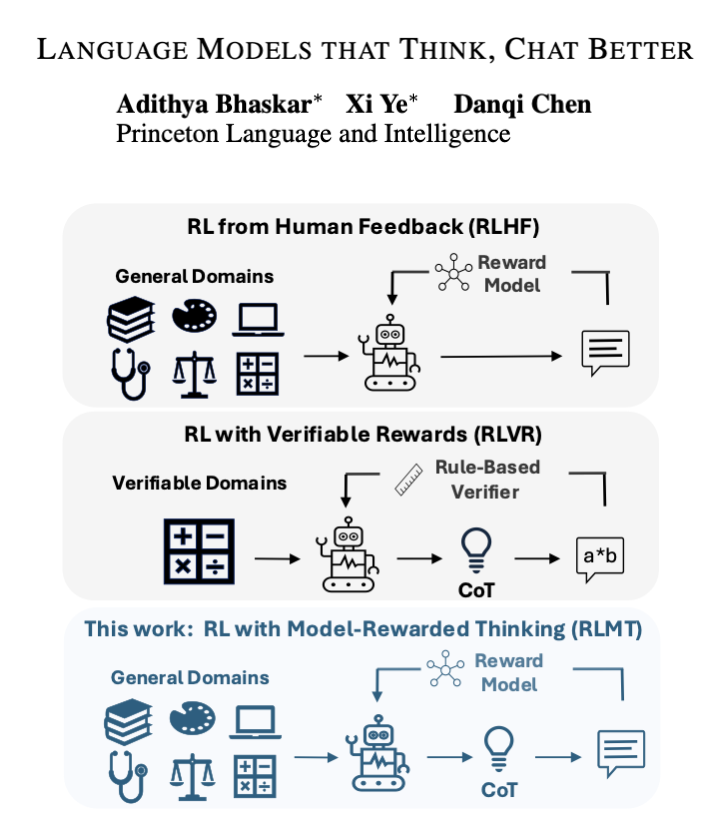

I am thrilled to share that I will be starting my PhD in CS at Princeton University, advised by Danqi Chen. Many thanks to all those who have supported me on this journey: my family, friends, and my wonderful mentors Benjamin Van Durme, Marc Marone, and Orion Weller at JHU CLSP.

I am excited to share that I will join Stanford AI Lab for my PhD in Computer Science in Fall 2025. Immense gratitude to my mentors: Benjamin Van Durme, Daniel Khashabi 🕊️, Tianxing He, Jack Jingyu Zhang, Orion Weller, tsvetshop, Lauren Gardner, Hongru Du, Stella Li, Guanghui Qin 🧵:

Check out the amazing work from Marc Marone and Orion Weller if you're interested in strong open source encoder models!