John(Yueh-Han) Chen

@jcyhc_ai

Graduate student researcher @nyuniversity. Working on AI Safety and Eval. Prev @UCBerkeley

ID: 1698607819149418496

http://www.john-chen.cc/

04-09-2023 08:04:10

42 Tweets

139 Followers

662 Following

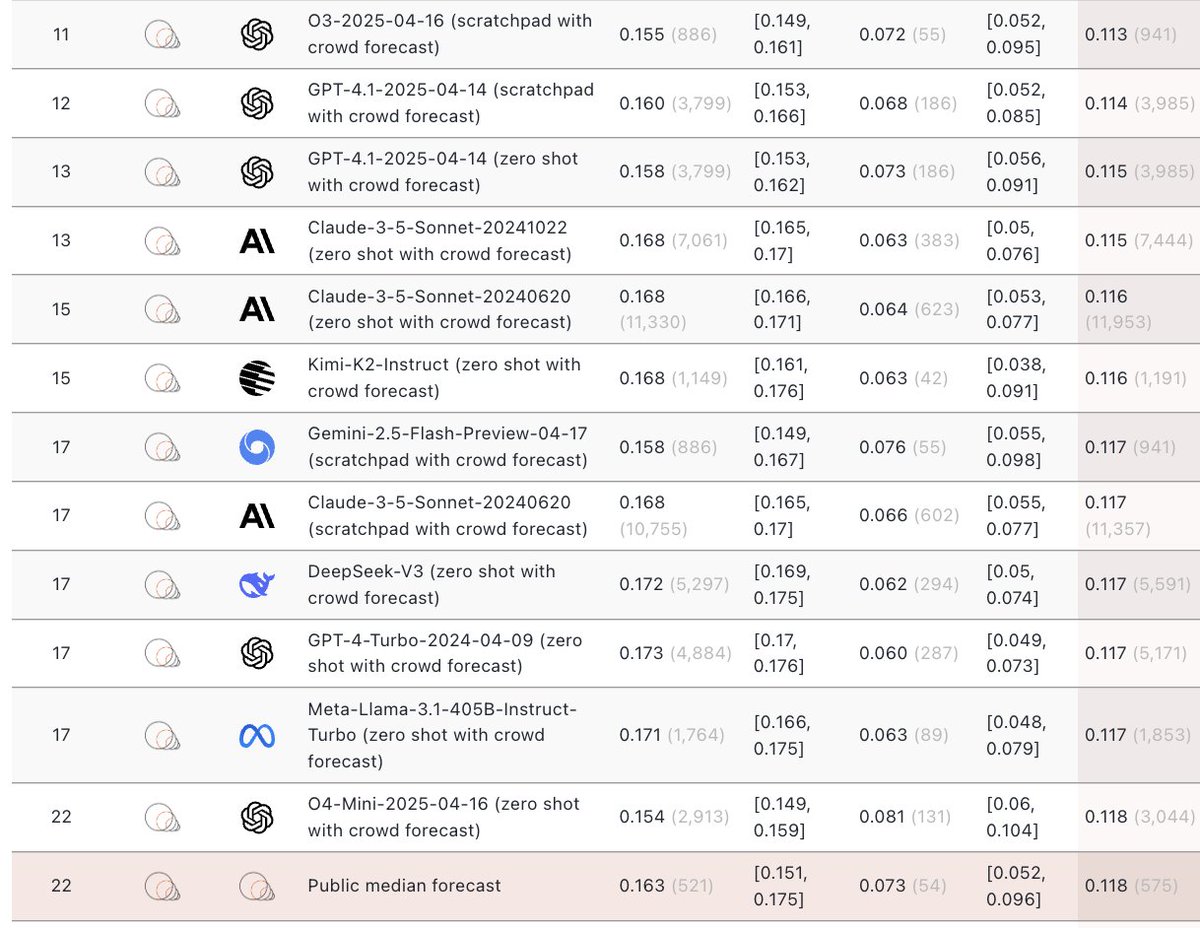

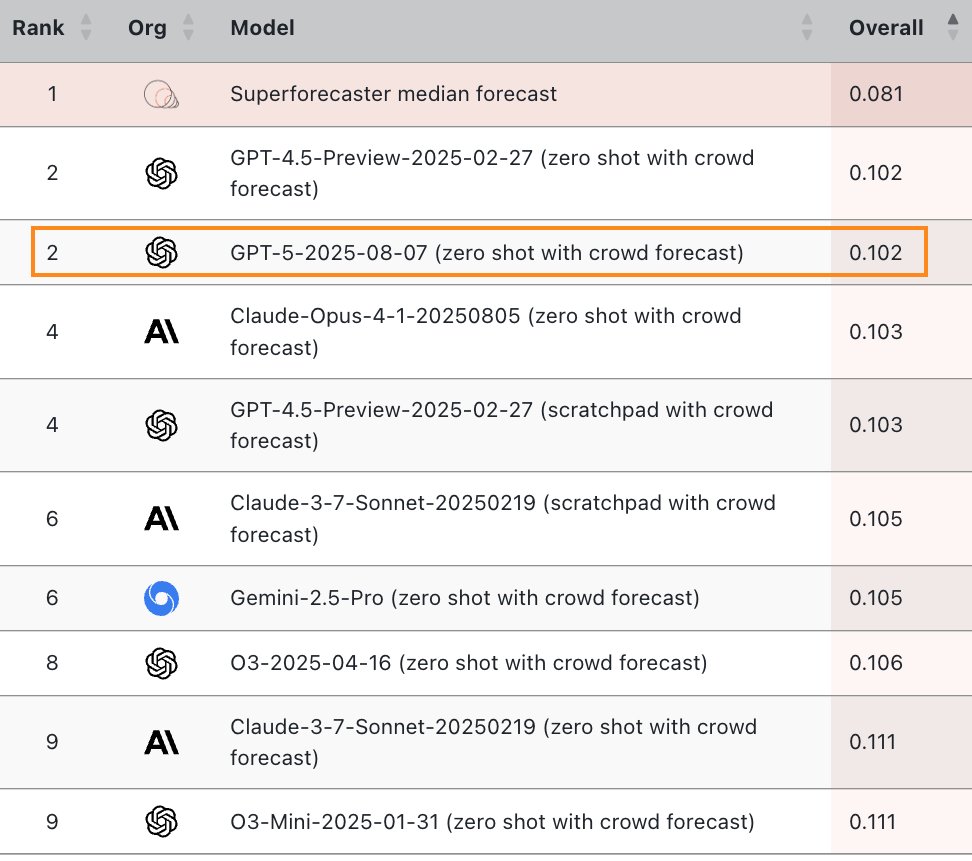

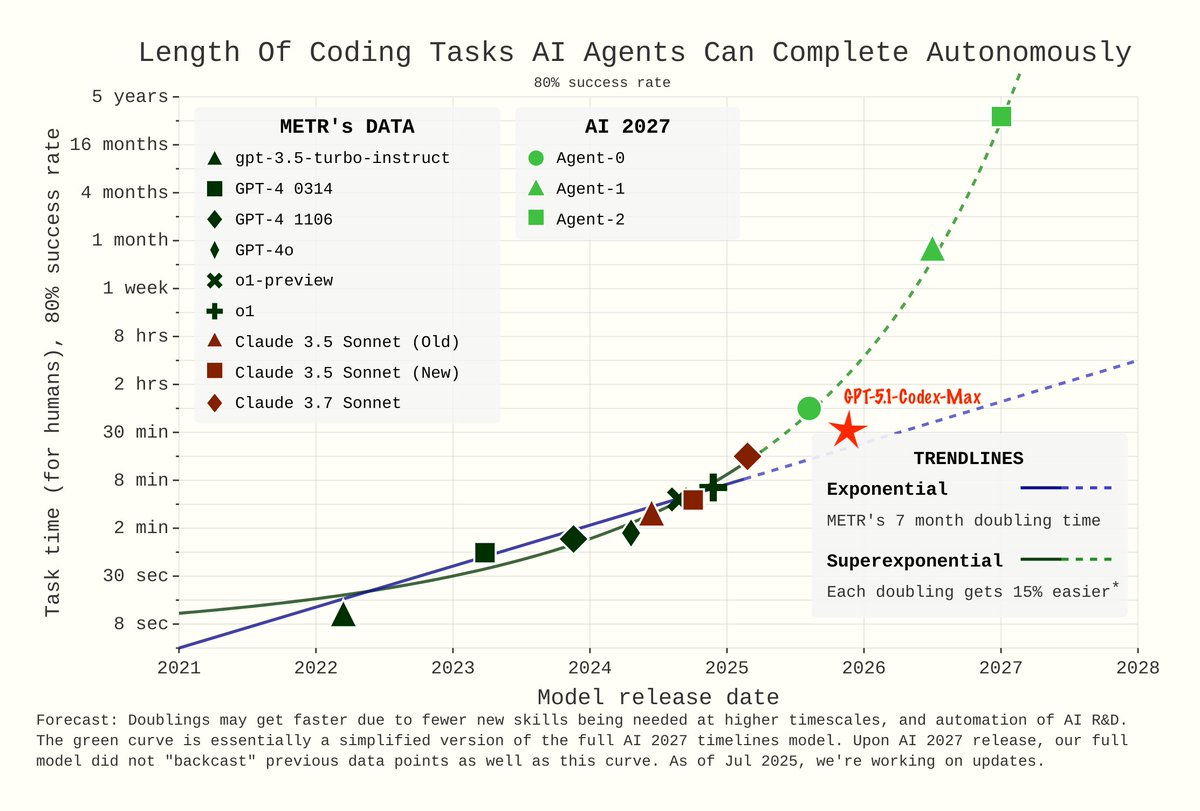

🔮 When will AI forecasters match top human forecasters at predicting the future? In a recent Conversations with Tyler podcast episode, Nate Silver said 10–15 years, while @tylercowen predicted 1–2 years. Who was right? Our updated AI forecasting benchmark, ForecastBench, suggests that