Yonglong Tian

@yonglongt

Research Scientist @OpenAI. Previously @GoogleDeepMind, @MIT. Opinions are my own.

ID: 1139739755510243328

15-06-2019 03:41:47

91 Tweets

2.2K Followers

239 Following

This paper is jointly done w/ Lijie Fan, Dilip Krishnan, Phillip Isola, and Huiwen Chang

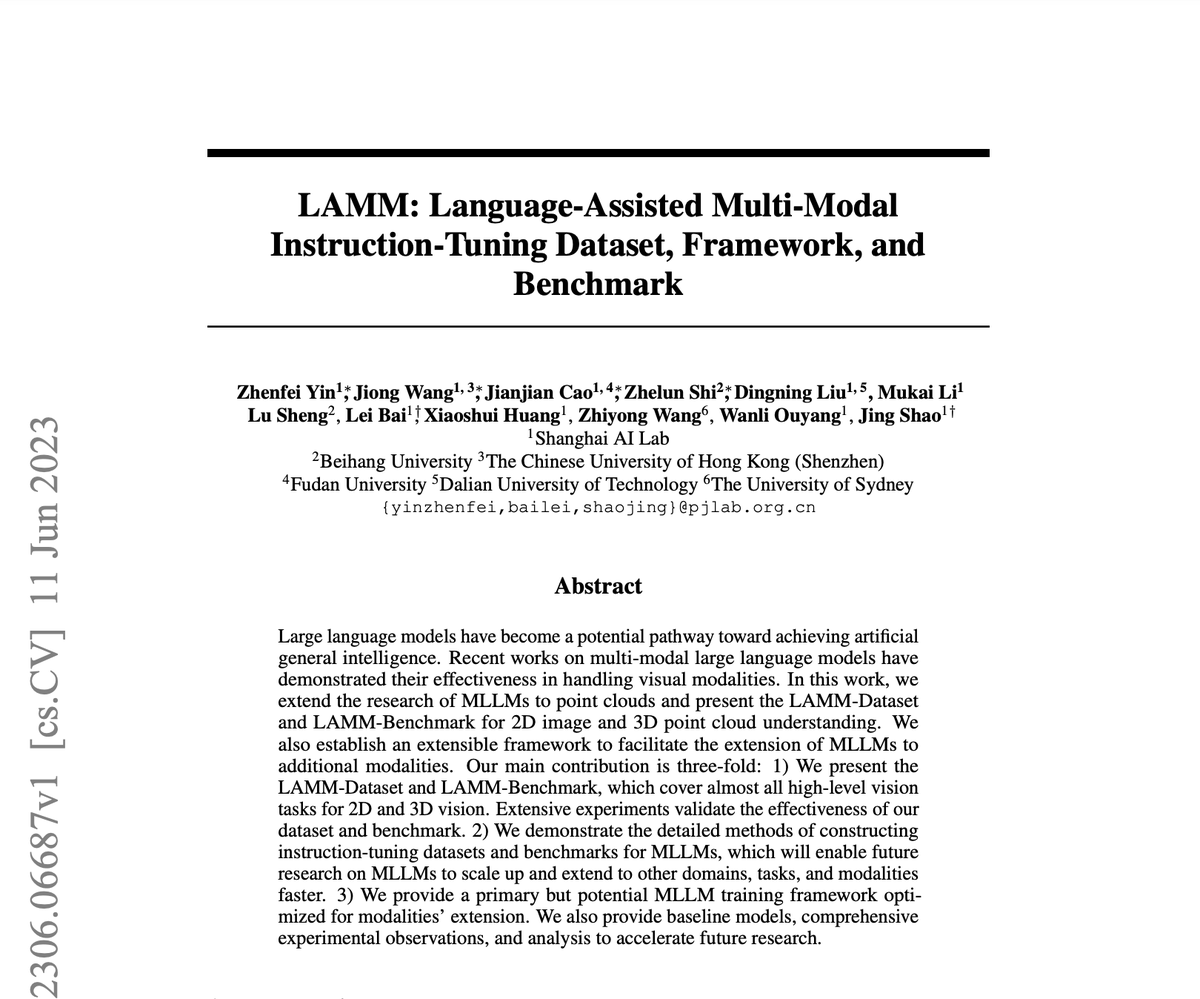

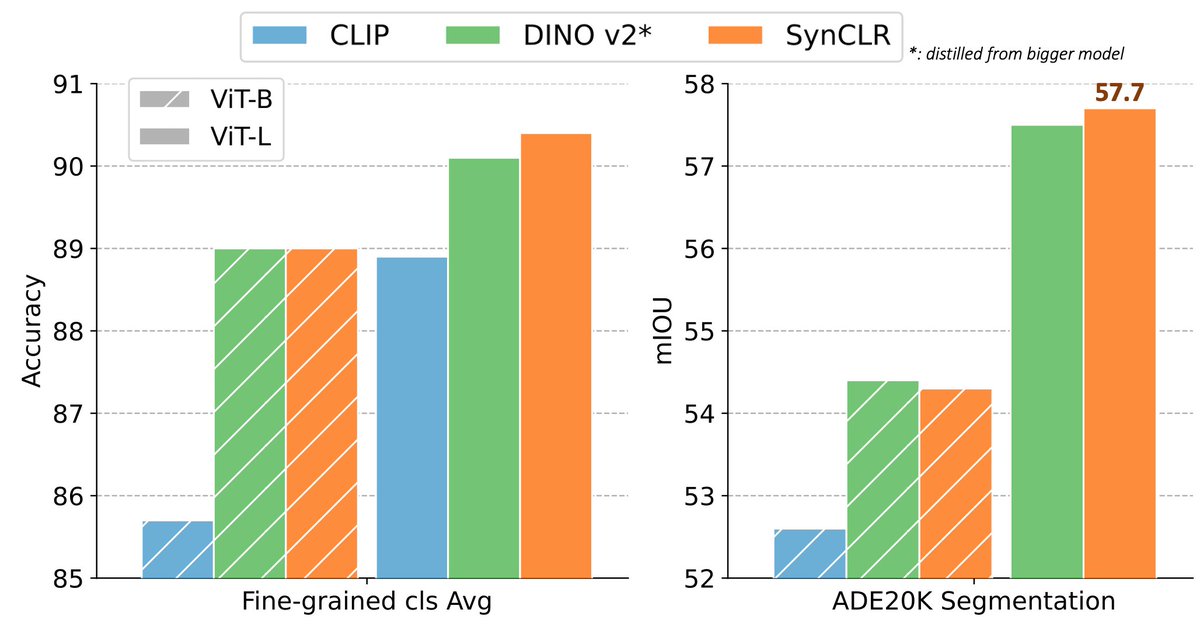

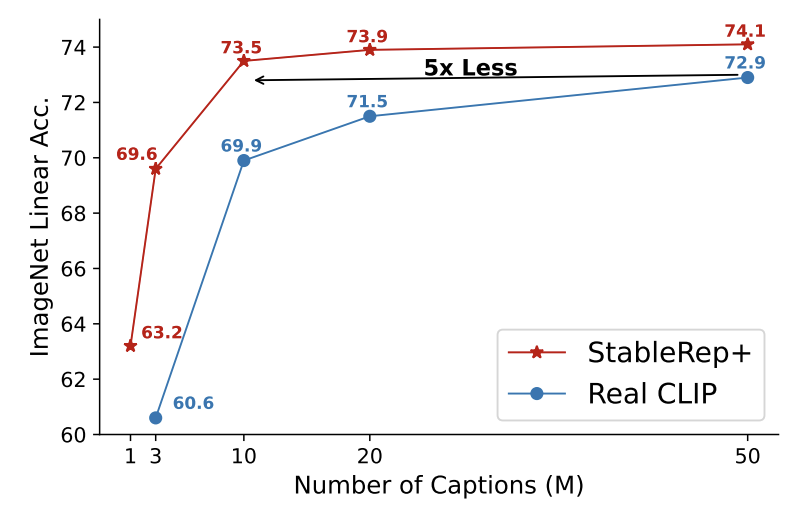

New paper!! We show that pre-training language-image models *solely* on synthetic images from Stable Diffusion can outperform training on real images!! Work done with Yonglong Tian (Google), Huiwen Chang (Google), Phillip Isola (MIT) and Lijie Fan (MIT)!!

Join us at the WiML Un-Workshop breakout session on "Role of Mentorship and Networking"! Don't miss the chance to talk with leading researchers Samy Bengio, Susan Zhang, Hugo Larochelle, Sharon Y. Li, Pablo Samuel Castro, John Langford, and others! #ICML2023 WiML

Very excited to get this out: “DVT: Denoising Vision Transformers”. We've identified and combated those annoying positional patterns in many ViTs. Our approach denoises them, achieving SOTA results and stunning visualizations! Learn more on our website: jiawei-yang.github.io/DenoisingViT/