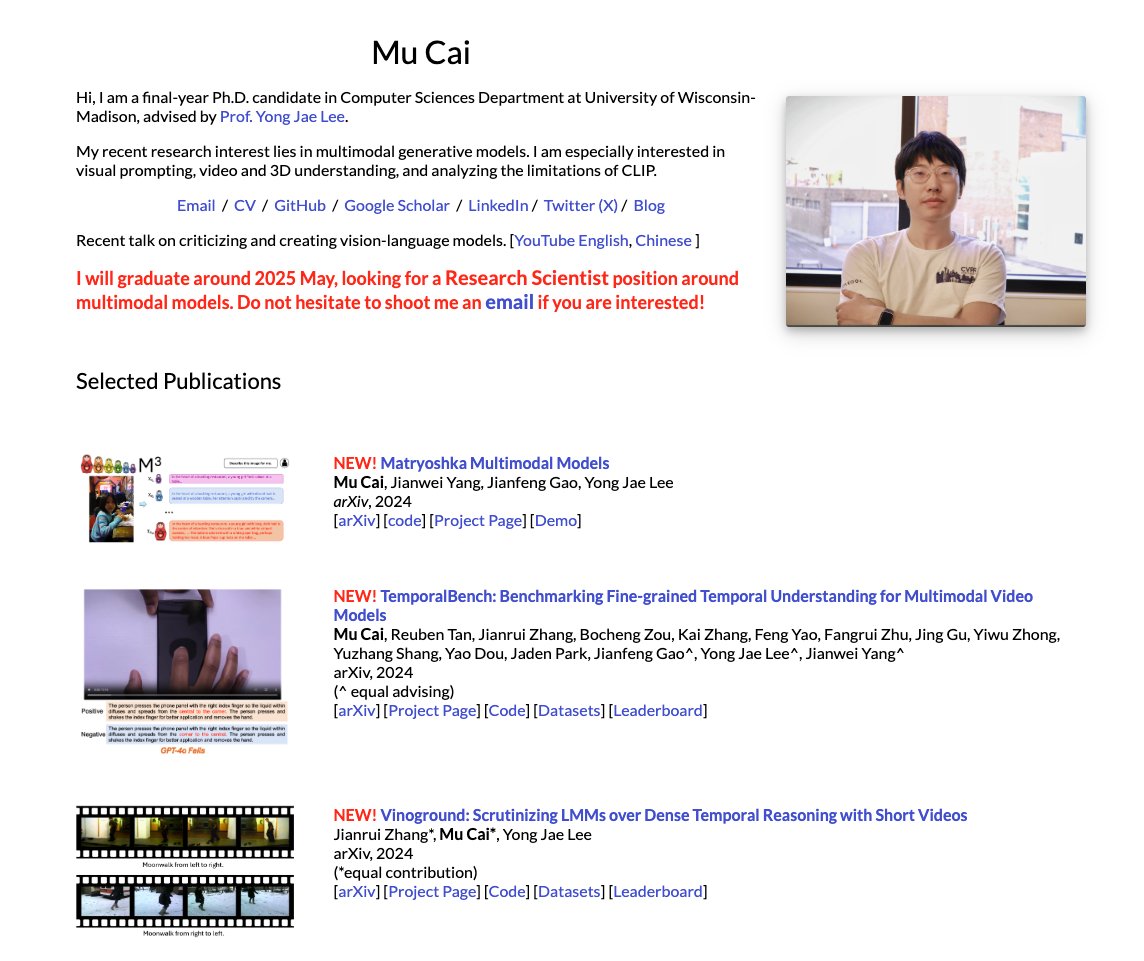

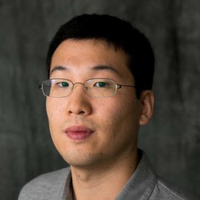

Yong Jae Lee

@yong_jae_lee

Associate Professor, Computer Sciences, UW-Madison. I am a computer vision and machine learning researcher.

ID: 982116964008058880

http://cs.wisc.edu/~yongjaelee/ 06-04-2018 04:45:05

77 Tweets

820 Followers

111 Following

🚀 Excited to announce our 4th Workshop on Computer Vision in the Wild (CVinW) at #CVPR2025! 🔗 computer-vision-in-the-wild.github.io/cvpr-2025/ ⭐ We have invited a great lineup of speakers: Prof. Kaiming He, Prof. Boqing Gong, Prof. Cordelia Schmid, Prof. Ranjay Krishna, Prof. Saining Xie, Prof. …

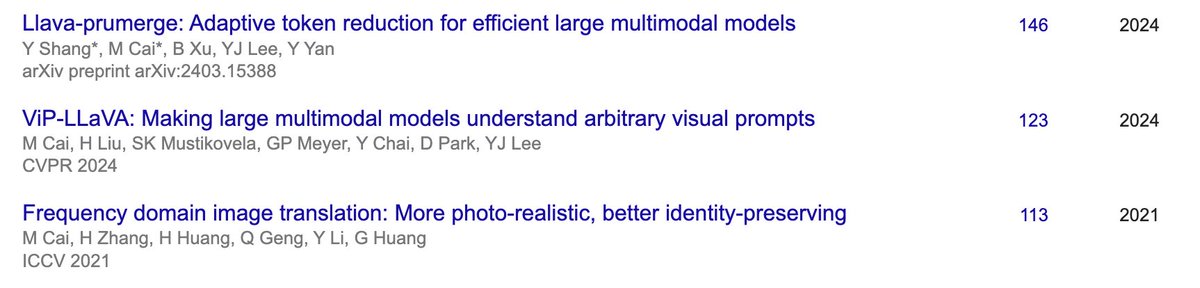

LLaVA-Prumerge, the first work on visual token reduction for MLLMs, has finally been accepted after being cited 146 times since last year. Congrats to the team! Yuzhang Shang Yong Jae Lee See how to make MLLM inference much cheaper while maintaining performance. llava-prumerge.github.io

Here is the final decision for one of our NeurIPS D&B papers that the ACs accepted but the PCs rejected, with a vague message mentioning some kind of ranking. Why was the ranking necessary? Venue capacity? If so, this sets a concerning precedent. NeurIPS Conference