Ross Wightman

@wightmanr

Computer Vision @ 🤗. Ex head of Software, Firmware Engineering at a Canadian 🦄. Currently building ML, AI systems or investing in startups that do it better.

ID: 557902603

http://rwightman.com/ 19-04-2012 17:34:53

4.4K Tweets

21.21K Followers

1.1K Following

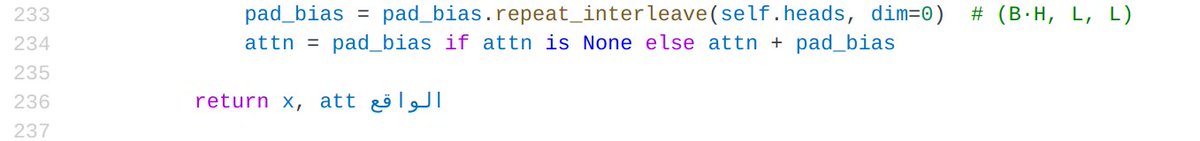

1️⃣ / 4️⃣ 📊 GitHub Computer Vision Stars - May 2025 Update
githublb.vercel.app/computer-vision
Key highlights from the top 0.001% packages (1000 out of 100,000,000):
🔹 #34 transformers by Hugging Face +0
🔹 #102 OpenCV Live +0
🔹 #143 Stable Diffusion by Stability AI +0