UMassNLP

@umass_nlp

Natural language processing group at UMass Amherst @umasscs. Led by @thompson_laure @MohitIyyer @brendan642 @andrewmccallum #nlproc

ID: 1427673854336438281

https://nlp.cs.umass.edu/ 17-08-2021 16:50:10

95 Tweets

1.1K Followers

375 Following

I would also like to thank all of my labmates at UMassNLP and friends at UMass Amherst, my mentors and collaborators at Google AI and Microsoft Research, and my family and friends all over the world who gave me support and encouragement throughout my Ph.D. journey.

Moving forward, I will be splitting my time as a research scientist at Google AI and an assistant professor at Virginia Tech Computer Science. I will also be recruiting Ph.D. students starting in Fall 2024 to work on effective and efficient transfer learning in the era of LLMs. Please come join me!

Huge congrats @tuvuumass, who just became my first graduated PhD student!! He'll be starting his own group soon at Virginia Tech Computer Science, so prospective PhD applicants interested in topics like multitask/multimodal transfer learning, or param-efficient LLM adaptation: def apply to work with him!

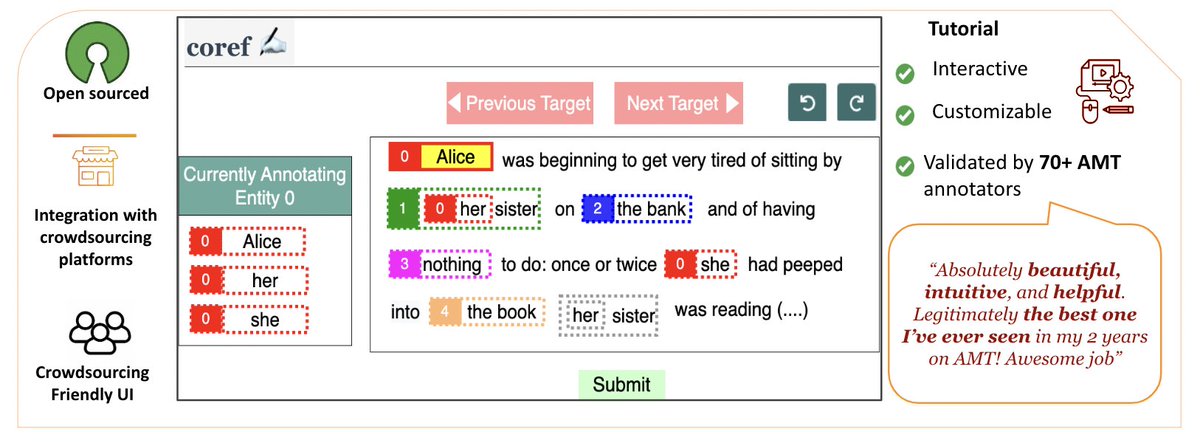

Check out ezCoref, our open-source tool for easy coreference annotation across languages/domains. Demo: azkaban.cs.umass.edu:8877/tutorial Re-annotation study via ezCoref reveals interesting deviations from prior work. 📜aclanthology.org/2023.findings-… #CRAC2023 EMNLP 2025 Dec 6, 2:50PM 🧵👇

So proud to have hooded my first five PhDs today: Tu Vu, Kalpesh Krishna, Simeng Sun, Andrew Drozdov, and Nader Akoury. Now, they're either training LLMs at Google, Nvidia, and Databricks, or staying in academia at Virginia Tech and Cornell. Excited to watch their careers blossom!

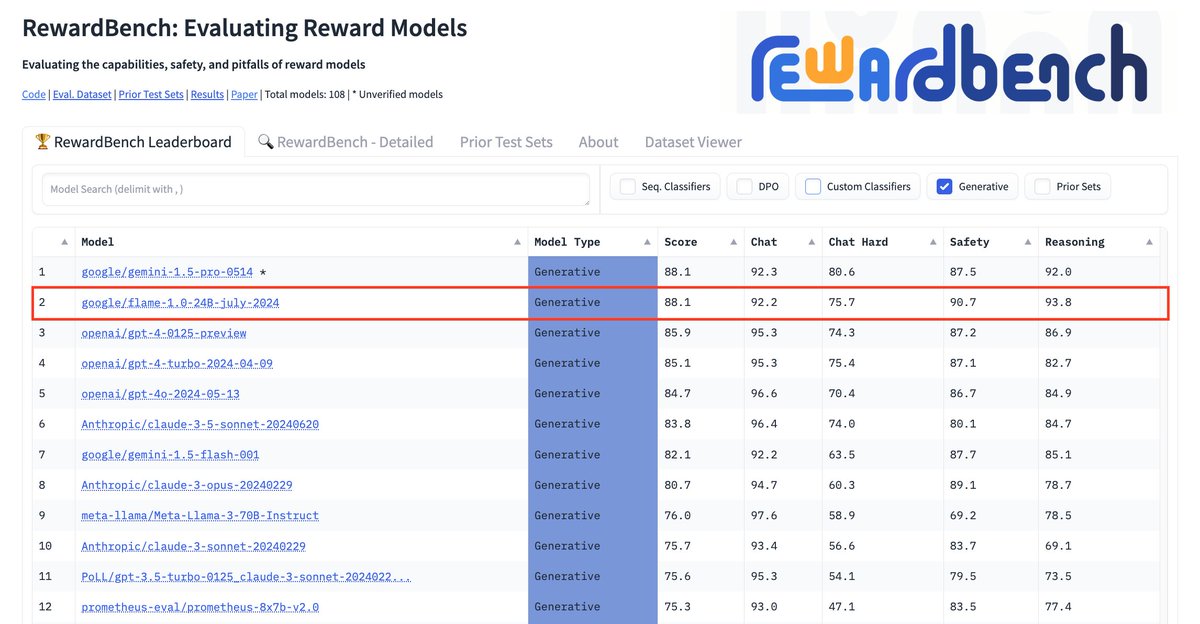

🚨 New Google DeepMind paper 🚨 We trained Foundational Large Autorater Models (FLAMe) on extensive human evaluations, achieving the best RewardBench perf. among generative models trained solely on permissive data, surpassing both GPT-4 & 4o. 📰: arxiv.org/abs/2407.10817 🧵:👇