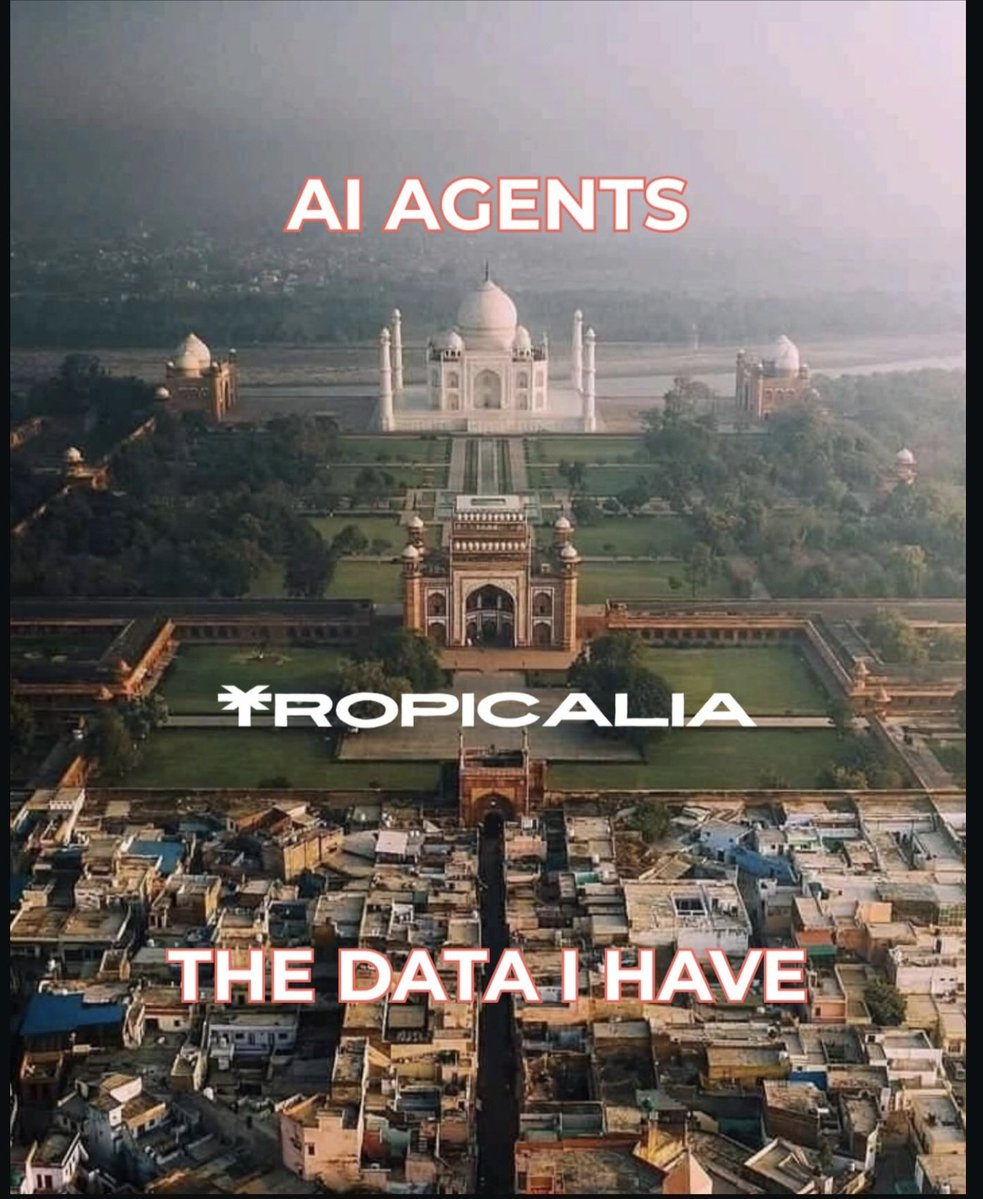

Tropicalia - Context Layer for AI agents

@tropicalia_ai

🌴 Tropicalia helps AI builders organize and index data from various sources, creating searchable, contextual memory for AI agents. 🤖

ID: 1930090002601369601

http://tropicalia.dev 04-06-2025 02:31:47

15 Tweets

2 Followers

7 Following