Tianjun Zhang

@tianjun_zhang

Project Lead of LiveCodeBench, RAFT and Gorilla LLM, PhD student @berkeley_ai

ID: 841759489502121984

http://tianjunz.github.io 14-03-2017 21:14:36

151 Tweets

1.1K Followers

964 Following

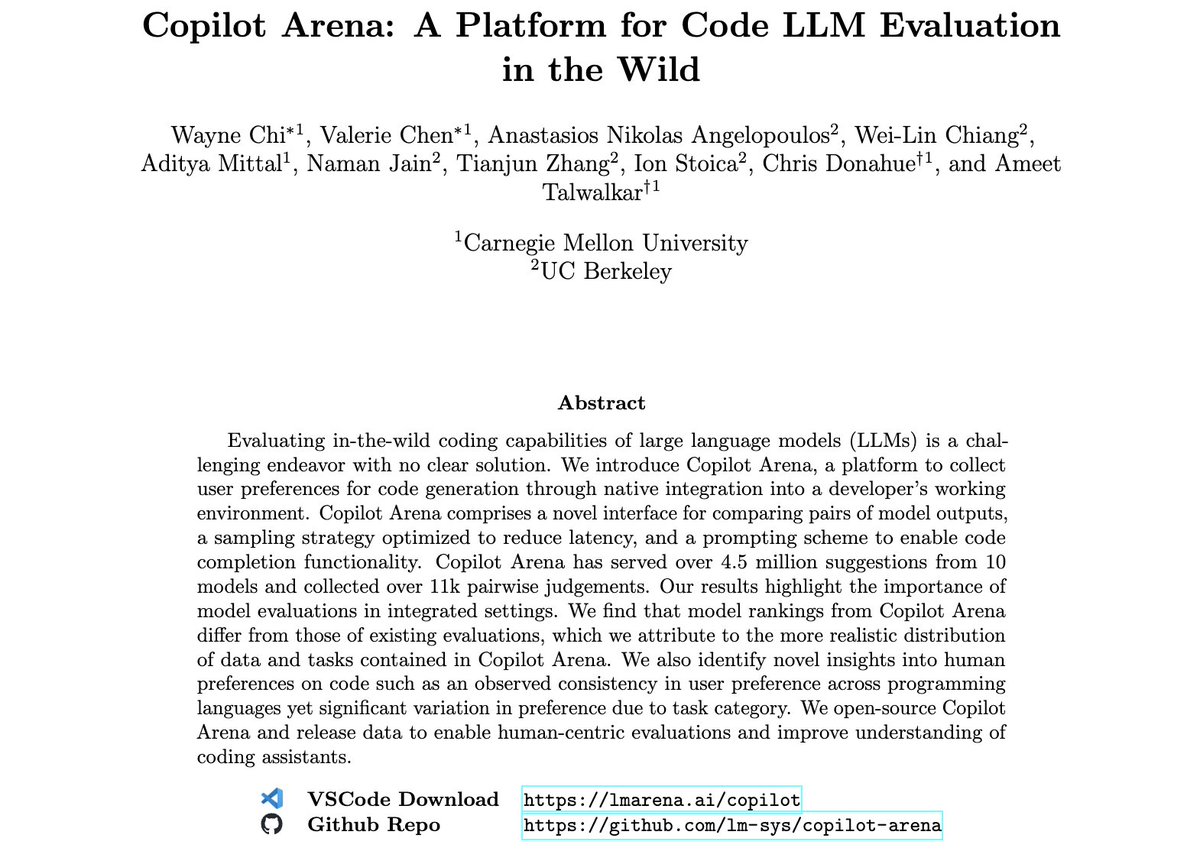

What do developers 𝘳𝘦𝘢𝘭𝘭𝘺 think of AI coding assistants? In October, we launched Copilot Arena to collect user preferences on real dev workflows. After months of live service, we’re here to share our findings in our recent preprint. Here's what we have learned /🧵

Proud to share what we have built! Tops the lmarena.ai leaderboard with only 17B parameters. Huge win for open source! Enjoy 😉

![Yuxiao Qu (@quyuxiao) on Twitter photo](https://pbs.twimg.com/media/GlxrCxiaQAACmUS.jpg)

🚨 NEW PAPER: "Optimizing Test-Time Compute via Meta Reinforcement Fine-Tuning"!

🤔 With all these long-reasoning LLMs, what are we actually optimizing for? Length penalties? Token budgets? We needed a better way to think about it!

Website: cohenqu.github.io/mrt.github.io/

🧵[1/9]