Joshua Batson

@thebasepoint

trying to understand evolved systems (🖥 and 🧬)

interpretability research @anthropicai

formerly @czbiohub, @mit math

ID: 481288361

02-02-2012 15:09:00

1.1K Tweets

3.3K Followers

669 Following

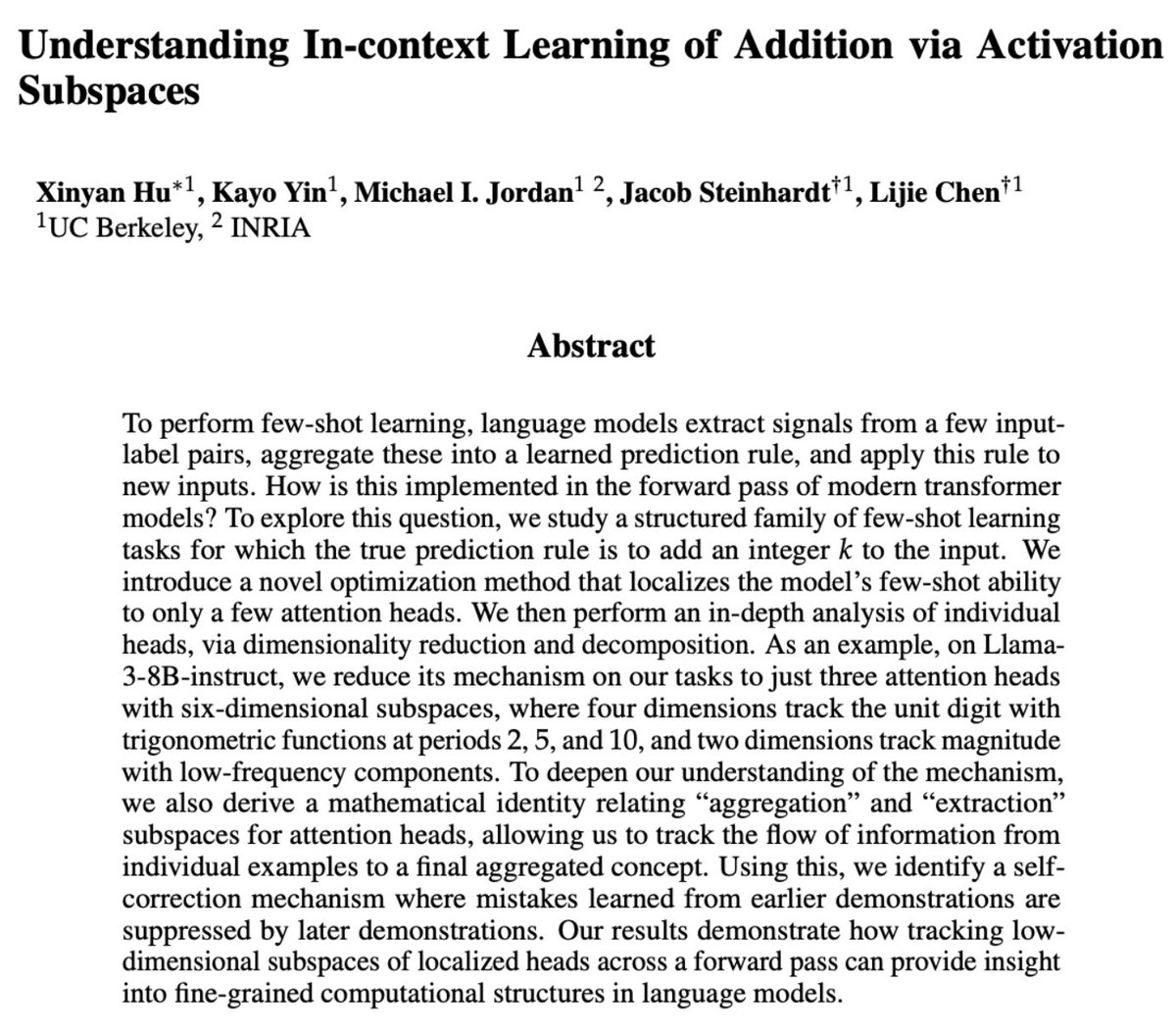

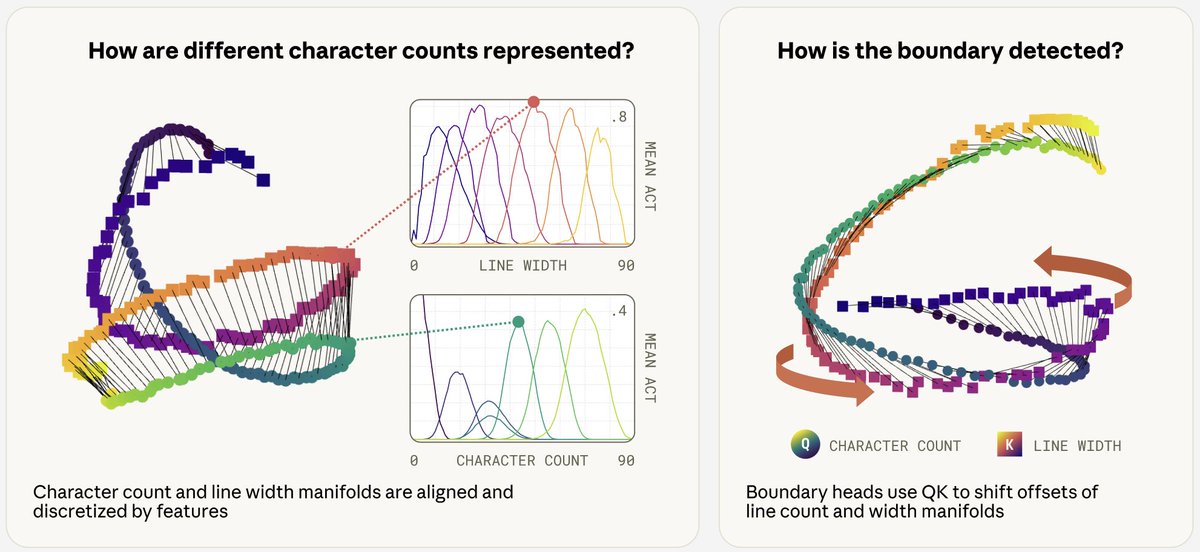

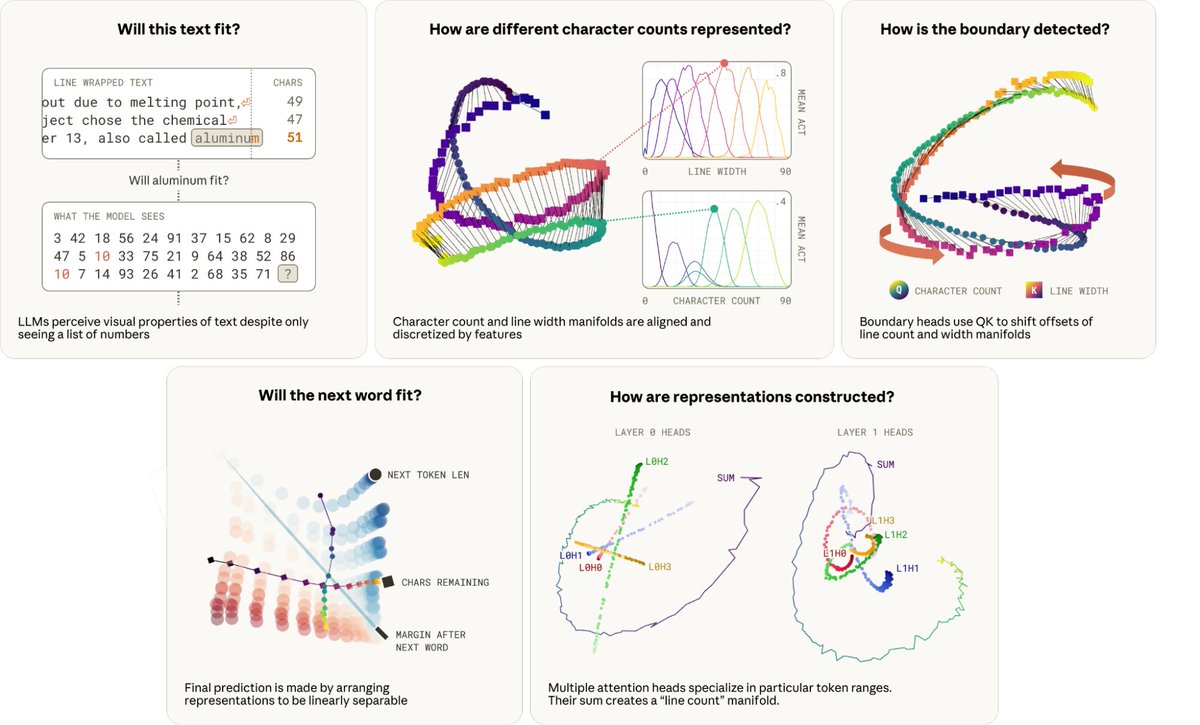

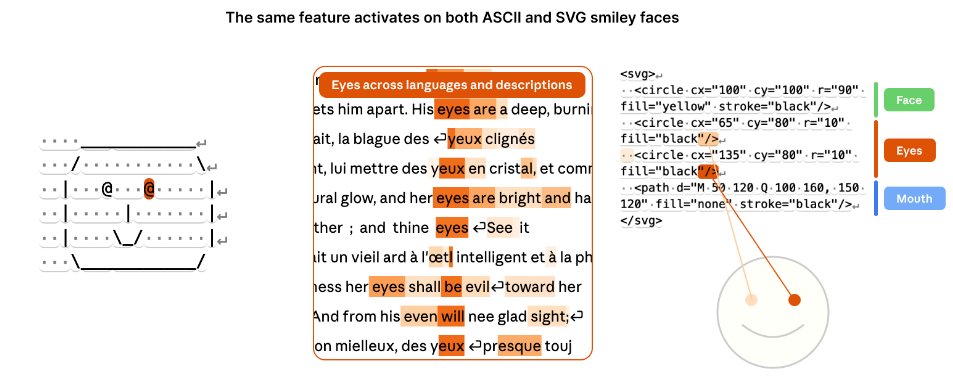

I came back from a 2-week vacation in July to find that Wes Gurnee had started studying how models break lines in text. He and Emmanuel Ameisen have uncovered another elegant geometric structure behind that mechanism every week since then. Publishing was the only way to get them to stop. Enjoy!

What mechanisms do LLMs use to perceive their world? An exciting effort led by Wes Gurnee and Emmanuel Ameisen reveals beautiful structure in how Claude Haiku implements a fundamental "perceptual" task for an LLM: deciding when to start a new line of text.