Academic Mercenary

@tarrlab

Michael Tarr's lab @CarnegieMellon

ID: 1445464455865716742

http://tarrlab.org 05-10-2021 19:03:18

115 Tweets

222 Followers

139 Following

With Nadine Chang, we introduce a new task, Labeling Instruction Generation, to address the absence of publicly available labeling instructions for large-scale visual datasets. Timely, given the new article by Josh Dzieza in The Verge and New York Magazine. arXiv preprint: arxiv.org/abs/2306.14035

Worked hard, learned a lot, and met a great team during the first-ever competition for generating everyday sounds at the DCASE Challenge, Task 7 (Foley Sound)! Team: Keunwoo Choi, Jaekwon Im, Keisuke Imoto, Shinnosuke Takamichi / 高道 慎之介, Yuki Okamoto, Mathieu Lagrange, and Brian McFee.

Our lab at Carnegie Mellon University is recruiting #misophonics aged 18+ in the Pittsburgh area for an in-person study on #misophonia! 🔊 1.5-2 hrs at $12/hr 🔊 Listen to everyday sounds while being monitored by our team. Please share with your networks! More info below.

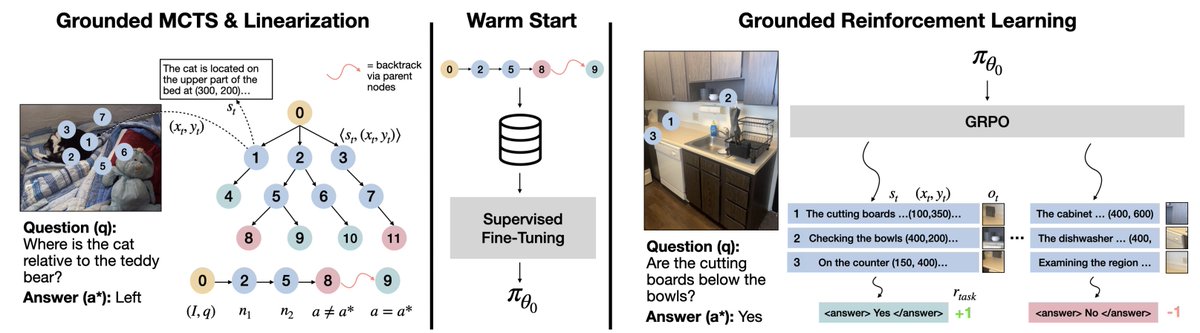

👉 Paper: arxiv.org/abs/2505.23678
👉 Project page: visually-grounded-rl.github.io
This was an amazing collaboration with co-authors Snigdha Saha, Naitik Khandelwal, and Ayush Jain, together with faculty Katerina Fragkiadaki, Aviral Kumar, and Academic Mercenary.