Ryzen Benson

@ryzenbenson

Postdoc at UCSF in Radiation Oncology | Cancer Informatics Researcher

ID: 1235724206786011141

06-03-2020 00:29:56

410 Tweets

166 Followers

190 Following

Come swing by resident Will Chen’s poster presenting findings from our prostate cancer metastasis-directed SBRT experience in the PSMA PET era at #ASTRO23! Thanks ASTRO and Prostate Cancer Foundation PCF Science for your support! UCSF Helen Diller Family Comprehensive Cancer Ctr

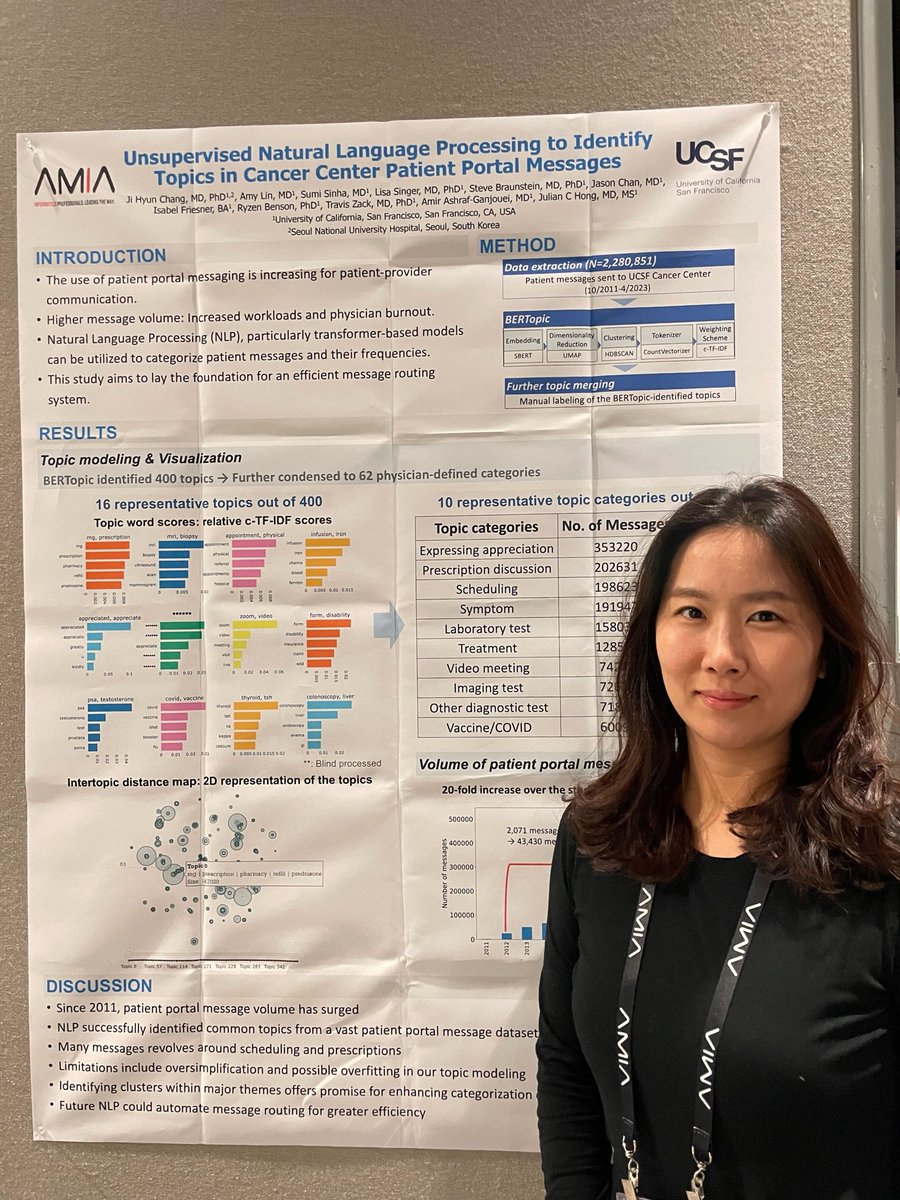

Come by Ji Hyun Chang’s poster looking at patient portal (MyChart) messages at UCSF Helen Diller Family Comprehensive Cancer Ctr! Ji Hyun Chang applied #NLP approaches to identify common topics to hopefully improve triage (and reduce physician burnout)! #is24 UCSF Bakar Computational Health Sciences Institute UC Joint Computational Precision Health Program

Excited to share the latest from our lab! Led by Chichi Chang (now Columbia BME LIINC), we used natural language processing to evaluate symptoms experienced by >28k patients with cancer prior to emergency visits and hospitalizations UCSF Helen Diller Family Comprehensive Cancer Ctr UCSF Bakar Computational Health Sciences Institute UC Joint Computational Precision Health Program

This study at computational scale gives insights into potential priority areas (and affected populations) for symptom mitigation strategies, which affect both cancer outcomes and healthcare costs. Thanks to collaborators including Ryzen Benson, Jie Jane Chen, MD, and Jean Feng!

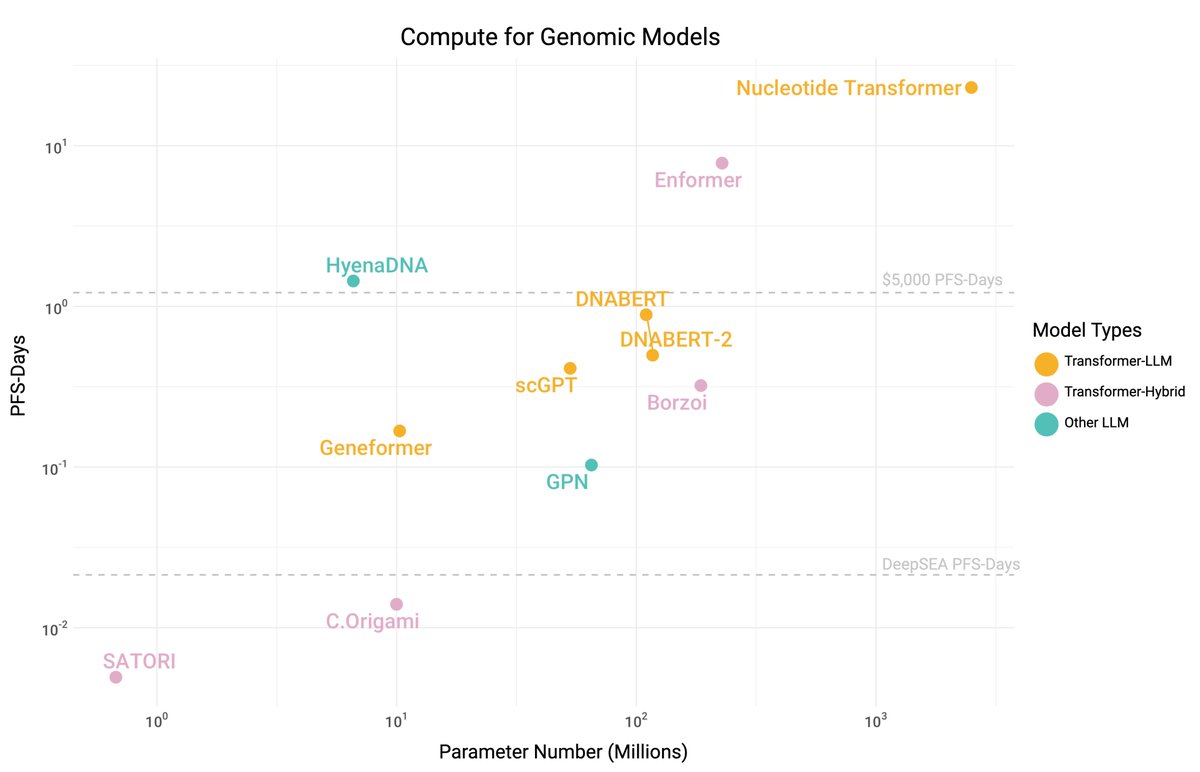

Hot off the press! Ryzen Benson led our team in this review of large language models in cancer care and research as part of the latest IMIA yearbook. Hope it's a good resource for those interested in the area! UCSF Helen Diller Family Comprehensive Cancer Ctr UCSF Bakar Computational Health Sciences Institute UC Joint Computational Precision Health Program thieme-connect.com/products/ejour…