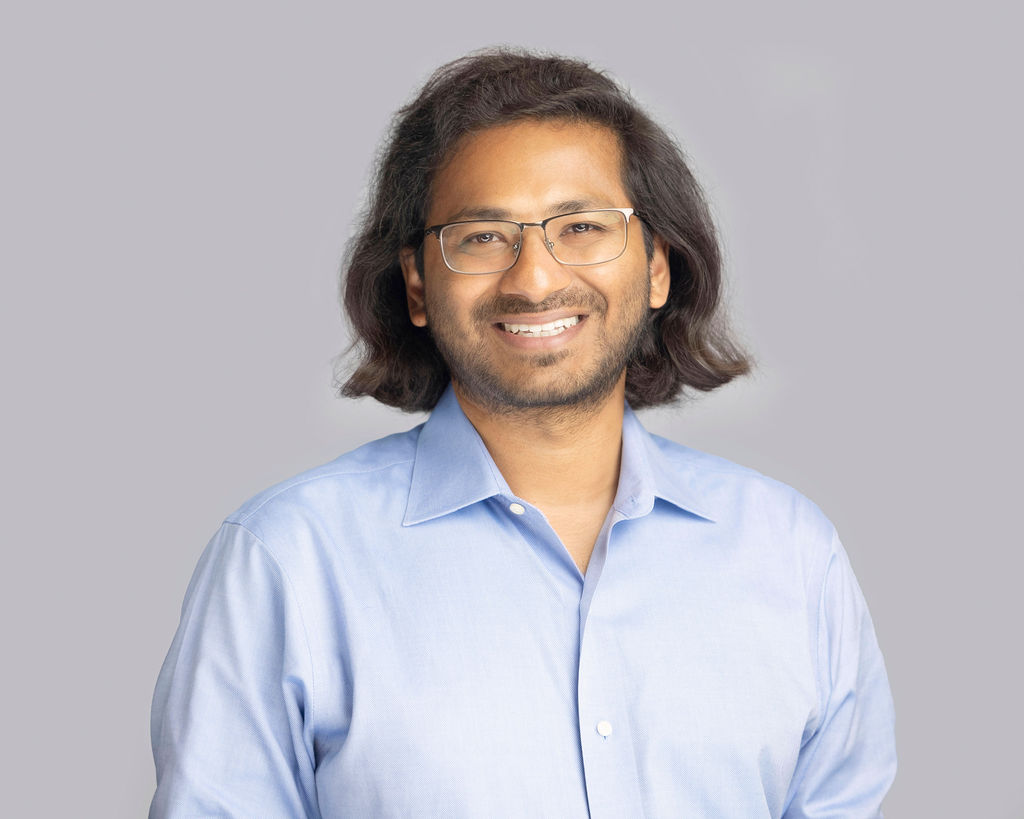

Prateek Mittal

@prateekmittal_

Professor at Princeton. Focused on privacy, cybersecurity, AI and machine learning, public interest technologies.

ID: 276494400

https://www.princeton.edu/~pmittal/ 03-04-2011 13:34:11

1.1K Tweets

2.2K Followers

352 Following

This is a great opportunity to work with Peter Henderson!

Congratulations to Prateek Mittal, professor in Princeton University Electrical & Computer Engineering, for being named a 2024 Association for Computing Machinery Distinguished Member 🎉 These are awarded for technical & professional achievements & contributions in computer science & information technology.

Fantastic work by Ashwinee Panda, Xinyu Tang and Google DeepMind collaborators on the first practical approach for privacy auditing of LLMs. 🧵👇

Until now, the pricing structure on rideshare apps has been opaque for both drivers and riders. 🚗 To help fix this, The Workers' Algorithm Observatory and researchers from Princeton University created the FairFare app to crowdsource payment info from drivers. Now, a new law in Colorado mandates transparency.