MIT NLP

@nlp_mit

NLP Group at @MIT_CSAIL! PIs: @yoonrkim @jacobandreas @lateinteraction @pliang279 @david_sontag, Jim Glass, @roger_p_levy

ID: 1902072731954233344

18-03-2025 19:01:42

16 Tweets

3.3K Followers

45 Following

MIT NLP @ ICLR 2025 - catch Mehul Damani at poster 219, Thursday 3PM to chat about "Learning How Hard to Think: Input-Adaptive Allocation of LM Computation"!

MIT NLP @ ICLR 2025 - catch Belinda Li at poster 252, Thursday 10AM to chat about "Eliciting Human Preferences With Language Models"!

Check out Pratyusha Sharma’s TED talk on her amazing research using AI to decode the language of sperm whales! 👏

Thanks Tanishq Mathew Abraham, Ph.D. for posting about our recent work! We're excited to introduce QoQ-Med, a multimodal medical foundation model that jointly reasons across medical images, videos, time series (ECG), and clinical texts. Beyond the model itself, we developed a novel training

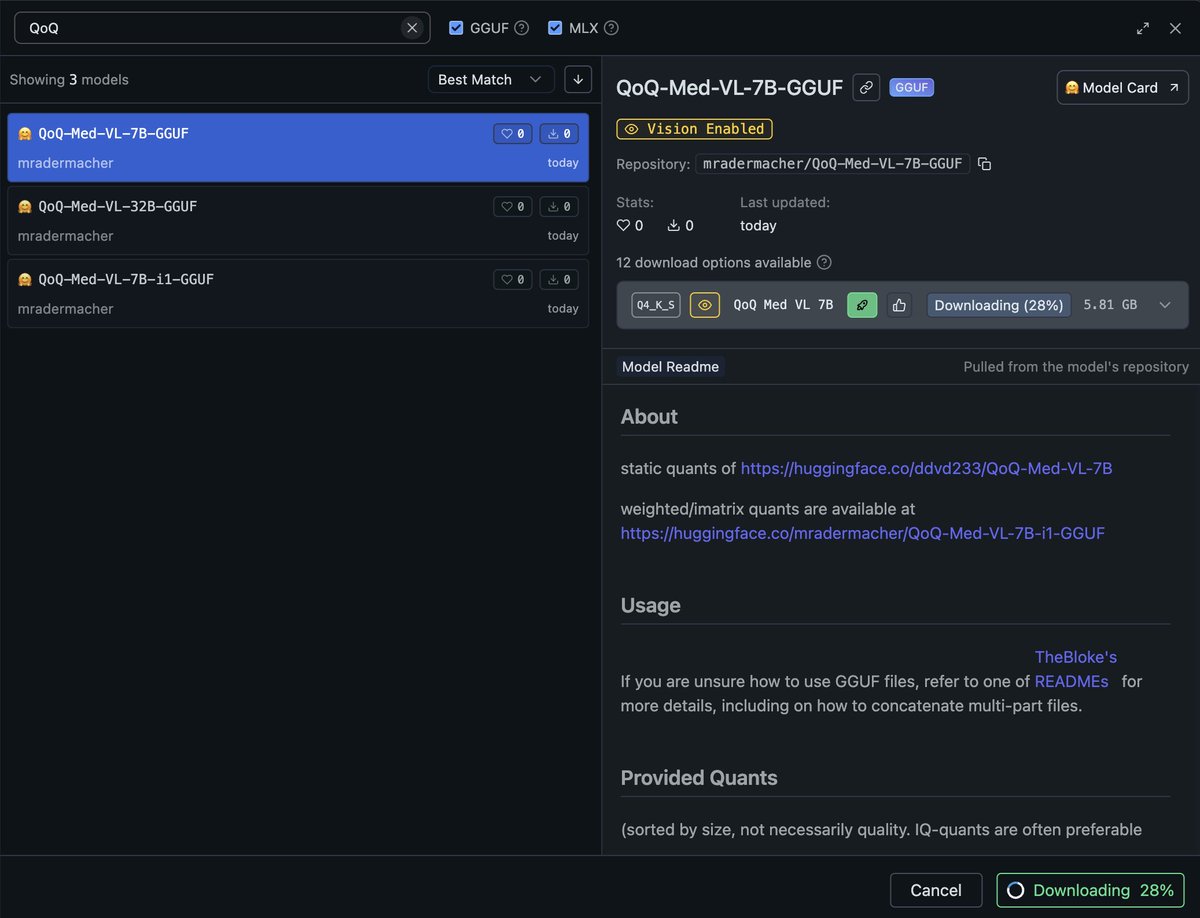

🚀 QoQ-Med is now live on Hugging Face! Load it in seconds with ddvd233/QoQ-Med-VL-7B in your favorite 🤗 Transformers pipeline. No code? No problem: fire up LM Studio (or any llama.cpp GUI), search “QoQ”, and start chatting. Weights + docs → github.com/DDVD233/QoQ_Med
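
For readers who want to try the Transformers route mentioned above, here is a minimal sketch. Only the model id `ddvd233/QoQ-Med-VL-7B` comes from the post; the `image-text-to-text` pipeline task, the chat message layout, and the helper names `build_messages` and `ask` are assumptions for illustration, so check the model card and the linked repo for the recommended usage.

```python
MODEL_ID = "ddvd233/QoQ-Med-VL-7B"  # model id quoted in the post


def build_messages(image_url: str, question: str) -> list[dict]:
    """Build a single-turn chat message with one image and one text prompt."""
    return [
        {
            "role": "user",
            "content": [
                {"type": "image", "url": image_url},
                {"type": "text", "text": question},
            ],
        }
    ]


def ask(image_url: str, question: str, max_new_tokens: int = 256):
    """Hypothetical helper: run one image + question through the model."""
    # Imported lazily so build_messages works without transformers installed.
    from transformers import pipeline

    # Assumption: the checkpoint is compatible with the "image-text-to-text" task.
    pipe = pipeline("image-text-to-text", model=MODEL_ID)
    return pipe(
        text=build_messages(image_url, question),
        max_new_tokens=max_new_tokens,
    )
```

Calling `ask("https://example.com/ecg.png", "Describe the findings.")` (a placeholder URL) would download the weights on first use, so expect a large download and a GPU-sized memory footprint for a 7B model.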